Why Quantum Computers Need Cryogenic Control Electronics

TL;DR: JAX and TensorFlow represent competing philosophies for harnessing TPU power: JAX's functional purity enables elegant composability, while TensorFlow's mature tooling serves production systems at scale. Framework choice determines not just performance, but which AI futures become computationally feasible.

In 2018, Google Research quietly released JAX, a library that would reshape how engineers think about training massive AI models. What started as a successor to the obscure Autograd library has become the secret weapon behind some of today's most sophisticated machine learning systems. JAX didn't just add another framework to an already crowded ecosystem - it fundamentally rethought how software should communicate with specialized hardware like Tensor Processing Units. Within a few years, companies like Kakao achieved a 2.7x throughput increase for their large language models simply by switching to JAX on Cloud TPUs. That's not incremental improvement. That's a paradigm shift in how we harness silicon to teach machines.

The story of TPU software isn't just about speed. It's about a quiet revolution in how humans and machines collaborate to solve problems that were impossible a decade ago. As AI models grow from millions to hundreds of billions of parameters, the frameworks that feed these digital brains have become as critical as the chips themselves. The difference between a model that takes three weeks versus three days to train isn't just convenience - it determines which research ideas get tested and which startups survive their first year.

Traditional CPUs evolved over decades with general-purpose computing in mind. GPUs borrowed heavily from graphics rendering. But TPUs? They were purpose-built from day one for matrix multiplication - the fundamental operation underlying neural networks. Google designed these chips around systolic arrays, elegant grids where data flows rhythmically between processing elements, minimizing the memory bottleneck that cripples other architectures.

Here's the catch: specialized hardware demands specialized software. You can't just throw legacy TensorFlow code at a TPU and expect magic. The XLA compiler serves as the critical translator, transforming high-level operations into TPU-optimized instructions through fusion, layout optimization, and memory allocation tailored to the hardware's unique architecture. Think of XLA as a polyglot interpreter who doesn't just translate words but restructures entire sentences to preserve meaning across languages.

Each TPU generation requires updated software stacks to exploit new capabilities. TPU v2 introduced bfloat16 precision. TPU v4 added optical circuit switching, which lets the interconnect topology be reconfigured dynamically; its MEMS-mirror switches operate on millisecond timescales and enable connections across physical distances reported to exceed 50 meters. These aren't minor spec bumps - they're fundamental changes that software must adapt to, or performance gains evaporate.

JAX's philosophy breaks from the object-oriented traditions that dominated earlier frameworks. Instead of building computational graphs with stateful layers, JAX embraces functional purity. Functions transform data without side effects. This seemingly academic choice unlocks profound practical advantages for TPU computing.

The magic happens through composable function transformations. Need automatic differentiation? Wrap your function in grad(). Want to vectorize across a batch? Apply vmap(). Ready to distribute across TPU cores? Use pmap(). These primitives combine like Lego blocks, letting engineers build complex distributed training pipelines from simple, testable components.
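As a minimal sketch of how these transformations stack, the example below composes `grad`, `vmap`, and `jit` on a toy linear model. The `loss` function and the sample data are hypothetical, chosen only for illustration; `pmap` is left out because it needs multiple devices to show anything useful.

```python
import jax
import jax.numpy as jnp

def loss(w, x, y):
    """Squared error of a linear model on a single example (illustrative)."""
    pred = jnp.dot(w, x)
    return (pred - y) ** 2

# grad() differentiates with respect to the first argument (w) by default.
grad_loss = jax.grad(loss)

# vmap() maps over the leading axis of x and y while sharing w (in_axes=None).
per_example_grads = jax.vmap(grad_loss, in_axes=(None, 0, 0))

# jit() hands the composed function to XLA for compilation.
fast_per_example_grads = jax.jit(per_example_grads)

w = jnp.array([1.0, 2.0])
xs = jnp.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
ys = jnp.array([1.0, 0.0, 3.0])

grads = fast_per_example_grads(w, xs, ys)
print(grads.shape)  # one gradient row per example
```

Each transformation takes a function and returns a function, which is why they can be applied in sequence without any changes to `loss` itself.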

Consider how JAX handles vectorization. The vmap primitive automatically transforms a scalar computation into a batched operation over the leading dimension, eliminating manual loop unrolling. On TPUs with their massive parallel processing elements, this automatic batching means your code naturally exploits the hardware's strengths without low-level tweaking.
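To make the batching behaviour concrete, here is a small sketch that compares a hand-written Python loop with `jax.vmap`. The `normalize` function is a hypothetical per-example computation, not something taken from a particular codebase.

```python
import jax
import jax.numpy as jnp

def normalize(v):
    """Scale one 1-D vector to unit length."""
    return v / jnp.linalg.norm(v)

batch = jnp.array([[3.0, 4.0], [0.0, 2.0], [5.0, 12.0]])

# Manual version: loop over rows in Python, then stack the results.
looped = jnp.stack([normalize(row) for row in batch])

# vmap version: the same per-example function, mapped over the leading axis.
batched = jax.vmap(normalize)(batch)

print(batched)
```

The function body never mentions the batch dimension; `vmap` adds it, and the result matches the loop.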

JAX's automatic differentiation implementation differs fundamentally from TensorFlow's approach. It exposes both forward- and reverse-mode differentiation as composable transformations, so the modes can be nested (for example, forward-over-reverse for Hessians) and then JIT-compiled, enabling efficient computation of higher-order derivatives. For problems requiring second-order optimization - think neural architecture search or meta-learning systems - this architectural choice can deliver large speedups, reportedly up to 10x over PyTorch for specialized workloads.
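A brief sketch of forward-over-reverse differentiation follows, using a toy function whose Hessian is easy to check by hand. The helper names `hessian` and `hvp` are illustrative; the JAX calls they wrap (`jax.grad`, `jax.jacfwd`, `jax.jvp`) are the library's own.

```python
import jax
import jax.numpy as jnp

def f(x):
    """Toy scalar function; its Hessian is diag(6 * x)."""
    return jnp.sum(x ** 3)

def hessian(fn):
    # Reverse mode (grad) produces the gradient; forward mode (jacfwd)
    # then differentiates that gradient: forward-over-reverse.
    return jax.jacfwd(jax.grad(fn))

def hvp(fn, x, v):
    # Hessian-vector product without materializing the full Hessian:
    # a forward-mode JVP pushed through the reverse-mode gradient.
    return jax.jvp(jax.grad(fn), (x,), (v,))[1]

x = jnp.array([1.0, 2.0, 3.0])
v = jnp.array([1.0, 0.0, 0.0])

H = jax.jit(hessian(f))(x)
Hv = hvp(f, x, v)
print(H)
print(Hv)
```

The Hessian-vector product form is usually the one that matters at scale, since it avoids building an n-by-n matrix.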

TensorFlow hasn't stood still while JAX gained ground. The framework that trained BERT, T5, and countless production models continues evolving its TPU story. TensorFlow's strength lies in its mature ecosystem and battle-tested distributed training strategies.

TensorFlow integrates deeply with XLA, which applies optimizations like layout transformations and kernel fusion that improve execution speed and memory usage on accelerator hardware. While JAX traces and compiles each function just in time, on its first call, TensorFlow can build static graphs that XLA optimizes holistically before execution.

This difference matters for different workloads. In one benchmark on 28×28 images, TensorFlow took 0.8985 seconds for inference compared to JAX's 0.3620 seconds, roughly a 2.5x gap. Scale up to 64×64 images and both slow down, to 2.0182 seconds for TensorFlow and 1.2692 seconds for JAX: JAX still leads, but by a narrower margin of about 1.6x. Fixed per-call costs such as graph setup and JIT compilation weigh most heavily on tiny workloads and are amortized as problem size grows.

"For distributed training across TPU pods, TensorFlow requires careful manual configuration - but this gives infrastructure engineers precise control over gradient aggregation, critical when debugging training instabilities across hundreds of cores."

- Towards Data Science, Accelerated Distributed Training

For distributed training across TPU pods, TensorFlow requires careful manual configuration. Developers writing custom training loops must explicitly reduce losses using tf.reduce_sum and scale by the global batch size, since automatic reduction is disabled in distributed mode. This manual control feels cumbersome compared to JAX's pmap, but it gives infrastructure engineers precise control over gradient aggregation - critical when debugging training instabilities across hundreds of TPU cores.
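The arithmetic behind that scaling rule can be sketched without any distribution machinery. The example below simulates four replicas with plain `jax.numpy` arrays rather than TensorFlow's distribution API; the loss values and replica count are made up, and in TensorFlow the per-replica sum would be the `tf.reduce_sum` call mentioned above.

```python
import jax.numpy as jnp

# Hypothetical setup: 8 per-example losses split evenly across 4 replicas.
per_example_loss = jnp.array([0.5, 1.5, 2.0, 1.0, 3.0, 0.0, 1.0, 3.0])
num_replicas = 4
global_batch_size = per_example_loss.shape[0]
shards = per_example_loss.reshape(num_replicas, -1)

# Each replica sums its local losses and divides by the GLOBAL batch size.
per_replica = shards.sum(axis=1) / global_batch_size

# Summing across replicas (as cross-replica aggregation does) then
# recovers the mean over the whole global batch.
correct = per_replica.sum()

# Pitfall: averaging locally on each replica and then summing
# overstates the loss by a factor of num_replicas.
wrong = shards.mean(axis=1).sum()

print(float(correct), float(wrong))
```

Because gradients scale with the loss, the same factor-of-`num_replicas` error would show up as an unintentionally larger effective learning rate.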

Raw speed tells only part of the performance story. Real-world ML engineering involves navigating trade-offs between compilation overhead, memory efficiency, and developer productivity.

JAX's trace-and-compile approach introduces measurable overhead on simple workloads. The framework must trace through your Python code to build a computation graph before XLA can optimize it. For high-throughput inference serving thousands of small requests, this overhead accumulates. Eager frameworks like PyTorch start executing immediately, giving them a head start.
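One way to see when tracing actually happens is to put a Python side effect inside a jitted function, since Python-level code runs only while JAX traces. This is a sketch for illustration; `trace_log` and `scaled_sum` are hypothetical names.

```python
import jax
import jax.numpy as jnp

trace_log = []  # Python side effect: executes only during tracing

@jax.jit
def scaled_sum(x):
    trace_log.append(x.shape)  # recorded once per trace, not once per call
    return jnp.sum(x * 2.0)

a = scaled_sum(jnp.ones(4))   # first call: trace and compile
b = scaled_sum(jnp.zeros(4))  # same shape and dtype: compiled code is reused
c = scaled_sum(jnp.ones(8))   # new shape: traced and compiled again

print(trace_log)
```

Serving workloads with many distinct input shapes pay this cost repeatedly, which is one reason padding inputs to a small set of fixed shapes is a common mitigation.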

But flip the script to training a transformer with 175 billion parameters. Now compilation happens once, and the optimized kernel runs for days. JAX's aggressive XLA usage pays dividends. Lightricks reports successfully scaling video diffusion models that would have been impractical with other frameworks.

Matrix multiplication dimensions create another performance puzzle. TPUs achieve peak efficiency with specific batch sizes and tensor shapes. Misaligned dimensions leave processing elements idle, wasting expensive accelerator time. JAX's default bfloat16 precision for matrix multiplication on TPUs offers automatic performance gains, but only if your model accommodates reduced precision.
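A rough sketch of shape alignment is shown below. It pads both operands up to a multiple of a tile size before a bfloat16 matrix multiplication with float32 accumulation. The tile width of 128 is an assumption made for illustration (the right value depends on the TPU generation), and `round_up` and `pad_to_tile` are hypothetical helpers; in practice XLA may perform similar padding on its own.

```python
import jax
import jax.numpy as jnp

TILE = 128  # assumed tile width; check the target TPU generation

def round_up(n, multiple=TILE):
    """Smallest multiple of `multiple` that is >= n."""
    return ((n + multiple - 1) // multiple) * multiple

def pad_to_tile(x):
    """Zero-pad a 2-D array so both dimensions are multiples of TILE."""
    rows, cols = x.shape
    return jnp.pad(x, ((0, round_up(rows) - rows), (0, round_up(cols) - cols)))

a = jnp.ones((100, 200), dtype=jnp.bfloat16)
b = jnp.ones((200, 50), dtype=jnp.bfloat16)

a_p, b_p = pad_to_tile(a), pad_to_tile(b)

# bfloat16 inputs, float32 output/accumulation.
out = jax.lax.dot(a_p, b_p, preferred_element_type=jnp.float32)

# Zero padding does not change the product; slice back to the real shape.
result = out[:100, :50]
print(a_p.shape, b_p.shape, result.shape, result.dtype)
```

Choosing model dimensions that are already multiples of the tile size avoids the padding, and the wasted work on zeros, altogether.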

Neither JAX nor TensorFlow exists in isolation. They've spawned ecosystems of specialized libraries that abstract common patterns and push framework capabilities.

Flax emerged as JAX's answer to Keras, providing neural network primitives while preserving functional programming principles. Researchers at DeepMind and Google Brain adopted Flax for major projects, validating its design for cutting-edge research.

Optax tackles optimization, offering a functional API for gradient transformations and learning rate schedules. Haiku, from DeepMind, brings a more familiar module-based, object-oriented interface for teams transitioning from frameworks like Sonnet or PyTorch. These libraries don't just wrap JAX - they demonstrate patterns for building maintainable ML code on TPUs.

The Pallas kernel language represents the frontier of TPU customization. When XLA's automatic optimizations fall short, Pallas lets engineers write custom kernels that map directly to TPU hardware primitives. This level of control was previously unavailable outside Google; Pallas opens hardware-level optimization to the wider research community.

More recently, vLLM added unified TPU support for both PyTorch and JAX, recognizing that production systems often need framework interoperability. You might train with JAX for its scaling advantages, then serve with PyTorch for its deployment tooling.

Academic benchmarks matter, but production deployments reveal frameworks' true capabilities. Kakao's 2.7x throughput increase came from more than just switching frameworks. Their engineering team restructured data pipelines, tuned batch sizes, and optimized checkpoint strategies - work enabled by JAX's composability.

Google itself recently unveiled Ironwood, a co-designed AI stack pairing new TPU hardware with optimized software. The system demonstrates what's possible when chip designers and framework developers collaborate from the start, rather than adapting existing software to new silicon.

Research infrastructure like the TPU Research Cloud gives academic teams access to TPU pods with production-grade frameworks. This democratization accelerates innovation - a graduate student in Seoul can experiment with techniques that previously required a tech giant's resources.

But access alone doesn't solve all problems. TPU instances on platforms like Kaggle can only read data from Google Cloud Storage, not local filesystems. These constraints force architectural decisions that ripple through entire projects. Your brilliant model means nothing if you can't feed it data at the rate TPUs demand.

So which framework should you choose? The answer frustrates engineers who want definitive guidance: it depends on your specific situation.

Starting fresh with a transformer architecture for NLP? JAX's functional approach and modern libraries like Flax offer a clean path. The learning curve is steeper than Keras, but the scalability ceiling is higher.

Migrating a mature TensorFlow codebase? Unless performance problems demand it, wholesale rewrites rarely justify their cost. TensorFlow's TPU support is mature and well-documented, particularly for computer vision models.

Building a startup where iteration speed matters more than peak performance? Consider whether you really need TPUs. GPUs offer more flexible debugging and a broader software ecosystem. TPUs excel at specific workloads - large-scale transformer training, certain vision models - but they're not universally superior.

For teams hedging bets, framework interoperability is improving. You can develop in one framework and deploy in another, though this adds operational complexity. Some organizations train JAX models then convert to ONNX for inference, gaining JAX's training advantages without committing their entire stack.

The TPU software stack isn't finished evolving. Each new TPU generation, including the seventh-generation Ironwood (TPU v7), brings capabilities that frameworks must adapt to exploit. As fleet efficiency analysis reveals bottlenecks in large-scale deployments, software optimizations target the metric that matters: goodput, the useful work extracted from expensive hardware.

Emerging paradigms like mixed-precision training require framework-level support. Simply enabling bfloat16 isn't enough - frameworks must carefully manage precision throughout the computational graph to maintain model quality while achieving speedups.
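One common pattern, sketched here under simplifying assumptions, keeps the parameters in float32, casts to bfloat16 for the matrix math, and returns to float32 for the loss reduction. The model, data, and learning rate are hypothetical; real mixed-precision setups typically involve more policy than this.

```python
import jax
import jax.numpy as jnp

def loss_fn(params_f32, x, y):
    # Cast the float32 "master" parameters down for the heavy compute.
    w = params_f32.astype(jnp.bfloat16)
    pred = jnp.dot(x.astype(jnp.bfloat16), w)
    # Do the loss reduction in float32 to limit rounding error.
    err = pred.astype(jnp.float32) - y
    return jnp.mean(err ** 2)

params = jnp.array([0.5, -1.0, 2.0], dtype=jnp.float32)
x = jnp.ones((4, 3), dtype=jnp.float32)
y = jnp.zeros((4,), dtype=jnp.float32)

loss, grads = jax.value_and_grad(loss_fn)(params, x, y)

# Gradients come back in the parameters' dtype, so the update stays float32.
new_params = params - 0.1 * grads
print(loss, grads.dtype, new_params.dtype)
```

Keeping the stored parameters in float32 means small updates are not rounded away, even though the forward and backward matrix products ran at reduced precision.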

The convergence of TPU architectures with inference-optimized designs like Ironwood suggests frameworks will increasingly specialize for training versus serving. Today's one-size-fits-all approach may give way to paired frameworks optimized for each phase of the ML lifecycle.

Behind the technical specifications and benchmark numbers, framework choice profoundly affects research culture. JAX's functional style encourages reproducibility - pure functions with explicit randomness produce identical results given the same inputs. This might seem like a minor convenience, but debugging distributed training becomes vastly simpler when you can reliably reproduce failures.
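The explicit-randomness point can be illustrated with JAX's key-based random number API. In this sketch, `init_weights` is a hypothetical helper; the seed value is arbitrary.

```python
import jax
import jax.numpy as jnp

def init_weights(key, shape):
    """Pure function: the output depends only on the key and the shape."""
    return jax.random.normal(key, shape)

key = jax.random.PRNGKey(42)
key_a, key_b = jax.random.split(key)  # derive independent subkeys

w1 = init_weights(key_a, (2, 3))
w2 = init_weights(key_a, (2, 3))  # same key: bit-for-bit identical values
w3 = init_weights(key_b, (2, 3))  # different subkey: different values

print(bool(jnp.array_equal(w1, w2)), bool(jnp.array_equal(w1, w3)))
```

There is no hidden global generator to get out of sync, so replaying a failure amounts to replaying the same keys.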

TensorFlow's vast community means abundant Stack Overflow answers and tutorials. For junior engineers learning ML, this support network matters as much as framework features. The best framework is the one your team can actually use effectively.

Research velocity - the rate at which you can test hypotheses - often dominates raw performance. If JAX's learning curve costs your team two weeks, but saves 20% training time, you need to train many models before that investment pays off. Make these calculations explicitly rather than defaulting to whatever seems most modern.
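That calculation is simple enough to write down. The sketch below counts how many training runs it takes for the time saved to cover the ramp-up cost; the two-week ramp-up and 20% saving come from the paragraph above, while the three-day and three-week run lengths are assumed values for illustration.

```python
import math

def break_even_runs(ramp_up_days, baseline_run_days, speedup_fraction):
    """Number of training runs needed before saved time covers ramp-up time."""
    saved_per_run = baseline_run_days * speedup_fraction
    return math.ceil(ramp_up_days / saved_per_run)

# Two weeks of learning curve, 20% faster training.
short_runs = break_even_runs(ramp_up_days=14, baseline_run_days=3, speedup_fraction=0.20)
long_runs = break_even_runs(ramp_up_days=14, baseline_run_days=21, speedup_fraction=0.20)

print(short_runs)  # 24 runs when each baseline run takes 3 days
print(long_runs)   # 4 runs when each baseline run takes 3 weeks
```

The break-even point moves sharply with run length, so teams training a few long jobs recoup the investment far sooner than teams running many short experiments.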

TPUs represent extraordinary engineering, packing trillions of operations per second into chips that sip power compared to GPU alternatives. But that potential sits dormant without software frameworks that can communicate effectively with the silicon. JAX and TensorFlow serve as amplifiers, transforming hardware capability into research breakthroughs and production systems.

The divergence between these frameworks reflects competing philosophies about how humans and machines should collaborate. JAX bets on functional purity and composability, trusting that simple primitives combine to handle complexity. TensorFlow emphasizes comprehensive tooling and backward compatibility, prioritizing the needs of production systems serving billions of users.

Neither approach is objectively superior. The future likely holds room for both, along with new frameworks we can't yet imagine. As AI models approach and exceed human performance on ever-broader tasks, the software stacks enabling these achievements will evolve in lock-step.

For ML engineers today, understanding these frameworks means understanding more than syntax and APIs. It means grasping how software architecture shapes what's computationally feasible, and therefore what research questions we can ask. The choice of framework isn't just technical - it's strategic, determining which futures we can build and how quickly we arrive there.

Rotating detonation engines use continuous supersonic explosions to achieve 25% better fuel efficiency than conventional rockets. NASA, the Air Force, and private companies are now testing this breakthrough technology in flight, promising to dramatically reduce space launch costs and enable more ambitious missions.

Triclosan, found in many antibacterial products, is reactivated by gut bacteria and triggers inflammation, contributes to antibiotic resistance, and disrupts hormonal systems - but plain soap and water work just as effectively without the harm.

AI-powered cameras and LED systems are revolutionizing sea turtle conservation by enabling fishing nets to detect and release endangered species in real-time, achieving up to 90% bycatch reduction while maintaining profitable shrimp operations through technology that balances environmental protection with economic viability.

The pratfall effect shows that highly competent people become more likable after making small mistakes, but only if they've already proven their capability. Understanding when vulnerability helps versus hurts can transform how we connect with others.

Leafcutter ants have practiced sustainable agriculture for 50 million years, cultivating fungus crops through specialized worker castes, sophisticated waste management, and mutualistic relationships that offer lessons for human farming systems facing climate challenges.

Gig economy platforms systematically manipulate wage calculations through algorithmic time rounding, silently transferring billions from workers to corporations. While outdated labor laws permit this, European regulations and worker-led audits offer hope for transparency and fair compensation.

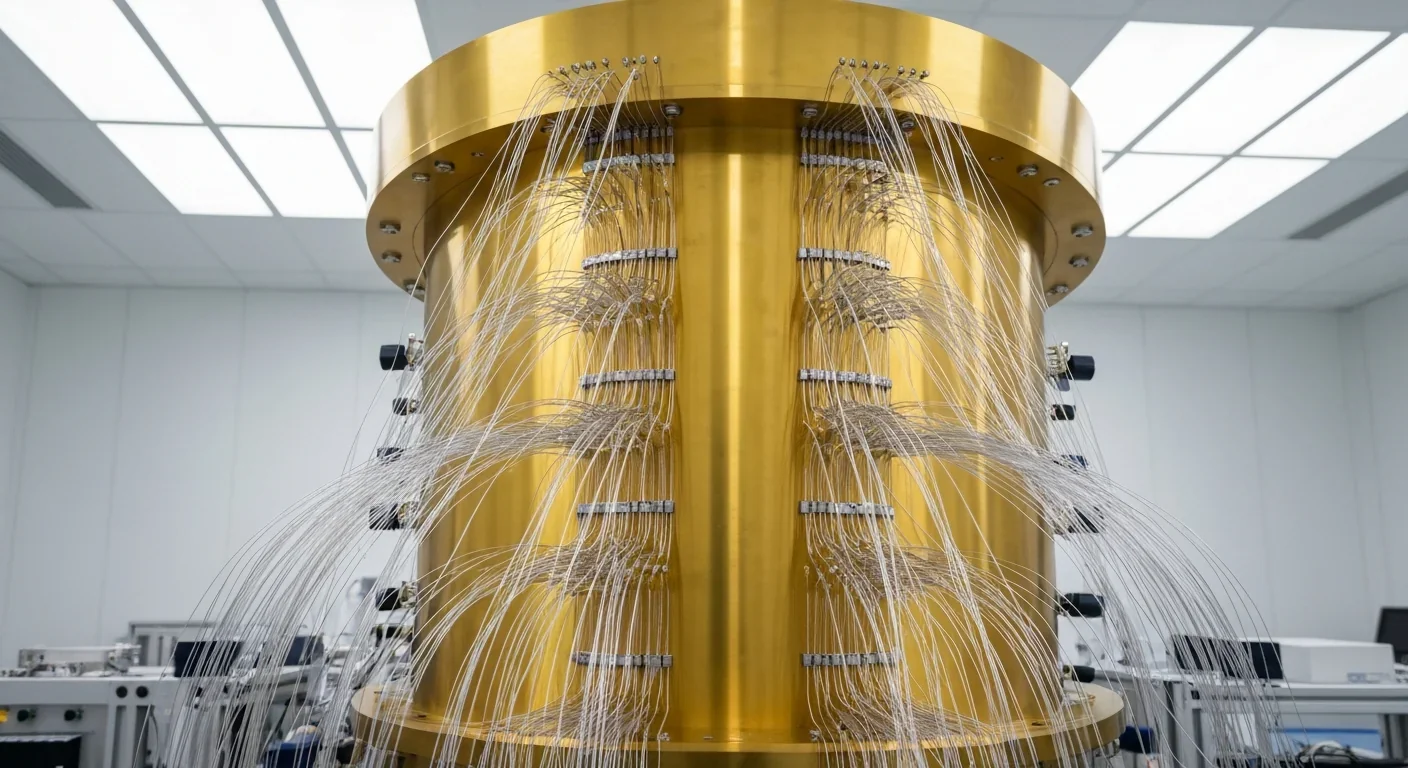

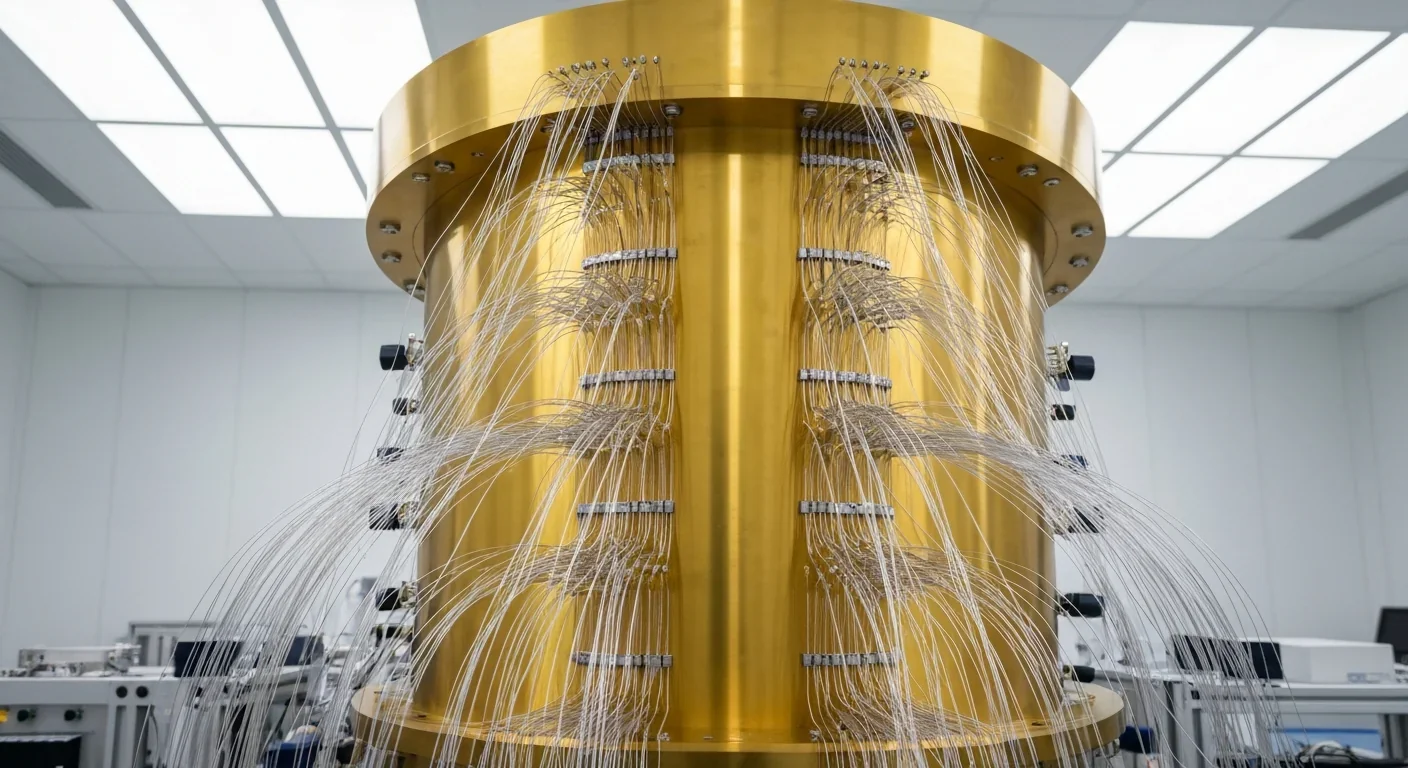
