Why Quantum Computers Need Cryogenic Control Electronics

TL;DR: TPU training costs extend far beyond hourly rates. Hidden expenses in power, cooling, networking, software migration, and specialized talent can flip the economics. At hyperscale with large-batch workloads, TPUs deliver compelling savings. Below that threshold, GPU flexibility often wins despite higher sticker prices.

When Midjourney announced it had slashed monthly compute costs from $2 million to $700,000 by switching to Google's Tensor Processing Units, the AI infrastructure world took notice. That's a 65% reduction in operating expenses for running one of the world's most popular generative AI platforms. But here's what nobody tells you: the sticker price of TPU training is just the opening bid in a much more complicated economic game.

The real question isn't whether TPUs cost less per hour than NVIDIA's flagship H100 GPUs. It's whether the total cost of ownership - including power infrastructure, cooling systems, engineering talent, software licensing, and the invisible tax of vendor lock-in - actually delivers on Google's promise of 4x better performance per dollar. For organizations betting hundreds of millions on AI infrastructure, getting this calculation wrong doesn't just hurt the budget. It can determine whether your AI ambitions succeed or stall.

Walk into any conversation about AI accelerators and you'll hear the same refrain: TPUs offer better economics than GPUs. Google's pricing seems to back this up. Cloud TPU v6e starts at $1.375 per hour on-demand, dropping to $0.55 per hour with three-year commitments. Compare that to NVIDIA H100 instances at $2.99 to $7.98 per hour, and the math looks compelling.

But chip pricing is only part of the story. According to enterprise case studies presented at major tech conferences, initial hardware costs represent only 30-40% of total ownership costs over a three-year lifecycle. The remaining 60-70% hides in plain sight across your infrastructure stack: power, cooling, networking, software, and engineering expertise.

Start with memory architecture. TPU v6e delivers 32GB of high-bandwidth memory per chip, while NVIDIA's H100 packs 80GB. Training a 70-billion parameter model on TPUs requires eight units - 256GB total - at a quoted $21.60 per hour for the pod. That works out to $2.70 per chip-hour, well above the $1.375 starting rate cited earlier, so the two figures are presumably drawn from different regions or pricing tiers. The H100's larger memory capacity means you need fewer chips to fit the same model in VRAM, often cutting unit requirements in half: the same 256GB fits on four 80GB H100s. Suddenly that per-hour advantage starts evaporating when you calculate how many chips you actually need.
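The chip-count argument can be checked with a few lines of arithmetic. The sketch below is illustrative only: it takes the 256GB footprint and per-chip memory sizes from the paragraph above, uses the $1.375 TPU v6e and $2.99-$7.98 H100 hourly rates quoted earlier, and ignores everything except memory capacity (function names are hypothetical).

```python
import math

def chips_needed(footprint_gb: float, hbm_per_chip_gb: float) -> int:
    """Smallest chip count whose combined memory covers the footprint."""
    return math.ceil(footprint_gb / hbm_per_chip_gb)

def hourly_cost(chips: int, usd_per_chip_hour: float) -> float:
    """Cluster cost per hour at a flat per-chip rate."""
    return chips * usd_per_chip_hour

FOOTPRINT_GB = 256  # eight 32GB TPU v6e chips, per the text

tpu_chips = chips_needed(FOOTPRINT_GB, 32)  # 8
gpu_chips = chips_needed(FOOTPRINT_GB, 80)  # 4

tpu_hourly = hourly_cost(tpu_chips, 1.375)       # on-demand v6e starting rate
gpu_hourly_low = hourly_cost(gpu_chips, 2.99)    # cheapest quoted H100 rate
gpu_hourly_high = hourly_cost(gpu_chips, 7.98)   # most expensive quoted H100 rate

print(f"TPU v6e: {tpu_chips} chips, ${tpu_hourly:.2f}/hour")
print(f"H100:    {gpu_chips} chips, ${gpu_hourly_low:.2f}-${gpu_hourly_high:.2f}/hour")
```

At the lowest quoted rates the two clusters land within about a dollar per hour of each other, which is the point of the paragraph: per-chip price alone says little until you count chips.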

Then there's the networking tax. TPU Pods achieve inter-chip bandwidth of 13 terabytes per second across 256 chips delivering 235 petaflops of compute. That's phenomenal performance, but it requires Google's proprietary interconnect fabric. Building equivalent infrastructure on-premise means not just buying TPU chips but engineering an entire custom network topology. For GPU clusters, you're looking at InfiniBand networking at $2,000-$5,000 per node, plus switches ranging from $20,000-$100,000 depending on port count and speed. Either way, high-speed interconnects add up fast.

Here's where things get interesting. TPUs consume significantly less power than comparable GPUs - up to 60-65% less energy for equivalent workloads. TPU v4 achieves 275 TFLOPS at 170 watts thermal design power, yielding 1.62 TFLOPS per watt. Compare that to each H100 GPU pulling up to 700 watts under load, and the energy efficiency advantage seems decisive.

But raw chip power consumption doesn't tell the whole infrastructure story. Google's data centers maintain a Power Usage Effectiveness of 1.1 versus the industry average of 1.58. That means for every watt of compute power, Google's facilities waste only 0.1 watts on cooling and distribution, while typical data centers waste 0.58 watts. When you're running thousands of accelerators, that differential compounds into millions in annual energy costs.
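To see how the PUE gap compounds, here is a rough sketch. The two PUE values come from the text; the 1 MW accelerator load and the $0.10 per kWh tariff are hypothetical placeholders chosen only to make the arithmetic concrete.

```python
HOURS_PER_YEAR = 8760

def annual_energy_cost(it_load_kw: float, pue: float, usd_per_kwh: float) -> float:
    """Yearly facility energy bill: IT load scaled up by PUE, times the tariff."""
    return it_load_kw * pue * HOURS_PER_YEAR * usd_per_kwh

IT_LOAD_KW = 1000    # hypothetical: 1 MW of accelerator load
USD_PER_KWH = 0.10   # hypothetical electricity tariff

efficient = annual_energy_cost(IT_LOAD_KW, 1.10, USD_PER_KWH)  # PUE cited for Google
typical = annual_energy_cost(IT_LOAD_KW, 1.58, USD_PER_KWH)    # industry average cited
overhead_gap = typical - efficient

print(f"PUE 1.10: ${efficient:,.0f} per year")
print(f"PUE 1.58: ${typical:,.0f} per year")
print(f"Gap:      ${overhead_gap:,.0f} per year per MW of IT load")
```

Under these assumed inputs the gap is a bit over $400,000 per year for each megawatt of compute, so a multi-megawatt deployment reaches the "millions" range quickly.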

If you're using cloud TPUs, Google's superior PUE is baked into your hourly rate - you benefit without thinking about it. But if you're deploying on-premise or using GPU infrastructure, you're paying for that cooling inefficiency directly. Dense GPU clusters generate tremendous heat, requiring specialized cooling solutions costing $15,000-$100,000 depending on scale. Water-cooling infrastructure or enhanced HVAC systems aren't optional extras; they're mandatory investments to keep silicon from melting.

According to enterprise case studies from GTC 2025, power and cooling represent 25-35% of total costs over a three-year lifecycle. For a cluster consuming megawatts of power, that share translates to eye-watering dollar amounts. A facility upgrade to support dedicated power distribution units for a multi-GPU cluster can add $10,000-$50,000 before you even rack the first chip.

TPUs run fast, but they don't run everything. Google designed these chips specifically for TensorFlow, JAX, and the XLA compiler framework. This specialization delivers exceptional performance for large-batch tensor operations but comes with significant software constraints. GPUs support a broader ecosystem including CUDA, PyTorch, vLLM, and essentially every major machine learning framework humanity has invented.

What does framework lock-in actually cost? For organizations with existing GPU-based training pipelines, migration requires adapting code from CUDA/PyTorch to TensorFlow/JAX, typically taking 2-6 months of calendar time for large deployments and 4-8 full-time engineer-months of effort for major migrations. If your ML engineers earn $200,000-$300,000 annually, that effort costs roughly $67,000-$200,000 in salary alone, or about $130,000-$400,000 if you assume a fully loaded cost of roughly twice salary, before you train a single model.
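The migration estimate is simple enough to reproduce. In this sketch the engineer-months and salary range come from the text; the overhead multiplier of 2.0 is an assumption, introduced here because it is what makes the result line up with the $130,000-$400,000 range.

```python
def engineering_cost(engineer_months: float, annual_salary: float,
                     overhead_multiplier: float = 1.0) -> float:
    """Cost of an effort measured in engineer-months at a given annual salary."""
    return engineer_months * annual_salary / 12 * overhead_multiplier

# Salary only
salary_low = engineering_cost(4, 200_000)
salary_high = engineering_cost(8, 300_000)

# Assumed fully loaded cost: 2x salary (benefits, equipment, management overhead)
loaded_low = engineering_cost(4, 200_000, overhead_multiplier=2.0)
loaded_high = engineering_cost(8, 300_000, overhead_multiplier=2.0)

print(f"Salary only:  ${salary_low:,.0f} - ${salary_high:,.0f}")
print(f"Fully loaded: ${loaded_low:,.0f} - ${loaded_high:,.0f}")
```

Neither figure includes the compute spent re-validating models on the new platform, so treat both as lower bounds.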

But the opportunity cost runs deeper. TPUs struggle with models using dynamic shapes, custom operations, or advanced debugging workflows. The GPU ecosystem's maturity means better tooling, more extensive documentation, and a larger talent pool. When your training job fails at 80% completion because of a framework incompatibility, the real cost is measured in wasted compute hours and delayed product launches.

Some organizations have found ways around these limitations. According to DeepMind research cited in migration case studies, teams that fully embraced the TPU software stack reduced model development time by 50% compared to CUDA workflows. But that requires building institutional expertise from scratch - hiring engineers who know JAX/XLA or retraining your existing team. Either path carries substantial costs.

So when do TPUs actually make financial sense? The answer depends heavily on your scale, workload characteristics, and time horizon.

For small research teams or startups training models occasionally, cloud GPUs offer lower barriers to entry. Consider a Llama 70B training job that occupies eight H100 GPUs for 4-6 weeks at $2.99 per hour per GPU. (That is a fine-tuning-scale budget; pretraining a 70B model from scratch takes orders of magnitude more GPU-hours.) Total cloud cost for the median five-week run: approximately $20,093. You can spin up, train, and shut down without any capital investment in hardware, networking, or facilities.
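The cloud figure follows directly from GPU count, hourly rate, and duration. A minimal sketch using the numbers above (the function name is illustrative):

```python
def cloud_run_cost(gpus: int, usd_per_gpu_hour: float, weeks: float) -> float:
    """On-demand cost of keeping `gpus` busy around the clock for `weeks`."""
    hours = weeks * 7 * 24
    return gpus * usd_per_gpu_hour * hours

for weeks in (4, 5, 6):
    print(f"{weeks} weeks: ${cloud_run_cost(8, 2.99, weeks):,.0f}")
```

The five-week case reproduces the roughly $20,093 figure; the four- and six-week cases bracket it at about $16,000 and $24,000.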

The calculus shifts at purchase scale. Eight H100 GPUs cost roughly $200,000, plus another $50,000 in infrastructure - networking, power, cooling, racks. Total capital outlay: $250,000. Against the $2.99 per GPU-hour cloud rate, break-even occurs around 10,450 hours of eight-GPU cluster time (about 83,600 GPU-hours), which is roughly 62 weeks of continuous operation, before counting electricity and staffing. If you're training multiple large models per quarter and expect to keep the cluster busy for well over a year, ownership starts making economic sense.
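The break-even point is worth working through explicitly, because the units are easy to mix up. This sketch uses only the article's inputs ($250,000 of capital, eight GPUs, $2.99 per GPU-hour in the cloud) and deliberately ignores electricity, staffing, and depreciation, all of which push break-even later.

```python
CAPEX_USD = 200_000 + 50_000   # GPUs plus supporting infrastructure
GPUS = 8
CLOUD_USD_PER_GPU_HOUR = 2.99

cluster_hourly = GPUS * CLOUD_USD_PER_GPU_HOUR        # cloud cost of the same cluster
breakeven_cluster_hours = CAPEX_USD / cluster_hourly  # wall-clock hours of full use
breakeven_gpu_hours = breakeven_cluster_hours * GPUS
breakeven_weeks = breakeven_cluster_hours / (7 * 24)

print(f"Cluster-hours: {breakeven_cluster_hours:,.0f}")
print(f"GPU-hours:     {breakeven_gpu_hours:,.0f}")
print(f"Weeks at 100% utilization: {breakeven_weeks:.1f}")
```

With these inputs the figure near 10,450 is in cluster-hours, which corresponds to about 83,600 GPU-hours and a little over 62 weeks of continuous use.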


TPUs follow a different economic curve. Because they're primarily accessed via Google Cloud as managed instances, there's no on-premise option for most organizations. You're locked into pay-as-you-go pricing, committed use discounts for longer terms, or volume discounts at truly massive scale. Google's PaLM model training used 6,144 TPU v4 chips, while Gemini Ultra trains on tens of thousands of TPUs. At that scale, the cost advantages become substantial - but you need Google-scale ambitions to access Google-scale economics.

According to analysis of TPU v7 economics, Google's per-chip total cost of ownership is approximately 44% lower than GB200 (NVIDIA's latest architecture) when procuring chips through Broadcom. But that TCO advantage applies to Google's internal deployments. For external customers accessing TPUs through Google Cloud Platform, the pricing includes Google's margin. The analysis estimates Anthropic pays approximately $1.60 per TPU-hour from GCP, achieving roughly 40% model FLOP utilization and delivering about 52% lower TCO per effective petaFLOP compared to GB300 NVL72.
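A metric like "cost per effective petaFLOP" combines three inputs: hourly price, peak throughput, and model FLOP utilization (MFU). The sketch below shows the shape of that calculation only. The $1.60 price and 40% MFU come from the text; the peak throughput value is a placeholder, not a real chip specification, and the function name is hypothetical.

```python
def usd_per_effective_pflop_hour(usd_per_chip_hour: float,
                                 peak_pflops: float,
                                 mfu: float) -> float:
    """Hourly price divided by the throughput a training job actually achieves."""
    if not 0 < mfu <= 1:
        raise ValueError("mfu must be in (0, 1]")
    return usd_per_chip_hour / (peak_pflops * mfu)

PLACEHOLDER_PEAK_PFLOPS = 1.0  # placeholder only; substitute the vendor's published figure

cost = usd_per_effective_pflop_hour(1.60, PLACEHOLDER_PEAK_PFLOPS, 0.40)
print(f"${cost:.2f} per effective PFLOP-hour (with placeholder peak)")
```

The useful property of this metric is that a cheaper chip with poor utilization can lose to a pricier chip that is kept busy, which is why the quoted comparison reports utilization alongside price.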

Those numbers matter because they reveal the scale threshold. If you're Anthropic, Google, or another hyperscaler training foundation models measured in hundreds of billions of parameters, the TCO advantage is real and substantial. If you're training smaller models or running diverse workloads requiring framework flexibility, the economics reverse.

Midjourney's migration from GPUs to TPUs produced dramatic cost savings: from $2 million monthly to $700,000. That's the headline everyone cites. But Midjourney runs inference workloads serving millions of image generation requests. Their use case - large batch sizes, consistent tensor operations, inference-optimized workflows - plays perfectly to TPU strengths.

Contrast that with organizations running mixed workloads. Conference floor insights from GTC 2025 suggested that more than 70% of large enterprises will use both GPUs and TPUs by 2026, precisely because different accelerators excel at different tasks. TPUs deliver superior performance for large-batch transformer training. GPUs dominate small or variable batch workloads, computer vision tasks, and scenarios requiring rich debugging tools.

The hidden pattern? Organizations achieving the best economics run hybrid deployments, matching workload characteristics to accelerator strengths. Training happens on TPUs for maximum efficiency. Fine-tuning, experimentation, and inference serving with variable request patterns happen on GPUs for maximum flexibility. But now you're managing two different infrastructure stacks, two sets of software dependencies, and two pools of specialized engineering talent. Those operational overheads don't show up in vendor pricing sheets.

According to enterprise case studies presented at tech events in 2025, hidden migration costs are routinely underestimated by 3-5x when switching between platforms. Moving large projects between GPU and TPU environments can cost $500,000-$2 million+ when you account for engineering time, performance optimization, workflow retooling, and inevitable debugging marathons.

TPUs are only available through Google Cloud or Colab. You won't find them in personal computers, on-premise data centers, or other cloud providers. This creates strategic dependencies that extend beyond immediate costs.

What happens if Google raises prices? What if your regulatory environment requires data residency that Google can't accommodate? What if you've built your entire training pipeline on TPUs and need to migrate to another cloud for business reasons? The specialization advantage that makes TPUs powerful also creates switching costs measured in millions of dollars and months of engineering time.

GPU infrastructure, while more expensive initially, offers deployment flexibility. You can run NVIDIA chips on-premise, in any major cloud provider, or in hybrid configurations. PyTorch code written for AWS GPUs runs on Azure GPUs with minimal modification. The skills your engineers develop transfer across environments. That optionality has economic value, even if it's harder to quantify than hourly instance pricing.

Some organizations view this as insurance against uncertainty. Others see it as paying a premium for flexibility you may never need. The right answer depends on your risk tolerance, scale trajectory, and strategic commitments. But it's a cost consideration that belongs in any honest TCO analysis.

Training large language models on TPUs requires specialized engineering expertise. Teams migrating from GPUs often need significant code refactoring and new debugging practices. The talent market reflects this specialization. ML engineers with deep TPU/JAX expertise command premium salaries and remain harder to hire than their CUDA/PyTorch counterparts simply because the pool is smaller.

This manifests in several ways. Recruiting timelines extend when you need TPU specialists. Training programs for existing staff take longer because the ecosystem documentation, while improving, remains less mature than CUDA's two decades of accumulated knowledge. When production issues arise at 3 AM, you need engineers who understand XLA's compiler optimizations and TPU Pod interconnect topologies - not skills you find on every resume.

Some organizations solve this by partnering with Google's professional services team. Others build internal centers of excellence. Either approach requires investment beyond hardware costs. Whether it's external consulting fees or internal training programs, the talent dimension adds real expenses that compound over time.

For organizations committed to TPU infrastructure, several strategies can dramatically improve economics:

Preemptible TPUs reduce costs by up to 70% for fault-tolerant workloads. If your training jobs support checkpointing and can tolerate interruptions, preemptible instances offer aggressive savings. The trade-off is operational complexity - you need infrastructure that automatically restarts interrupted jobs and manages checkpoint frequency to avoid wasted work.
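One common starting point for choosing checkpoint frequency is Young's approximation, which balances time spent writing checkpoints against work lost on interruption. It is a general rule of thumb, not something specific to TPUs or described by the sources above, and the five-minute checkpoint time and six-hour mean time between preemptions below are hypothetical inputs.

```python
import math

def young_checkpoint_interval(checkpoint_seconds: float,
                              mean_seconds_between_preemptions: float) -> float:
    """Young's approximation: interval = sqrt(2 * checkpoint cost * mean time between failures)."""
    return math.sqrt(2 * checkpoint_seconds * mean_seconds_between_preemptions)

CHECKPOINT_SECONDS = 5 * 60          # hypothetical: 5 minutes to write a checkpoint
MEAN_BETWEEN_PREEMPTIONS = 6 * 3600  # hypothetical: preempted every 6 hours on average

interval = young_checkpoint_interval(CHECKPOINT_SECONDS, MEAN_BETWEEN_PREEMPTIONS)
print(f"Checkpoint roughly every {interval / 60:.0f} minutes")
```

With these inputs the suggested interval is about an hour. Measure your own preemption rate before relying on it, since the formula assumes interruptions are much rarer than checkpoints.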


Committed use discounts drop TPU v6e pricing from $1.375 per hour to $0.55 per hour with three-year commitments. That's a 60% reduction, but it requires accurate capacity planning. Overcommit and you're paying for unused resources. Undercommit and you're back to on-demand pricing for overflow workloads.
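The capacity-planning trade-off reduces to a break-even utilization: a committed chip is billed every hour, while an on-demand chip is billed only when used. A minimal sketch using the two v6e rates above (function names are illustrative):

```python
ON_DEMAND_USD = 1.375  # per chip-hour
COMMITTED_USD = 0.55   # per chip-hour, three-year commitment

def breakeven_utilization(committed_rate: float = COMMITTED_USD,
                          on_demand_rate: float = ON_DEMAND_USD) -> float:
    """Fraction of hours a chip must be busy for the commitment to pay off."""
    return committed_rate / on_demand_rate

def cheaper_option(expected_utilization: float,
                   committed_rate: float = COMMITTED_USD,
                   on_demand_rate: float = ON_DEMAND_USD) -> str:
    """Compare always-billed committed pricing with usage-billed on-demand pricing."""
    on_demand_cost = expected_utilization * on_demand_rate
    return "committed" if committed_rate < on_demand_cost else "on_demand"

print(f"Break-even utilization: {breakeven_utilization():.0%}")
print(cheaper_option(0.30))  # on_demand
print(cheaper_option(0.80))  # committed
```

At these rates the commitment wins once a chip is busy more than 40% of the time, sustained over the full three-year term.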

Batch size optimization matters more on TPUs than GPUs. TPUs excel with large batches; GPUs handle small or variable batches better. Organizations that carefully tune batch sizes to maximize TPU utilization can achieve 2-3x better performance per dollar than naive migrations.

The most sophisticated optimization strategy? Dynamic workload allocation. Train foundation models on TPU Pods during off-peak hours when preemptible capacity is plentiful. Run fine-tuning and experimentation on GPUs where framework flexibility enables faster iteration. Reserve high-cost on-demand TPU capacity for time-sensitive production training runs. This requires orchestration sophistication, but the economics can be compelling.
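As a rough illustration of that allocation policy, the rules can be written as a small routing function. Everything here is hypothetical: the workload categories, pool names, and rule ordering are one possible reading of the strategy described above, not a real scheduler API.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    kind: str             # e.g. "pretraining", "fine_tuning", "experiment"
    fault_tolerant: bool  # supports checkpoint and restart
    time_sensitive: bool  # has a hard delivery deadline

def choose_pool(w: Workload) -> str:
    """Route a workload to a capacity pool using the article's rules of thumb."""
    if w.kind in {"fine_tuning", "experiment"}:
        return "gpu"                 # framework flexibility, faster iteration
    if w.time_sensitive:
        return "tpu_on_demand"       # pay more to avoid interruptions
    if w.fault_tolerant:
        return "tpu_preemptible"     # cheapest capacity for restartable jobs
    return "tpu_on_demand"           # cannot tolerate preemption

jobs = [
    Workload("pretraining", fault_tolerant=True, time_sensitive=False),
    Workload("pretraining", fault_tolerant=True, time_sensitive=True),
    Workload("fine_tuning", fault_tolerant=True, time_sensitive=False),
]
for job in jobs:
    print(job.kind, "->", choose_pool(job))
```

A production scheduler would also weigh queue depth, current preemption rates, and data locality, which this sketch leaves out.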

The competitive dynamics between Google's TPUs and NVIDIA's GPUs continue evolving. Google now offers NVIDIA Blackwell alongside its Trillium TPUs, blurring the competitive lines. Behind-the-scenes partnerships between traditional competitors suggest the market is moving toward heterogeneous deployment models rather than winner-take-all scenarios.

TPU performance improvements follow a steady cadence. The evolution from TPU v1 delivering 0.31 TFLOPS per watt to v4's 1.62 TFLOPS per watt shows Google's sustained focus on energy efficiency. Each generation pushes performance higher while holding power consumption steady or reducing it. That trajectory suggests ongoing improvements in performance per dollar, especially for inference workloads where energy efficiency compounds across millions of requests.

Meanwhile, NVIDIA's roadmap promises continued performance scaling. The GPU-vs-TPU debate isn't resolving toward a single answer because different accelerators solve different problems. The real trend? Increasingly sophisticated workload matching, with organizations deploying the economically optimal accelerator for each distinct use case.

So should you bet on TPUs for your AI infrastructure? Start by honestly answering these questions:

What's your primary workload? If you're training large transformer models with consistent batch sizes and can commit to TensorFlow/JAX frameworks, TPUs offer compelling economics. If you're running diverse workloads, need framework flexibility, or require extensive debugging capabilities, GPUs provide better total value despite higher per-hour costs.

What's your scale? At small to medium scale - training occasional models, experimenting with architectures, serving modest inference traffic - cloud GPUs offer lower barriers and greater flexibility. At hyperscale - training foundation models, serving billions of inference requests monthly - TPU economics become increasingly attractive.

What's your team's expertise? If you have JAX/TensorFlow experts in-house or can recruit them, the learning curve is manageable. If your team lives in PyTorch land and switching frameworks would require extensive retraining, the migration costs may overwhelm the hardware savings.

What's your risk tolerance for vendor lock-in? If being tied to Google Cloud Platform doesn't concern you strategically, TPUs offer a clear path to cost optimization. If you need multi-cloud optionality or on-premise deployment capabilities, GPUs provide necessary flexibility.

The honest answer for most organizations? It's not either/or. The most economically efficient AI infrastructure uses TPUs where they excel - large-batch training and high-throughput inference - while leveraging GPUs for workloads requiring flexibility, diverse framework support, and rapid iteration. The hidden cost of TPU training isn't just in the infrastructure, software, and talent investments. It's in the opportunity cost of choosing wrong for your specific circumstances.

What the price tag doesn't tell you is that there's no universal answer. The right infrastructure decision depends on matching your workload characteristics, scale trajectory, team capabilities, and strategic priorities to the strengths and limitations of each accelerator type. Getting that match right determines whether you're optimizing costs or just shifting them around.

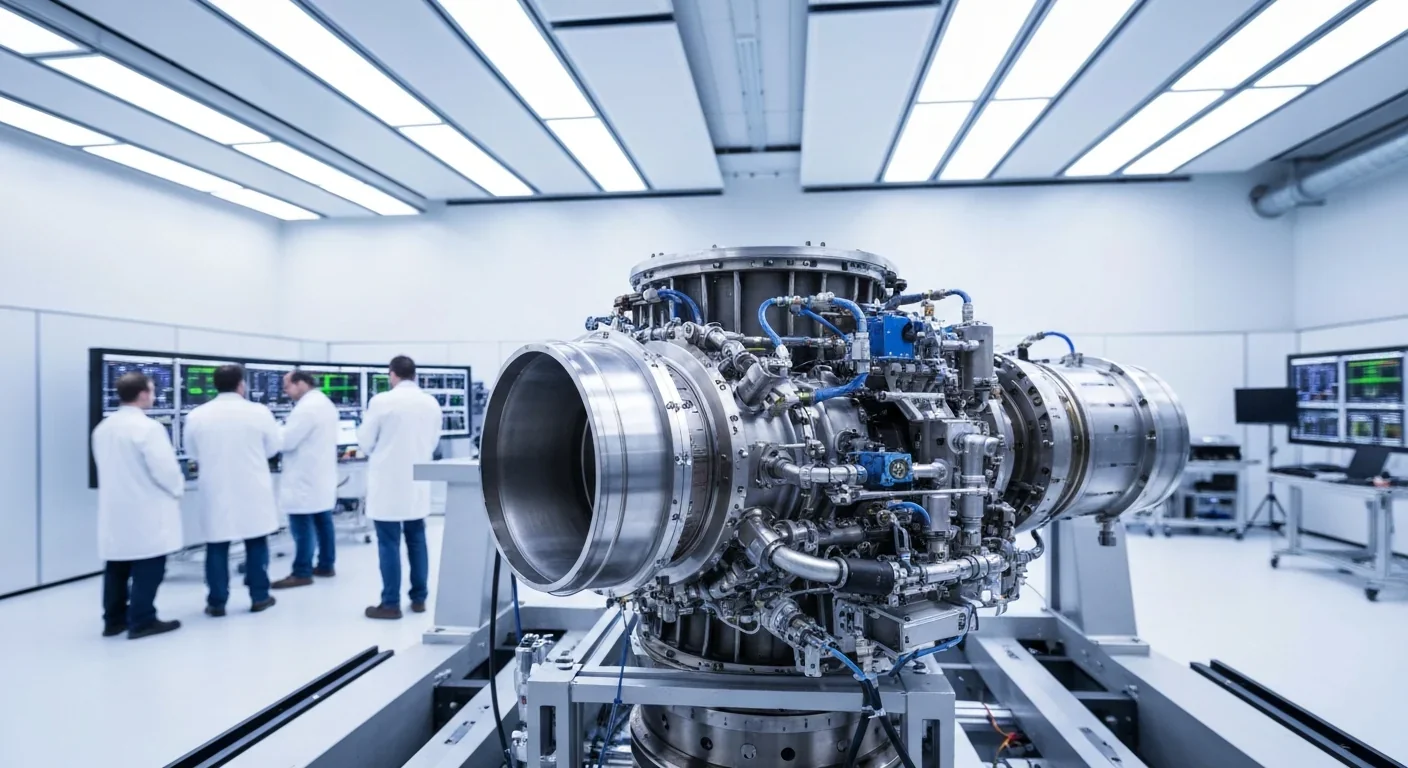

Rotating detonation engines use continuous supersonic explosions to achieve 25% better fuel efficiency than conventional rockets. NASA, the Air Force, and private companies are now testing this breakthrough technology in flight, promising to dramatically reduce space launch costs and enable more ambitious missions.

Triclosan, found in many antibacterial products, is reactivated by gut bacteria and triggers inflammation, contributes to antibiotic resistance, and disrupts hormonal systems - but plain soap and water work just as effectively without the harm.

AI-powered cameras and LED systems are revolutionizing sea turtle conservation by enabling fishing nets to detect and release endangered species in real-time, achieving up to 90% bycatch reduction while maintaining profitable shrimp operations through technology that balances environmental protection with economic viability.

The pratfall effect shows that highly competent people become more likable after making small mistakes, but only if they've already proven their capability. Understanding when vulnerability helps versus hurts can transform how we connect with others.

Leafcutter ants have practiced sustainable agriculture for 50 million years, cultivating fungus crops through specialized worker castes, sophisticated waste management, and mutualistic relationships that offer lessons for human farming systems facing climate challenges.

Gig economy platforms systematically manipulate wage calculations through algorithmic time rounding, silently transferring billions from workers to corporations. While outdated labor laws permit this, European regulations and worker-led audits offer hope for transparency and fair compensation.

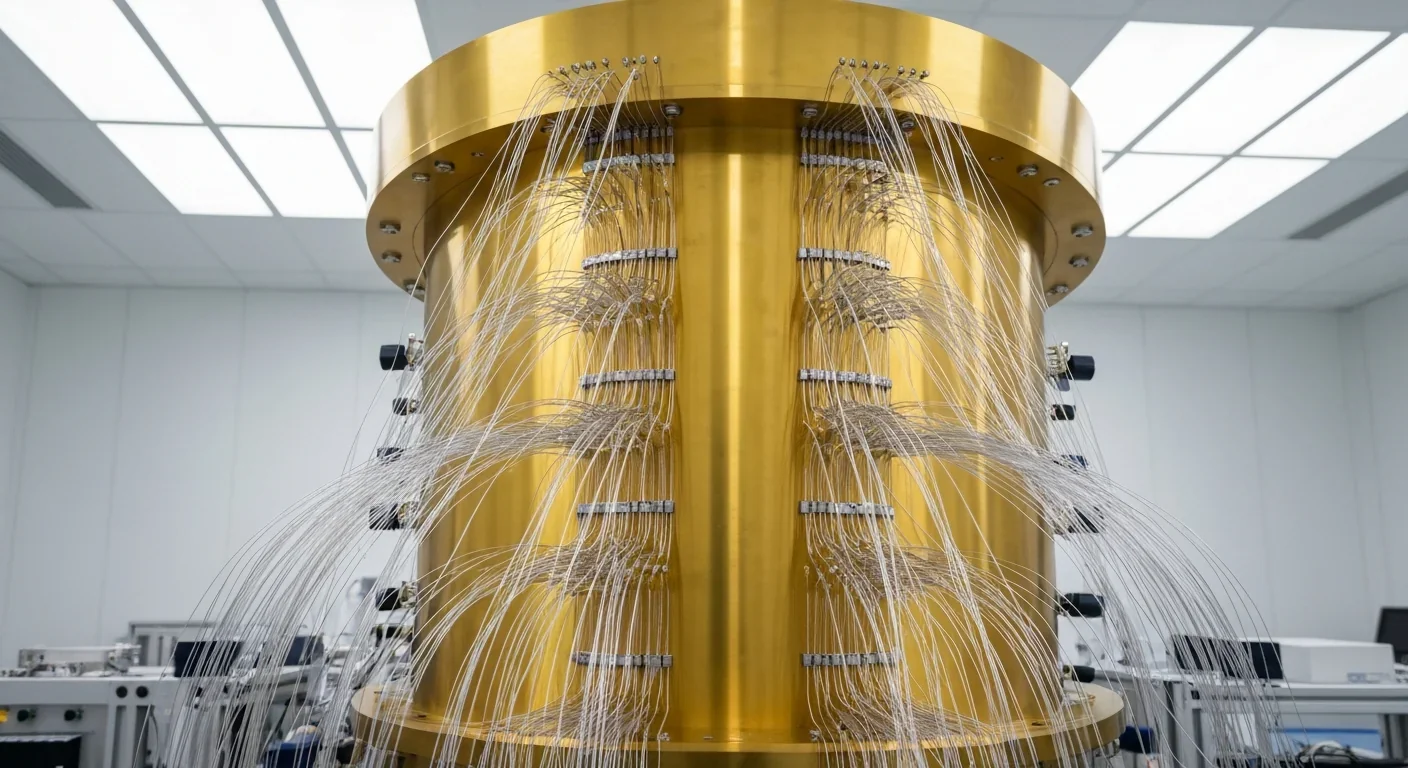

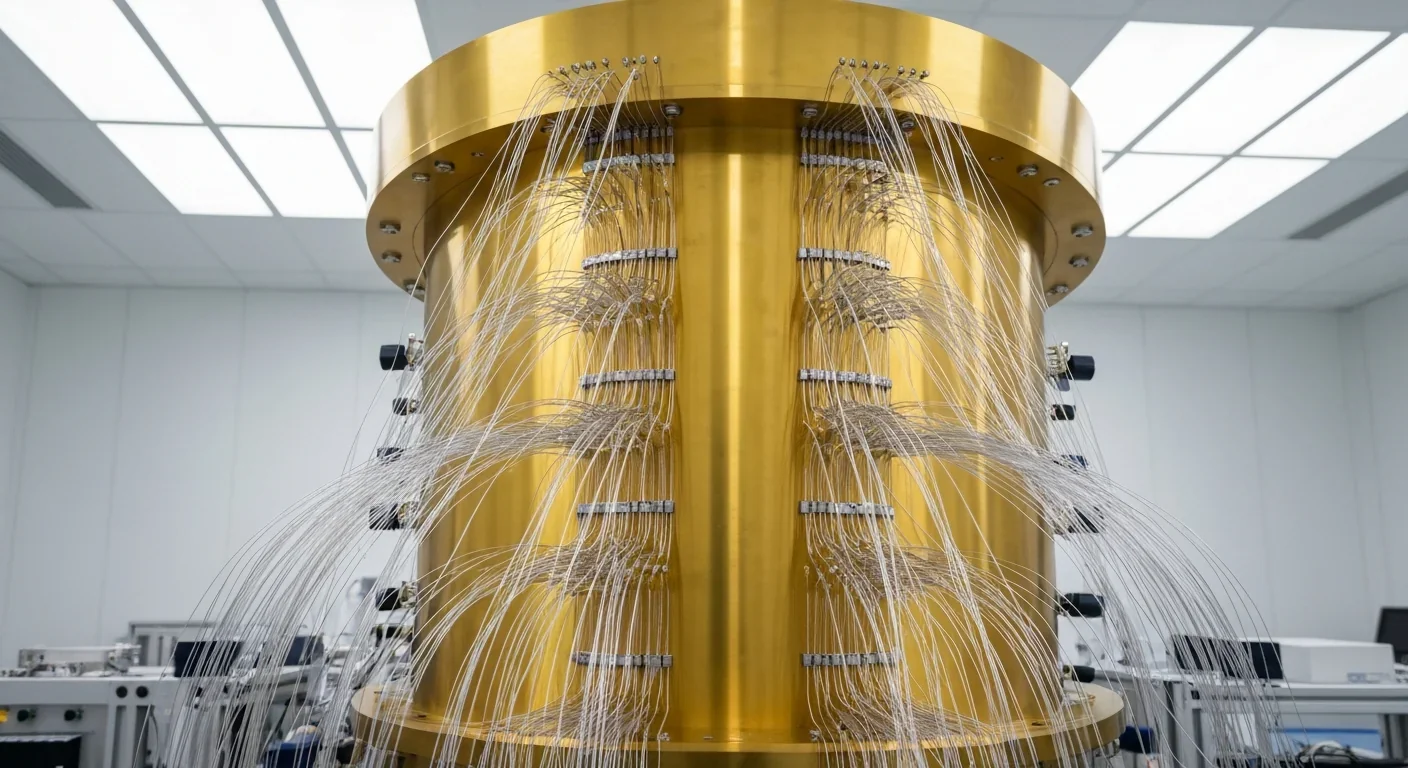

Quantum computers face a critical but overlooked challenge: classical control electronics must operate at 4 Kelvin to manage qubits effectively. This requirement creates engineering problems as complex as the quantum processors themselves, driving innovations in cryogenic semiconductor technology.