Why Quantum Computers Need Cryogenic Control Electronics

TL;DR: AWS Inferentia and Intel Gaudi are challenging Google's TPU dominance with compelling cost advantages and competitive performance. While Google maintains efficiency leadership for large-scale inference, alternatives now deliver 10% to 2.5x better price-performance on specific workloads, fundamentally reshaping AI infrastructure economics.

The battle for AI infrastructure supremacy isn't happening in boardrooms - it's unfolding in data centers worldwide, where AWS Inferentia and Intel Gaudi are mounting a serious challenge to Google's once-dominant Tensor Processing Units. What started as Google's private solution to a 2013 crisis has become a battleground in a market projected to reach $283 billion by 2032, reshaping how organizations deploy artificial intelligence.

When Google's leadership ran the numbers in 2013, they discovered something alarming: if every Android user utilized voice search for just three minutes daily, the company would need to double its global data center capacity. That existential threat sparked the creation of the TPU, custom silicon designed exclusively for neural network operations. For years, Google's hardware advantage seemed insurmountable.

But the landscape shifted dramatically in 2025. Organizations evaluating AI accelerators now face legitimate alternatives that weren't viable three years ago. AWS Inferentia 2 delivers inference workloads at $1.25 per hour, undercutting Google's TPU v5p inference rate of $1.80 per hour by about 30 percent. Meanwhile, Intel Gaudi 3 demonstrates performance-per-dollar advantages ranging from 10 percent to 2.5 times over NVIDIA's H100, depending on workload configuration.

The competitive dynamics matter because the AI accelerator market is projected to grow from $28.59 billion in 2024 to $283.13 billion by 2032, expanding at a 33.19 percent compound annual growth rate. What's at stake isn't just hardware sales - it's the foundation of cloud computing economics for the next decade.
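
The growth rate can be checked directly from the two market figures. The snippet below is a minimal sketch of the standard compound annual growth rate formula; the function name `cagr` is illustrative and not taken from any cited report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10 percent)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market figures quoted above, in billions of US dollars.
market_2024 = 28.59
market_2032 = 283.13

rate = cagr(market_2024, market_2032, years=2032 - 2024)
print(f"Implied CAGR: {rate:.2%}")
```

Over the eight years from 2024 to 2032, the result comes out at about 33.2 percent per year, in line with the quoted rate.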

The fundamental difference between these platforms lies in their design philosophy. Google's TPU v7 Ironwood uses systolic arrays - specialized circuits that minimize memory bandwidth bottlenecks by passing data rhythmically through interconnected processing elements. This architecture achieves 4,614 teraflops per chip with 192 GB of high-bandwidth memory and 7.2 terabytes per second memory bandwidth.
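
To make "passing data rhythmically" concrete, here is a minimal sketch of a systolic array's timing, assuming an output-stationary dataflow picked for brevity. It is a toy model, not a description of the TPU's actual circuit, and the function name `systolic_matmul` is hypothetical.

```python
def systolic_matmul(a, b):
    """Multiply a (M x K) by b (K x N) using an output-stationary timing model.

    Processing element (i, j) keeps a running sum for output c[i][j].
    Rows of `a` enter from the left and columns of `b` enter from the top,
    each delayed by one cycle per row or column, so operands a[i][k] and
    b[k][j] meet at element (i, j) on cycle t = i + j + k.
    """
    m, k_dim, n = len(a), len(b), len(b[0])
    if any(len(row) != k_dim for row in a):
        raise ValueError("inner dimensions must match")

    c = [[0] * n for _ in range(m)]
    cycles = m + n + k_dim - 2  # cycles until the last operand pair has met

    for t in range(cycles):
        for i in range(m):
            for j in range(n):
                k = t - i - j  # operand pair arriving at element (i, j) now
                if 0 <= k < k_dim:
                    c[i][j] += a[i][k] * b[k][j]
    return c, cycles


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
result, cycles = systolic_matmul(a, b)
print(result, cycles)
```

Each operand is fetched from memory once and then handed from neighbor to neighbor, which is where the reduction in memory bandwidth pressure comes from. For the 2 x 2 example, the model finishes in 4 cycles and returns [[19, 22], [43, 50]].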

AWS Inferentia takes a different approach, optimizing specifically for inference rather than training. The Neuron SDK compiles models from PyTorch, TensorFlow, and other frameworks into optimized instructions that maximize throughput on dedicated inference chips. This specialization means Inferentia excels at serving deployed models but can't handle training workloads.

Intel Gaudi 3 represents a third philosophy: competitive general-purpose AI acceleration without NVIDIA's price premium. According to independent testing, Gaudi 3 achieves up to 1.6 times performance-per-dollar advantage over NVIDIA H200 on certain AI models and configurations. The architecture features 24 integrated 200 Gbps Ethernet ports, enabling massive, flexible scale-out without specialized networking equipment.

Google's TPU v7 packs 192 GB of memory per chip - matching NVIDIA's latest Blackwell while consuming half the power. But memory capacity alone doesn't determine the winner in workloads where compiler optimization and interconnect bandwidth matter more than raw specifications.

The memory hierarchy reveals crucial differences. Google's Ironwood packs 192 GB of HBM per chip - matching NVIDIA's Blackwell B200 and substantially exceeding the H100's 80 GB. AWS Inferentia 2 uses 32 GB per chip but compensates through aggressive compiler optimizations. Gaudi 3 implements 128 GB of HBM2e memory with 3.7 terabytes per second of bandwidth (up from 96 GB and 2.45 terabytes per second on Gaudi 2), positioning it between cost-optimized and performance-extreme options.
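
Per-chip memory matters because it sets a floor on how many chips a model needs just to hold its weights. The sketch below uses three of the capacities quoted above with a hypothetical 70-billion-parameter model stored in 16-bit weights. It deliberately ignores KV cache, activations, and runtime overhead, so real deployments need more headroom.

```python
import math


def weight_memory_gb(params_billions: float, bytes_per_param: float) -> float:
    """Weight footprint in GB (1 billion parameters at 1 byte each is 1 GB)."""
    return params_billions * bytes_per_param


def min_chips_for_weights(params_billions: float,
                          bytes_per_param: float,
                          hbm_gb_per_chip: float) -> int:
    """Smallest chip count whose combined HBM can hold the weights alone."""
    needed = weight_memory_gb(params_billions, bytes_per_param)
    return math.ceil(needed / hbm_gb_per_chip)


# Per-chip HBM capacities quoted in the text, in GB.
HBM_GB = {"TPU v7 Ironwood": 192, "NVIDIA H100": 80, "AWS Inferentia 2": 32}

MODEL_PARAMS_BILLIONS = 70  # hypothetical model size, for illustration only
BYTES_PER_PARAM = 2         # assumption: 16-bit weights

for chip, hbm in HBM_GB.items():
    chips = min_chips_for_weights(MODEL_PARAMS_BILLIONS, BYTES_PER_PARAM, hbm)
    print(f"{chip}: at least {chips} chip(s) for weights")
```

Under these assumptions, the 140 GB of weights fit on a single 192 GB chip but must be sharded across at least two 80 GB chips or five 32 GB chips, which is where compiler and interconnect quality start to matter.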

Interconnect technologies separate hyperscale deployment capabilities. Google's custom Inter-Chip Interconnect delivers 1.2 terabits per second bandwidth, linking up to 9,216 TPUs in massive pods. Intel's integrated Ethernet approach eliminates external switches for smaller deployments while maintaining flexibility. AWS relies on Elastic Fabric Adapter for distributed workloads, though Inferentia's inference-only design reduces multi-chip communication requirements compared to training platforms.
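
As a rough sense of scale, the per-chip Ironwood figures quoted earlier can be multiplied out to a full pod. This is a back-of-the-envelope sketch that assumes perfect linear scaling of peak numbers, which real workloads will not reach.

```python
CHIPS_PER_POD = 9_216      # maximum pod size quoted above
TFLOPS_PER_CHIP = 4_614    # peak per-chip figure quoted earlier
HBM_GB_PER_CHIP = 192      # per-chip HBM quoted earlier

# 1 exaflop = 1,000,000 teraflops; 1 PB = 1,000,000 GB (decimal units).
pod_exaflops = CHIPS_PER_POD * TFLOPS_PER_CHIP / 1_000_000
pod_hbm_pb = CHIPS_PER_POD * HBM_GB_PER_CHIP / 1_000_000

print(f"Peak pod compute: {pod_exaflops:.1f} exaflops")
print(f"Total pod HBM:    {pod_hbm_pb:.2f} PB")
```

The totals come to roughly 42.5 exaflops of peak compute and about 1.77 PB of HBM per pod. Aggregates of that size are only usable if the interconnect keeps every chip fed.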

Raw performance comparisons tell only part of the story. Recent testing by Signal65 running Granite-3.1-8B-Instruct with small input and output sizes found Gaudi 3 consistently outperformed NVIDIA H100, achieving 60 percent greater cost efficiency at scale (batch size 128). However, NVIDIA H200 maintained slight throughput advantages in the same configurations.
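
"Cost efficiency" in comparisons like this generally means work delivered per dollar, so a chip can trail on throughput and still lead on cost. The sketch below shows the arithmetic with hypothetical throughputs and prices. They are not Signal65's measurements and were chosen only so the ratio lands on a 60 percent advantage.

```python
def tokens_per_dollar(tokens_per_second: float, price_per_hour: float) -> float:
    """Tokens generated for each dollar of instance time."""
    return tokens_per_second * 3600 / price_per_hour


def relative_cost_efficiency(candidate: float, baseline: float) -> float:
    """Ratio of tokens per dollar; 1.6 means 60 percent more work per dollar."""
    return candidate / baseline


# Hypothetical inputs, chosen only to illustrate the arithmetic.
baseline = tokens_per_dollar(tokens_per_second=1000, price_per_hour=10.00)
candidate = tokens_per_dollar(tokens_per_second=960, price_per_hour=6.00)

advantage = relative_cost_efficiency(candidate, baseline)
print(f"Cost-efficiency ratio: {advantage:.2f}x")
```

Here the candidate is 4 percent slower but 40 percent cheaper per hour, which yields 1.6 times the tokens per dollar.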

Workload characteristics dramatically impact relative performance. For Mixtral-8x7B inference with small inputs and outputs, Gaudi 3 delivered throughputs 8.5 to 21 percent higher than H200 across different batch sizes. The pattern emerged clearly: Gaudi 3 excels with smaller input-to-output ratios, while NVIDIA hardware performs better with large inputs and small outputs.

Google's TPU advantage becomes most apparent in large-scale inference operations. Former Google employees report that TPU v6 is 60-65 percent more efficient than GPUs for production workloads, with prior generations showing 40-45 percent efficiency gains. The specialized systolic array architecture delivers what one AMD engineer described as typical ASIC advantages: approximately 30 percent size reduction and 50 percent power reduction compared to GPUs.

AWS Inferentia 2 demonstrates different performance characteristics optimized for real-world deployment patterns. According to AWS customer testimonials, organizations achieve 2 to 4 times better price-performance ratios versus comparable GPU instances for inference workloads. The Neuron compiler's model-specific optimizations mean performance varies significantly based on architecture - transformers benefit more than convolutional networks.

"TPUs are anywhere from 25%-30% better to close to 2x better, depending on the use cases compared to Nvidia. Essentially, there's a difference between a very custom design built to do one task perfectly versus a more general purpose design."

- Former Google Chip Engineer

Training performance reveals starker divisions. Google's Gemini 3 was developed entirely on TPUs, demonstrating the platform's capability for frontier model development. Intel Gaudi 2 achieved recognition as the only benchmarked alternative to NVIDIA H100 for GPT-3 scale training in MLPerf results. AWS deliberately positioned Trainium (not Inferentia) for training, acknowledging the distinct optimization paths required.

Cloud pricing comparisons expose the economic battlefield. Google Cloud TPU v5p costs approximately $3.50 per training hour and $1.80 per inference hour. NVIDIA H100 instances run $4.50-$6.00 for training and $3.00-$4.00 for inference. AWS Inferentia 2 undercuts both at $1.25 per hour for inference-optimized instances.
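
The hourly inference rates above translate into percentage savings and monthly bills as follows. This is a simple sketch. The 730-hour month and continuous utilization are assumptions, and the prices are taken as quoted without checking what instance size each one covers.

```python
HOURS_PER_MONTH = 730  # assumption: average month, instance running nonstop

# Inference prices per hour quoted above, as (low, high) in US dollars.
INFERENCE_USD_PER_HOUR = {
    "AWS Inferentia 2": (1.25, 1.25),
    "Google Cloud TPU v5p": (1.80, 1.80),
    "NVIDIA H100": (3.00, 4.00),
}


def monthly_cost(price_per_hour: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running one instance for the given number of hours."""
    return price_per_hour * hours


def percent_cheaper(price: float, reference: float) -> float:
    """How much cheaper `price` is than `reference`, in percent."""
    return (reference - price) / reference * 100


inferentia = INFERENCE_USD_PER_HOUR["AWS Inferentia 2"][0]
for name, (low, high) in INFERENCE_USD_PER_HOUR.items():
    print(f"{name}: ${monthly_cost(low):,.2f} to ${monthly_cost(high):,.2f} per month; "
          f"Inferentia 2 is {percent_cheaper(inferentia, low):.1f}% to "
          f"{percent_cheaper(inferentia, high):.1f}% cheaper")
```

On these numbers, Inferentia 2 comes in about 30.6 percent below the TPU v5p inference rate and between roughly 58 and 69 percent below the quoted H100 range.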

On-premises economics shift the equation significantly. Detailed hardware pricing data from January 2025 reveals an eight-card server with NVIDIA H100 GPUs costs approximately $300,107, while an equivalent system with Intel Gaudi 3 accelerators runs $157,613 - about 47 percent less capital expenditure for comparable performance on many workloads.
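
To compare these purchase prices with cloud hourly rates, the capital cost can be spread over the hardware's service life. The sketch below assumes straight-line amortization over three years at full utilization. Both are illustrative assumptions, and power, cooling, networking, and staffing are left out.

```python
H100_SERVER_USD = 300_107    # eight-card server price quoted above
GAUDI3_SERVER_USD = 157_613  # eight-card server price quoted above
CARDS_PER_SERVER = 8
HOURS_PER_YEAR = 365 * 24


def capex_per_card_hour(server_price: float,
                        cards: int = CARDS_PER_SERVER,
                        years: float = 3.0,
                        utilization: float = 1.0) -> float:
    """Purchase price spread evenly over every utilized card-hour."""
    utilized_hours = years * HOURS_PER_YEAR * utilization
    return server_price / cards / utilized_hours


capex_ratio = GAUDI3_SERVER_USD / H100_SERVER_USD
h100_hourly = capex_per_card_hour(H100_SERVER_USD)
gaudi3_hourly = capex_per_card_hour(GAUDI3_SERVER_USD)

print(f"Gaudi 3 server costs {capex_ratio:.1%} of the H100 server")
print(f"H100:    ${h100_hourly:.2f} per card-hour")
print(f"Gaudi 3: ${gaudi3_hourly:.2f} per card-hour")
```

Under those assumptions the hardware alone works out to roughly $1.43 per card-hour for the H100 server and $0.75 for the Gaudi 3 server. Lower utilization raises both figures proportionally.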

Total cost of ownership extends beyond hardware acquisition. Power consumption increasingly dominates operational expenses at scale. Google's TPU v7 Ironwood delivers twice the performance per watt of TPU v6e Trillium, while Gaudi 3 demonstrates up to 40 percent better price-performance than NVIDIA alternatives on comparable AWS instances. These efficiency gains compound over multi-year deployment lifecycles.

Hidden costs emerge from ecosystem investments. CUDA development expertise commands premium salaries, but enables deployment across all major cloud providers. TPU-optimized codebases lock organizations into Google Cloud, though Google recently formed sales-oriented teams specifically to expand TPU adoption. AWS Inferentia requires Neuron SDK integration, creating similar lock-in dynamics within Amazon's ecosystem.

The data gravity problem creates an economic trap: moving datasets between clouds often costs more than the compute savings from switching accelerators. This reality keeps organizations anchored to their primary cloud provider's custom silicon, regardless of benchmark advantages elsewhere.

Multi-cloud strategies face the data gravity challenge. One former customer running both TPUs and NVIDIA GPUs explained the economic trap: "Moving data out of one cloud is one of the bigger costs. In that case, if you have NVIDIA workload, we can just go to Microsoft Azure, get a VM that has NVIDIA GPU, same GPU in fact, no code change required. With TPUs, once you are all relied on TPU and Google says, 'You know what? Now you have to pay 10X more,' then we would be screwed."
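
The data gravity trade-off reduces to a break-even calculation: how many months of compute savings it takes to pay back the one-time cost of moving the data. The sketch below uses placeholder numbers throughout. The egress rate, dataset size, and monthly savings are hypothetical and not any provider's published pricing.

```python
def egress_cost_usd(dataset_tb: float, egress_usd_per_gb: float) -> float:
    """One-time cost of moving a dataset out of a cloud (1 TB = 1,000 GB)."""
    return dataset_tb * 1000 * egress_usd_per_gb


def breakeven_months(dataset_tb: float,
                     egress_usd_per_gb: float,
                     monthly_compute_savings_usd: float) -> float:
    """Months of savings needed to recover the egress bill."""
    if monthly_compute_savings_usd <= 0:
        return float("inf")  # switching never pays for itself
    return egress_cost_usd(dataset_tb, egress_usd_per_gb) / monthly_compute_savings_usd


# Hypothetical scenario, for illustration only.
months = breakeven_months(dataset_tb=500,
                          egress_usd_per_gb=0.05,
                          monthly_compute_savings_usd=5_000)
print(f"Break-even after {months:.1f} months")
```

With these placeholder inputs, moving 500 TB costs $25,000 and takes five months of savings to recover, before counting any engineering time spent porting code.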

NVIDIA's most formidable advantage isn't hardware - it's the CUDA ecosystem ingrained in engineers' minds through years of university education. PyTorch dominates the research community, and until recently, PyTorch meant CUDA. This created a self-reinforcing cycle where developer familiarity drove adoption, which justified continued CUDA investment.

Google's ecosystem evolved internally before external adoption. TPUs use TensorFlow, JAX, and the Pathways runtime - powerful tools, but with steeper learning curves than mature CUDA workflows. Former Google Cloud employees acknowledge that ecosystem development lagged hardware capabilities because TPUs served internal workloads almost exclusively until recently.

AWS built the Neuron SDK specifically to lower migration barriers. The compilation approach means existing PyTorch and TensorFlow models can run on Inferentia with minimal code changes. This pragmatic strategy prioritizes compatibility over raw performance, reducing switching costs that previously protected NVIDIA's position.

Intel's software strategy emphasizes open standards and easy migration. Optimum Habana integrates with Hugging Face workflows, enabling developers to leverage familiar tools while accessing Gaudi hardware. According to Intel, models can migrate with reduced friction compared to more proprietary approaches.

Library maturity reveals development stage differences. CUDA boasts thousands of optimized kernels, years of community debugging, and extensive documentation. TPU software benefits from Google's internal pressure-testing but lacks the breadth of community contributions. Neuron SDK continues expanding model support, though gaps remain for newer architectures. Gaudi's ecosystem, while improving rapidly, still trails in edge cases and specialized operations.

Framework support is increasingly commoditized across platforms. PyTorch now officially supports multiple backends, including TPUs, reducing CUDA's former exclusivity. JAX runs efficiently on TPUs, GPUs, and increasingly on other accelerators. TensorFlow maintains cross-platform compatibility as a design principle. The trend clearly favors hardware diversity over ecosystem lock-in.

Production deployment patterns reveal practical decision-making beyond benchmark wars. Google internally runs Gemini, Veo, and its entire AI stack on TPUs, according to former employees. The company purchases NVIDIA GPUs for Google Cloud Platform because customers demand familiar tools, but internal operations demonstrate confidence in proprietary silicon.

Reported TPU interest outside Google shows how seriously NVIDIA takes the threat. After reports emerged that OpenAI had begun renting Google TPUs for ChatGPT workloads, NVIDIA CEO Jensen Huang reportedly called Sam Altman personally to verify the story and signal openness to renewed investment discussions. NVIDIA's official Twitter account subsequently posted a screenshot of OpenAI's denial, illustrating the competitive stakes involved.

"If I were to use eight H100s versus using one v5e pod, I would spend a lot less money on one v5e pod. When they came out with v4, the price of v2 came down so low that it was practically free to use compared to any NVIDIA GPUs."

- Google Cloud TPU Customer

AWS customers deploying Inferentia span diverse sectors. Published case studies include Snap using Trainium and Inferentia for recommendation systems, Anthropic evaluating Trainium for model development, and numerous enterprises optimizing inference costs for deployed applications. The pattern skews toward companies already committed to AWS infrastructure seeking cost optimization.

Intel Gaudi 3 adoption accelerated through cloud partnerships. IBM Cloud's implementation at $60 per hour versus $85 for NVIDIA instances creates compelling economics for price-sensitive workloads. Dell's offering of Gaudi-based systems targets enterprises preferring on-premises deployment with vendor support and integration guarantees.
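
A price gap like this sets a break-even point: the cheaper instance only has to deliver a certain fraction of the pricier instance's throughput to match it on cost per unit of work. The sketch below assumes both hourly prices cover comparably sized instances, which the figures above do not confirm.

```python
GAUDI3_USD_PER_HOUR = 60.0  # IBM Cloud price quoted above
NVIDIA_USD_PER_HOUR = 85.0  # NVIDIA instance price quoted above


def breakeven_throughput_ratio(cheaper_price: float, pricier_price: float) -> float:
    """Fraction of the pricier instance's throughput at which costs are equal."""
    return cheaper_price / pricier_price


def discount_percent(cheaper_price: float, pricier_price: float) -> float:
    """Hourly price reduction relative to the pricier instance, in percent."""
    return (pricier_price - cheaper_price) / pricier_price * 100


ratio = breakeven_throughput_ratio(GAUDI3_USD_PER_HOUR, NVIDIA_USD_PER_HOUR)
discount = discount_percent(GAUDI3_USD_PER_HOUR, NVIDIA_USD_PER_HOUR)
print(f"Hourly discount: {discount:.1f}%")
print(f"Break-even throughput: {ratio:.1%} of the NVIDIA instance")
```

At $60 versus $85, the Gaudi 3 instance is about 29 percent cheaper per hour. It comes out ahead on cost whenever it sustains more than about 71 percent of the NVIDIA instance's throughput on the workload in question.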

Workload segmentation drives platform selection. Training frontier models remains NVIDIA and TPU territory, with AWS Trainium emerging as a potential third option. Inference increasingly fragments across specialized accelerators based on cost-performance trade-offs. Niche applications like recommendation systems leverage TPU SparseCore capabilities. Edge deployment favors different silicon entirely - NVIDIA Jetson, Google Coral, or custom ASICs.

Hybrid strategies become common as organizations mature their AI operations. One customer described their approach: "If I were to use eight H100s versus using one v5e pod, I would spend a lot less money on one v5e pod. In the long run, if I am thinking I need to write a new code base, I need to do a lot more work, then it depends on how long I'm going to train. When they came out with v4, the price of v2 came down so low that it was practically free to use compared to any NVIDIA GPUs."

Vendor lock-in fears shape conservative infrastructure decisions. Organizations understand that deep TPU integration means dependence on Google Cloud pricing and availability. AWS Inferentia creates parallel dependency within Amazon's ecosystem. NVIDIA's cross-cloud availability provides insurance against single-vendor risk, even at higher costs.

Migration costs extend beyond code changes. Model optimizations, custom kernels, and performance tuning represent substantial engineering investments tied to specific platforms. One practitioner explained: "If you have a CUDA workload, we can just go to Microsoft Azure, get a VM that has NVIDIA GPU, same GPU in fact, no code required and just run it there. With TPUs, once you are all relied on TPU and Google says, 'You know what? Now you have to pay 10X more,' then we would be screwed."

Cloud providers deliberately design for stickiness. Google's TPU pods achieve remarkable performance through custom interconnects and compiler optimizations that don't transfer to other platforms. AWS Neuron SDK delivers best results through model-specific tuning that binds applications to Inferentia. Intel promotes openness, but Optimum Habana optimizations still favor Gaudi hardware.

The cloud margin squeeze is real: AI workloads are pushing providers from 50-70% gross margins down to 20-35%. Custom ASICs represent the only path back to historical profitability, which is why Google, AWS, and Microsoft are racing to perfect their proprietary silicon.

Standardization efforts aim to reduce switching costs. OpenXLA provides compiler infrastructure supporting multiple backends. ONNX format enables model portability across frameworks and hardware. PyTorch's backend abstraction increasingly decouples model code from acceleration platform. These developments favor challengers by lowering barriers to experimentation.

Risk mitigation strategies vary by organization size and sophistication. Startups often standardize on single platforms, betting on focus over flexibility. Mid-market companies maintain NVIDIA-centric toolchains while experimenting with alternatives for cost-sensitive workloads. Hyperscalers and large enterprises develop multi-platform capabilities, treating infrastructure diversity as strategic insurance.

The ASIC economics create interesting incentives for cloud providers. Google, AWS, and Microsoft all develop custom accelerators to escape NVIDIA's 75 percent gross margins. Success means returning from 20-35 percent margins on AI workloads to historical 50-70 percent cloud profitability. According to industry analysts, Google's advantage lies in controlling chip design while using Broadcom only for backend physical design, which could hold the partner's gross margin to about 50 percent.
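
The margin figures are easier to compare once converted into price multiples. The sketch below applies the standard gross margin definition. The cloud revenue and cost numbers are hypothetical, picked only to fall inside the ranges quoted above, and the 50 percent partner margin is one reading of the analysts' figure.

```python
def gross_margin(revenue: float, cost: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost) / revenue


def price_multiple(margin: float) -> float:
    """Selling price as a multiple of production cost at a given gross margin."""
    if not 0 <= margin < 1:
        raise ValueError("margin must be in [0, 1)")
    return 1 / (1 - margin)


# Supplier side: what a buyer pays relative to the cost of making the chip.
print(f"75% supplier margin: price is {price_multiple(0.75):.1f}x cost")
print(f"50% partner margin:  price is {price_multiple(0.50):.1f}x cost")

# Cloud side, hypothetical: $100 of AI revenue with falling cost of service.
print(f"Cost of $70: margin {gross_margin(100, 70):.0%}")
print(f"Cost of $40: margin {gross_margin(100, 40):.0%}")
```

A 75 percent gross margin means the buyer pays four times the production cost, against two times at a 50 percent margin. That gap is the room custom silicon gives a cloud provider to rebuild its own margins.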

Market dynamics reveal fundamental changes in AI infrastructure economics. Jensen Huang considers Google's TPU program a "special case" among ASIC competitors - acknowledgment from NVIDIA's CEO that custom silicon can achieve competitive parity. SemiAnalysis recently praised Google's position: "Google's silicon supremacy among hyperscalers is unmatched, with their TPU 7th Gen arguably on par with Nvidia Blackwell."

The trajectory favors custom accelerators for specific use cases. Inference workloads increasingly shift to specialized chips where CUDA's training advantages matter less. Former Google heads report TPUs are 5 times faster than GPUs for training dynamic models like search workloads, demonstrating domain-specific advantages beyond general benchmarks.

Cloud provider strategies diverge based on ASIC maturity. Google appears positioned to gain market share through TPU-enabled margin advantages and performance leadership. AWS Trainium faces greater uncertainty than TPU but represents the only other hyperscaler with credible custom silicon for training. Microsoft's MAIA program lags both, though Microsoft's stake in OpenAI provides access to custom ASIC work that could accelerate development.

Intel's position depends on external validation beyond internal programs. Ending the Gaudi line in favor of Jaguar Shores signals strategic pivots amid execution challenges. However, competitive pricing and partnerships with IBM Cloud, Dell, and others demonstrate market traction. Success requires convincing organizations that cost advantages outweigh ecosystem maturity gaps.

Competitive pressure accelerates innovation cycles. Industry leaders commit to annual releases through the decade - a pace historically associated with consumer electronics rather than data center infrastructure. This cadence creates depreciation challenges where hardware capabilities advance faster than typical 3-5 year refresh cycles.

Decision frameworks must account for workload-specific requirements. Pure inference deployments should seriously evaluate Inferentia and Gaudi alternatives given their cost advantages. Training frontier models remains NVIDIA and TPU territory. Hybrid approaches segmenting workloads across platforms increasingly make economic sense despite added complexity.

Start small with alternatives using pilot programs isolated from critical production paths. AWS re:Invent 2025 highlighted how organizations break through cost barriers with Trainium without wholesale migration. Google's recent sales team expansion for TPUs means more POC support and migration assistance than historically available.

Multi-cloud strategies benefit from NVIDIA's universal availability but pay premium prices for that flexibility. Organizations already standardized on single cloud providers should evaluate native accelerators offering better economics within existing ecosystems. The data gravity problem means inference often runs most cost-effectively where training data already resides.

Talent considerations matter beyond hardware specifications. CUDA expertise remains more readily available and commands market rate compensation. TPU, Neuron SDK, and Optimum Habana skills are scarcer, potentially requiring training investments or premium hiring costs. This human capital factor often tilts conservative decisions toward NVIDIA despite higher infrastructure costs.

Future-proofing requires monitoring the ecosystem maturity trajectory. Google's rate of TPU improvement reportedly exceeds NVIDIA's pace, according to former Cloud employees. AWS continues expanding Neuron SDK model support. Intel's roadmap through Jaguar Shores signals sustained investment. The competitive dynamics favor continued improvement across platforms rather than winner-take-all consolidation.

The AI infrastructure landscape has fundamentally shifted from training-centric to inference-dominant workloads. This transition favors specialized accelerators optimized for deployment rather than general-purpose platforms handling both development and production. Google positions Ironwood TPU v7 as its first TPU designed primarily for inference - validation that the market has bifurcated.

What started as Google's desperate solution to a data center crisis became a competitive advantage that forced AWS and Intel to respond with their own silicon. The result benefits organizations deploying AI because meaningful alternatives now exist across the performance-cost spectrum. Google no longer monopolizes the ASIC approach; NVIDIA no longer represents the only viable path.

The AI accelerator market's growth from $28.59 billion to a projected $283.13 billion by 2032 will be fought on multiple battlegrounds: raw performance, cost efficiency, ecosystem maturity, and workload-specific optimization. Different champions will emerge for different use cases rather than one platform dominating all scenarios.

Organizations evaluating infrastructure in 2025 face genuine choices with meaningful trade-offs. TPUs offer proven performance and efficiency for Google Cloud customers willing to embrace ecosystem lock-in. Inferentia delivers cost leadership for AWS-centric inference deployments. Gaudi 3 provides compelling economics for organizations prioritizing price-performance over ecosystem maturity. NVIDIA maintains cross-platform flexibility at premium pricing.

The monopoly is broken. What comes next depends on execution, ecosystem development, and how quickly the challengers can prove their platforms deliver not just competitive benchmarks but production-grade reliability at scale. For AI infrastructure, the competition has only just begun.

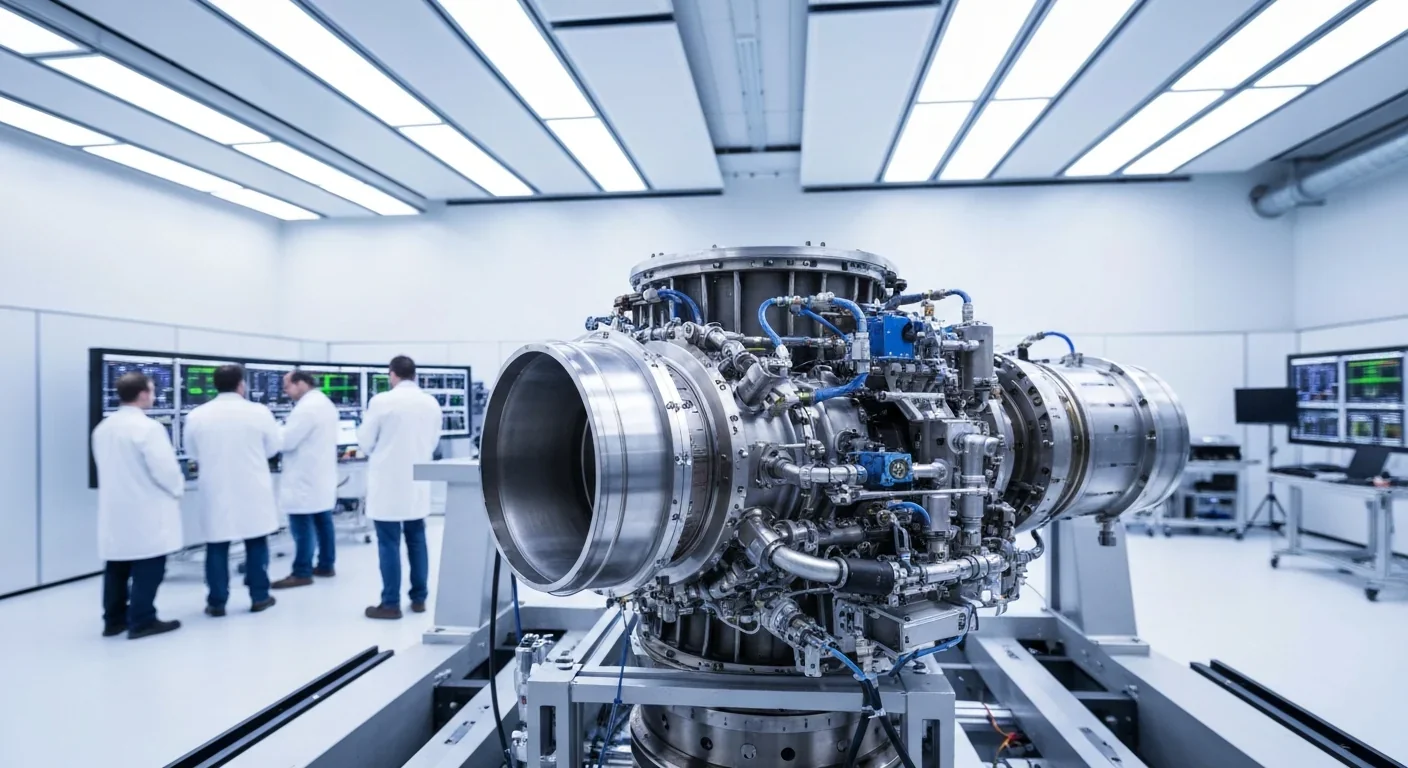

Rotating detonation engines use continuous supersonic explosions to achieve 25% better fuel efficiency than conventional rockets. NASA, the Air Force, and private companies are now testing this breakthrough technology in flight, promising to dramatically reduce space launch costs and enable more ambitious missions.

Triclosan, found in many antibacterial products, is reactivated by gut bacteria and triggers inflammation, contributes to antibiotic resistance, and disrupts hormonal systems - but plain soap and water work just as effectively without the harm.

AI-powered cameras and LED systems are revolutionizing sea turtle conservation by enabling fishing nets to detect and release endangered species in real-time, achieving up to 90% bycatch reduction while maintaining profitable shrimp operations through technology that balances environmental protection with economic viability.

The pratfall effect shows that highly competent people become more likable after making small mistakes, but only if they've already proven their capability. Understanding when vulnerability helps versus hurts can transform how we connect with others.

Leafcutter ants have practiced sustainable agriculture for 50 million years, cultivating fungus crops through specialized worker castes, sophisticated waste management, and mutualistic relationships that offer lessons for human farming systems facing climate challenges.

Gig economy platforms systematically manipulate wage calculations through algorithmic time rounding, silently transferring billions from workers to corporations. While outdated labor laws permit this, European regulations and worker-led audits offer hope for transparency and fair compensation.

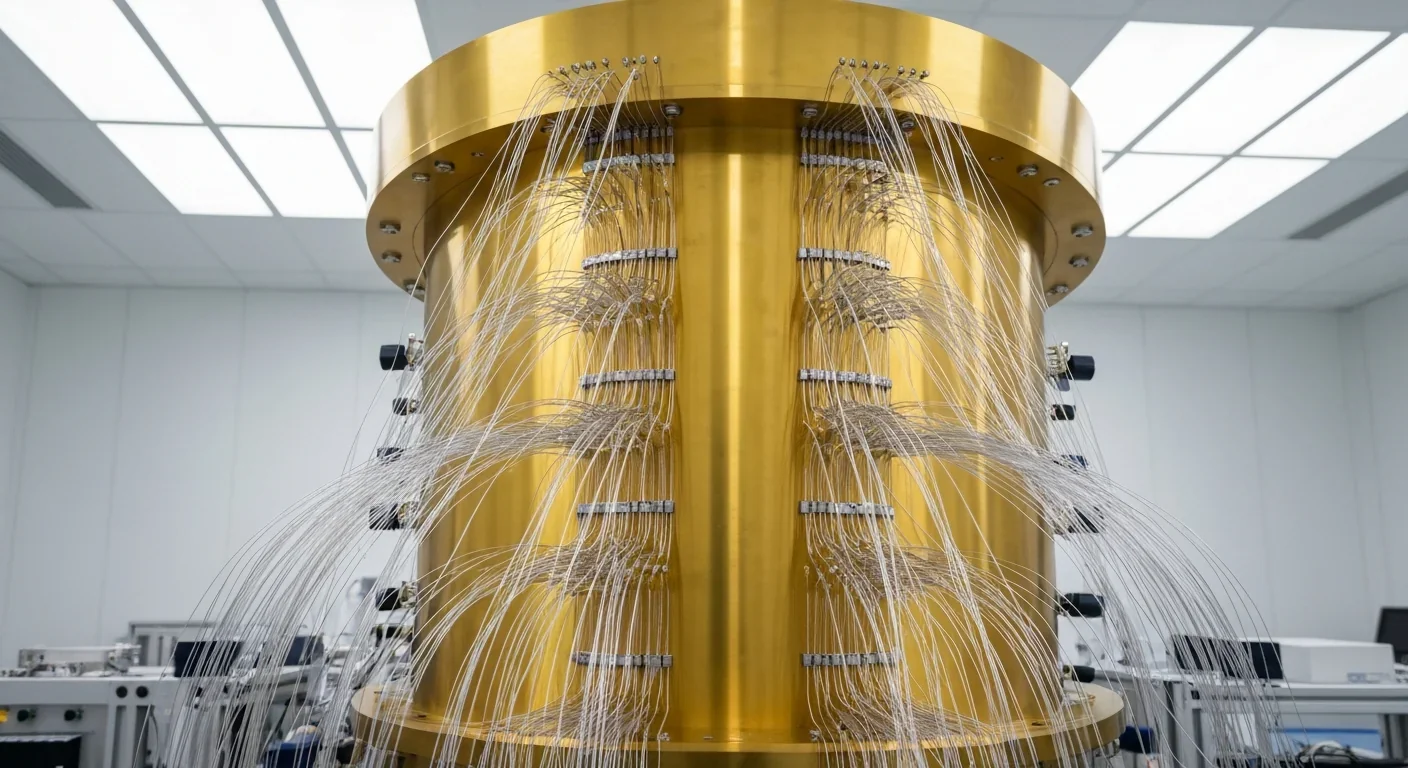

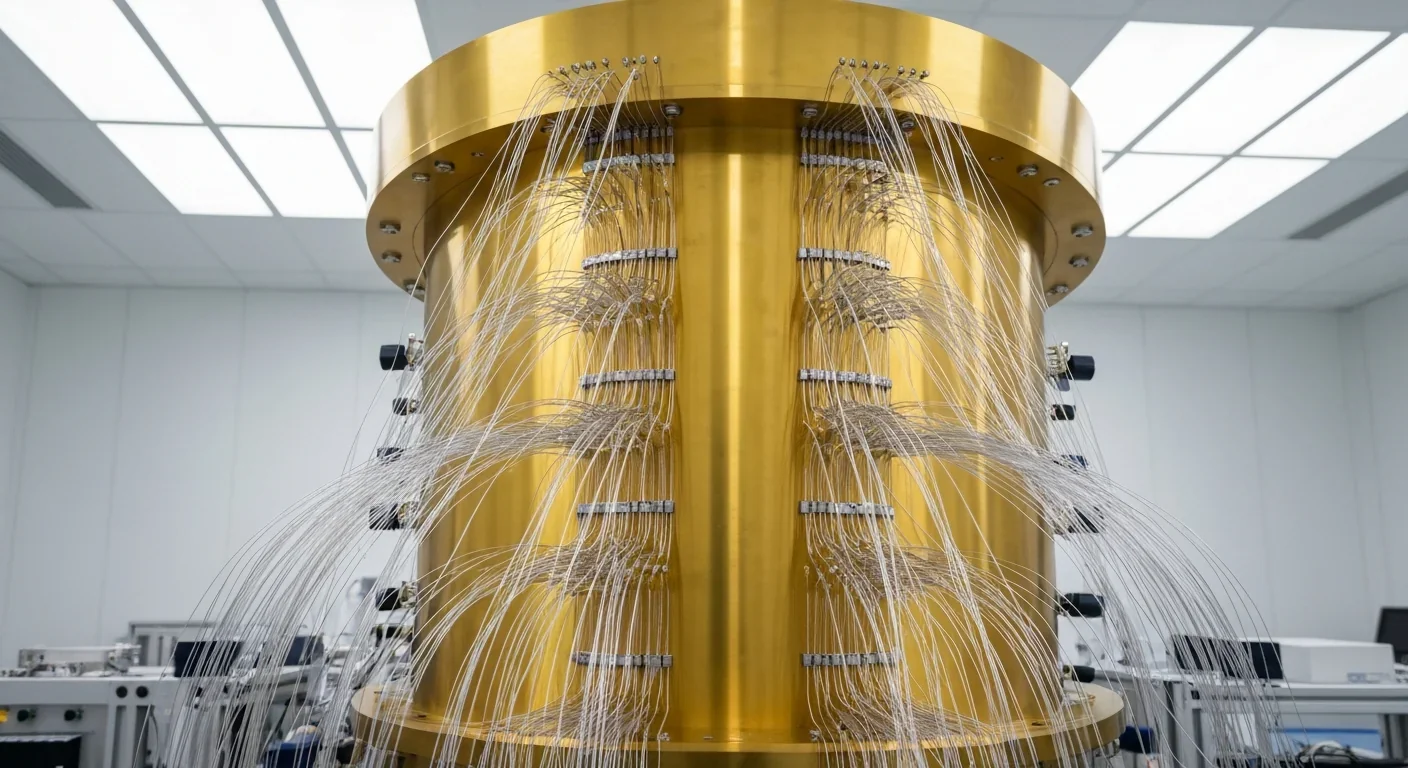

Quantum computers face a critical but overlooked challenge: classical control electronics must operate at 4 Kelvin to manage qubits effectively. This requirement creates engineering problems as complex as the quantum processors themselves, driving innovations in cryogenic semiconductor technology.