Cache Coherence Protocols: MESI and MOESI Explained

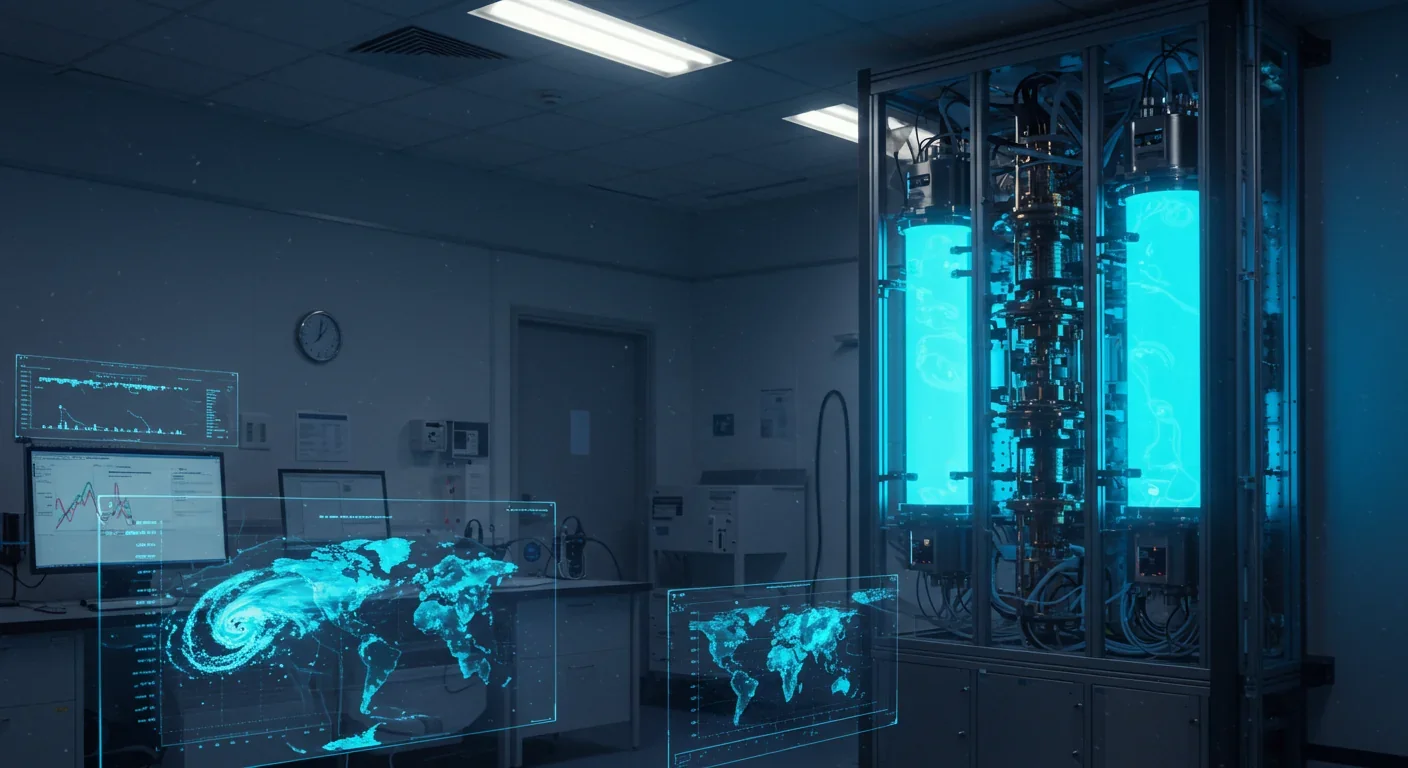

TL;DR: Quantum computers promise to revolutionize climate modeling by solving complex equations exponentially faster than traditional supercomputers. While no full quantum advantage has been demonstrated yet, recent breakthroughs in quantum algorithms and hybrid approaches show promise for dramatically improving forecast accuracy and policy decisions within the next decade.

By 2030, researchers predict quantum computers will cut climate prediction times from weeks to hours. This isn't science fiction anymore. Right now, in labs across the world, scientists are demonstrating that quantum algorithms can crack problems that make today's supercomputers sweat. The race is on to harness machines that process information in fundamentally different ways, because our current tools are hitting a wall just as climate forecasting has never been more critical.

Traditional supercomputers have served climate science remarkably well. Japan's Earth Simulator was the world's fastest machine when it debuted in 2002, a later generation of the system reached 1.3 petaflops (a petaflop is a quadrillion floating-point operations per second), and it could model global climate down to a 10-kilometer grid. Impressive, right? Yet even at that scale, scientists have to make painful compromises.

Climate models track billions of data points: ocean currents, atmospheric temperatures, ice sheet movements, cloud formations. The equations governing these systems are nonlinear, meaning small changes cascade unpredictably. Current supercomputers solve these equations by breaking the planet into grid squares and stepping forward in time, but to capture critical details like individual storm systems or ocean eddies, you'd need grids measured in meters, not kilometers. The computational cost climbs steeply: halving the horizontal grid spacing quadruples the number of grid columns and also forces a shorter time step, so each refinement multiplies the work roughly eightfold or more. We're talking about calculations that require petaflops of sustained power and massive energy consumption.
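As a rough illustration of how resolution drives cost, the sketch below assumes the work scales with the number of horizontal grid cells times the number of time steps, and that the time step shrinks in proportion to the grid spacing. The function name `relative_cost` and the scaling exponents are illustrative assumptions, not figures from any specific climate model.

```python
def relative_cost(coarse_km: float, fine_km: float, refine_vertical: bool = False) -> float:
    """Cost multiplier for refining a grid, under simple scaling assumptions.

    Assumed scaling: two horizontal dimensions plus a proportionally shorter
    time step give an exponent of 3; refining the vertical as well gives 4.
    """
    ratio = coarse_km / fine_km
    exponent = 4 if refine_vertical else 3
    return ratio ** exponent


# Going from a 10 km grid to progressively finer grids.
for fine_km in (5.0, 1.0, 0.1):
    multiplier = relative_cost(10.0, fine_km)
    print(f"10 km -> {fine_km} km: roughly {multiplier:,.0f}x more work")
```

Under these assumptions the growth is a steep power law in the refinement ratio, which is why meter-scale global grids remain out of reach.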

That's where quantum computing enters. Instead of processing bits as zeros or ones, quantum computers use qubits that can exist in a superposition of both. Superposition, together with interference and entanglement, lets a quantum algorithm act on many computational paths at once, although a measurement returns only one outcome. For certain types of problems - especially the differential equations that underpin climate physics - quantum algorithms promise exponential speedups under the right conditions.

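The magic lies in quantum linear solvers, algorithms that could in principle reduce the cost of solving huge systems of equations from the O(n³) operations of dense classical elimination down to O(poly(log n)), provided the system is sparse and well-conditioned and only summary quantities of the solution need to be read out. In plain English: problems that scale impossibly as they get larger could become tractable.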
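To get a feel for the gap between those two growth rates, the snippet below compares n³ with a polylogarithmic count. The exponent `power=2` and the function names are illustrative assumptions; real quantum linear solvers also carry factors for sparsity, condition number, and precision, plus state-preparation and readout costs, all of which are ignored here.

```python
import math


def classical_dense_ops(n: int) -> float:
    """Operation count for dense elimination, up to a constant: n^3."""
    return float(n) ** 3


def polylog_ops(n: int, power: int = 2) -> float:
    """Hypothetical poly(log n) count; the exponent is an assumption."""
    return math.log2(n) ** power


for n in (10**3, 10**6, 10**9):
    print(f"n = {n:>13,}: n^3 ~ {classical_dense_ops(n):.1e}, "
          f"(log2 n)^2 ~ {polylog_ops(n):.0f}")
```

The comparison only shows asymptotic shape. Whether a real workload sees any of this gap depends on the caveats listed above.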

Take data assimilation, the process of blending observational data with model predictions to improve forecast accuracy. Researchers at Chiba University recently tackled this using quantum annealing on a D-Wave Advantage machine. They reformulated the standard 4DVAR (four-dimensional variational) problem into a format quantum annealers can solve. The result? Computation time dropped to under 0.05 seconds for a 40-variable test system, compared to iterative classical methods that take much longer. Accuracy matched conventional approaches.
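The study's exact reformulation isn't reproduced here, but the general idea of casting a least-squares fit as a quadratic unconstrained binary optimization (QUBO) problem, the input format annealers accept, can be sketched on a toy case. The matrix `A`, the binary unknowns, and all function names below are hypothetical, and brute-force enumeration stands in for the annealer.

```python
from itertools import product


def least_squares_to_qubo(A, b):
    """Return (Q, offset) with ||A x - b||^2 == x^T Q x + offset for binary x."""
    m, n = len(A), len(A[0])
    # Quadratic part: Q = A^T A.
    Q = [[sum(A[k][i] * A[k][j] for k in range(m)) for j in range(n)]
         for i in range(n)]
    # Linear part -2 (A^T b)_i folds into the diagonal because x_i^2 == x_i.
    for i in range(n):
        Q[i][i] -= 2.0 * sum(A[k][i] * b[k] for k in range(m))
    offset = sum(v * v for v in b)
    return Q, offset


def qubo_energy(Q, x):
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def brute_force_minimum(Q):
    """Enumerate all bit strings; only feasible for tiny problems."""
    return min(product((0, 1), repeat=len(Q)), key=lambda x: qubo_energy(Q, x))


# Toy "observations" generated from a known binary state.
A = [[1.0, 2.0, 0.0], [0.0, 1.0, 1.0], [1.0, 0.0, 3.0]]
x_true = (1, 0, 1)
b = [sum(A[k][j] * x_true[j] for j in range(3)) for k in range(3)]

Q, offset = least_squares_to_qubo(A, b)
x_best = brute_force_minimum(Q)
print("recovered state:", x_best, "misfit:", qubo_energy(Q, x_best) + offset)
```

Real data assimilation works with continuous variables, so a practical encoding would also need to represent each value with several bits. That step is left out of this sketch.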

That experiment used the Lorenz-96 model, a simplified but chaotic system that mimics atmospheric behavior. It's a proof of concept on a small test problem, but it demonstrates something crucial: quantum hardware can already run the kind of calculation that operational forecasting depends on, with accuracy comparable to classical methods.
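For readers who haven't met it, Lorenz-96 is a ring of variables obeying dx_i/dt = (x_{i+1} - x_{i-2}) x_{i-1} - x_i + F. The sketch below integrates a 40-variable version with a fourth-order Runge-Kutta step. The forcing F = 8 is the commonly used chaotic setting and is an assumption here, as are the time step and the perturbation size; none of these values is taken from the study.

```python
import math


def lorenz96_tendency(x, forcing=8.0):
    """Right-hand side of Lorenz-96 with cyclic boundary conditions."""
    n = len(x)
    # Negative indices wrap around in Python, which handles x[i-1] and x[i-2].
    return [(x[(i + 1) % n] - x[i - 2]) * x[i - 1] - x[i] + forcing
            for i in range(n)]


def rk4_step(x, dt, forcing=8.0):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    k1 = lorenz96_tendency(x, forcing)
    k2 = lorenz96_tendency([xi + 0.5 * dt * k for xi, k in zip(x, k1)], forcing)
    k3 = lorenz96_tendency([xi + 0.5 * dt * k for xi, k in zip(x, k2)], forcing)
    k4 = lorenz96_tendency([xi + dt * k for xi, k in zip(x, k3)], forcing)
    return [xi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]


# Start at the uniform equilibrium x_i = F and nudge one variable.
state = [8.0] * 40
state[19] += 0.01
for _ in range(500):
    state = rk4_step(state, dt=0.01)

print("min/max after integration:", min(state), max(state))
```

The uniform state x_i = F is an exact equilibrium of the equations, so any structure in the output comes from the small nudge applied to one variable.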

Meanwhile, IonQ recently showcased its quantum-classical auxiliary-field quantum Monte Carlo algorithm (QC-AFQMC), which calculates atomic forces with unprecedented precision. Why does this matter for climate? Because modeling carbon capture materials - essential for decarbonization - requires understanding chemical reactions at the molecular level. IonQ's approach surpassed classical benchmarks and can trace reaction pathways, feeding results back into traditional computational chemistry pipelines.

Quantum computing offers two complementary routes to better climate models. The first is accelerating the core equations. Atmospheric dynamics, ocean circulation, energy transfer - all governed by partial differential equations that supercomputers grind through time step by time step. Quantum computers could solve these faster, enabling higher-resolution models or longer simulation periods without proportionally more hardware.

The second path is quantum machine learning for subgrid-scale processes. Climate models can't explicitly simulate every cloud or turbulent eddy; instead, they use parameterizations, simplified rules based on observations. But these parameterizations introduce errors. Quantum neural networks may be able to learn these relationships more efficiently than classical machine learning, potentially with fewer parameters and less training data. Early research suggests quantum models could capture subgrid phenomena even on noisy intermediate-scale quantum (NISQ) devices available today.

A 2025 study explored variational quantum circuits as the most viable near-term algorithms because they can run on existing NISQ processors and integrate into high-performance computing workflows. Unlike algorithms that need fully error-corrected hardware (still years away at scale), variational circuits tolerate some noise and can be trained iteratively, with a classical optimizer adjusting the circuit parameters between runs.
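The iterative training loop can be illustrated with the smallest possible variational circuit: one qubit, one rotation angle. The sketch below simulates an RY(theta) rotation on |0> with plain arithmetic, estimates the gradient with the parameter-shift rule, and lets a classical gradient-descent loop minimize the expectation value of Z. The starting angle, learning rate, and function names are assumptions chosen for illustration; this is not the circuit from the study.

```python
import math


def expectation_z(theta: float) -> float:
    """<Z> after RY(theta) on |0>, whose amplitudes are (cos(t/2), sin(t/2))."""
    amp0, amp1 = math.cos(theta / 2.0), math.sin(theta / 2.0)
    return amp0 * amp0 - amp1 * amp1


def parameter_shift_gradient(theta: float) -> float:
    """Gradient from two shifted circuit evaluations, as hardware would run it."""
    shift = math.pi / 2.0
    return 0.5 * (expectation_z(theta + shift) - expectation_z(theta - shift))


def train(theta: float = 0.3, learning_rate: float = 0.4, steps: int = 100) -> float:
    """Classical optimizer loop wrapped around the (simulated) quantum circuit."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta


theta_opt = train()
print("optimized angle:", theta_opt, "energy:", expectation_z(theta_opt))
```

On real hardware each call to `expectation_z` would be an average over many noisy circuit runs, and the optimizer only ever sees those averages.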

This isn't all theoretical. Google claimed in 2015 that the D-Wave 2X quantum annealer it operated with NASA outperformed classical simulated annealing and quantum Monte Carlo by up to 100 million times on hard optimization problems. That claim sparked debate - other studies found no speedup - but it underscored the potential when problem structure aligns with quantum hardware strengths.

More recently, researchers have demonstrated hybrid quantum-classical workflows. The idea: use quantum processors for the hardest sub-problems (like optimization or specific linear solves) and classical supercomputers for everything else. This architecture acknowledges that quantum computers won't replace traditional HPC overnight. Instead, they'll act as accelerators, much like GPUs transformed machine learning.

One promising application is weather prediction accuracy. Some estimates suggest quantum computing could improve forecast models by 30-50%, particularly for extreme events like hurricanes or droughts, though these figures are projections rather than measured results. Better short-term forecasts save lives and money. Better long-term climate projections inform trillion-dollar infrastructure decisions.

Here's the catch: as of 2025, no one has demonstrated unambiguous quantum advantage for a full climate modeling task. Current gate-based quantum hardware is limited to noisy intermediate-scale devices with tens to roughly a thousand physical qubits, short coherence times, and error rates that require mitigation strategies.

Quantum decoherence - the loss of quantum information due to environmental interference - remains a fundamental challenge. Qubits are fragile. Even tiny vibrations or temperature fluctuations can destroy superposition. Practical quantum error correction demands thousands of physical qubits to create a single logical qubit, and we're not there yet.

Encoding classical climate data into quantum states and reading out the solution efficiently is another bottleneck. If you have to load and measure data at every time step across a global 3D grid, the overhead can erase any quantum speedup. Researchers are exploring ways around this, like keeping intermediate results in quantum memory or using quantum states to represent probability distributions rather than raw grid values.
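One common way to picture the encoding step is amplitude encoding, where N classical values become the amplitudes of a state on about log2(N) qubits. The sketch below only computes the normalized amplitude vector and the qubit count; the function names are hypothetical, and it is an assumption that a given climate algorithm would use this particular encoding.

```python
import math


def qubits_for(num_values: int) -> int:
    """Smallest number of qubits whose 2^q amplitudes can hold num_values entries."""
    return max(1, (num_values - 1).bit_length())


def amplitude_encode(values):
    """Pad to a power of two and scale to unit length, as a quantum state requires."""
    n_qubits = qubits_for(len(values))
    padded = list(values) + [0.0] * (2 ** n_qubits - len(values))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0.0:
        raise ValueError("cannot encode an all-zero vector")
    return [v / norm for v in padded], n_qubits


amplitudes, n_qubits = amplitude_encode([3.0, 4.0])
print("amplitudes:", amplitudes, "qubits:", n_qubits)
print("qubits for a million grid values:", qubits_for(10**6))
```

The qubit count is tiny, but the catch described above remains: preparing such a state from classical data and extracting results by measurement can each cost time proportional to N, and normalization discards the overall scale of the data.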

Then there's the question of scale. The Earth's atmosphere has roughly 10⁶ degrees of freedom in a coarse model. Full-resolution models with ocean-atmosphere-ice coupling? Orders of magnitude more. Quantum algorithms show promise for linear systems, but scaling them to planetary complexity while maintaining coherence is a monumental engineering challenge.

The quantum computing community is tackling these hurdles from multiple angles. Error mitigation techniques - like taking multiple reads from quantum annealers and averaging results - can improve accuracy on NISQ devices without full error correction. The Chiba University weather prediction study demonstrated this: stochastic quantum effects caused larger errors initially, but multiple solution reads brought accuracy back in line with classical methods.
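Why repeated reads help can be shown with a purely classical stand-in. The sketch below models each read as the true answer plus random noise and averages a growing number of reads. The Gaussian noise model, the noise level, and the function names are assumptions for illustration; real annealer noise is not Gaussian, and this is not the study's procedure.

```python
import random
import statistics


def noisy_read(true_value: float, noise_std: float, rng: random.Random) -> float:
    """One simulated hardware read: the true value plus assumed Gaussian noise."""
    return true_value + rng.gauss(0.0, noise_std)


def averaged_estimate(true_value: float, noise_std: float,
                      num_reads: int, rng: random.Random) -> float:
    """Average several independent reads to suppress the random error."""
    reads = [noisy_read(true_value, noise_std, rng) for _ in range(num_reads)]
    return statistics.mean(reads)


rng = random.Random(0)  # fixed seed so the run is repeatable
for num_reads in (1, 10, 100, 1000):
    estimate = averaged_estimate(1.0, 0.5, num_reads, rng)
    print(f"{num_reads:>5} reads: estimate = {estimate:.4f}")
```

For independent noise, the expected error of the average shrinks roughly as one over the square root of the number of reads, so each extra digit of accuracy costs about a hundred times more reads.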

Hybrid algorithms offer another strategy. Instead of trying to run an entire climate model on a quantum processor, you identify the computational bottlenecks - say, solving a massive sparse matrix or optimizing parameterizations - and offload just those tasks to quantum hardware. Classical systems handle data preprocessing, model integration, and output analysis.
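In code, that division of labor amounts to making the bottleneck step pluggable. The sketch below runs a toy implicit time-stepping loop in which every step solves a linear system through an injectable `solver` callable. The default is ordinary Gaussian elimination, and `offloaded_solve` is a hypothetical stand-in showing where a call to quantum hardware would go. No real quantum SDK is used, and all names are assumptions.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]
LinearSolver = Callable[[Matrix, Vector], Vector]


def classical_solve(matrix: Matrix, rhs: Vector) -> Vector:
    """Gaussian elimination with partial pivoting (the classical baseline)."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def run_model(state: Vector, system_matrix: Matrix, steps: int,
              solver: LinearSolver = classical_solve) -> Vector:
    """Toy implicit stepping: each step solves system_matrix @ new = old."""
    for _ in range(steps):
        state = solver(system_matrix, state)
    return state


calls = []


def offloaded_solve(matrix: Matrix, rhs: Vector) -> Vector:
    """Hypothetical accelerator hook: logs the call, then solves classically."""
    calls.append(len(rhs))
    return classical_solve(matrix, rhs)


final_state = run_model([8.0, 16.0], [[2.0, 0.0], [0.0, 4.0]],
                        steps=2, solver=offloaded_solve)
print("final state:", final_state, "offloaded solves:", len(calls))
```

Keeping the solver behind a plain function signature means the surrounding model code does not change when the backend does, which is the same pattern that eased GPU adoption.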

Algorithm innovation continues. Variational quantum eigensolvers, quantum approximate optimization algorithms, and quantum annealing are all being adapted for climate-relevant problems. Some researchers are exploring quantum-enhanced Monte Carlo methods, which could accelerate uncertainty quantification: running thousands of simulations with slightly varied inputs to understand the range of possible outcomes.
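The appeal of quantum-enhanced Monte Carlo comes down to how error shrinks with effort. Classical sampling error falls as 1/sqrt(N), while idealized quantum amplitude estimation falls as 1/N. The sketch below turns those two scalings into rough sample counts. Constants, noise, and circuit depth are ignored, and the function names and the unit standard deviation are assumptions.

```python
import math


def classical_samples(target_error: float, std: float = 1.0) -> int:
    """Samples needed when error ~ std / sqrt(N)."""
    return math.ceil((std / target_error) ** 2)


def quantum_queries(target_error: float, std: float = 1.0) -> int:
    """Idealized query count when error ~ std / N (constants dropped)."""
    return math.ceil(std / target_error)


for target in (0.125, 0.01, 0.001):
    print(f"target error {target}: classical ~ {classical_samples(target):,} samples, "
          f"idealized quantum ~ {quantum_queries(target):,} queries")
```

This is a quadratic improvement rather than an exponential one, so it matters most when each ensemble member is expensive and tight error bars are required.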

And then there's the hardware race. Companies like IonQ, IBM, Google, and Rigetti are scaling up qubit counts and improving coherence times. New architectures - topological qubits, photonic quantum computers - promise greater stability. Governments are investing billions. The European Union, United States, China, and others have launched quantum initiatives with climate applications explicitly in scope.

If quantum climate models deliver on their promise, the implications ripple far beyond academia. More accurate forecasts mean better disaster preparedness. Imagine predicting a cyclone's path three days further out with 20% more certainty. Evacuation plans become more effective. Infrastructure can be reinforced. Crops can be harvested early.

Long-term projections matter even more. Policymakers wrestling with carbon targets, adaptation strategies, and international agreements rely on climate models to estimate future warming, sea-level rise, and regional impacts. Uncertainty in these projections - currently wide enough to span catastrophic versus manageable outcomes - stems partly from computational limits. Sharper models could narrow those error bars, clarifying which interventions are essential and which are optional.

Public engagement shifts, too. Climate skepticism often fixates on model disagreement. If quantum-enhanced forecasts converge more tightly and verify against observations more consistently, the scientific consensus becomes harder to dismiss. Conversely, if quantum models reveal worst-case scenarios we've underestimated, that's critical information.

There's also an economic dimension. Quantum computing is expensive and energy-intensive (though ironically, a successful quantum climate model might run on less power than a petaflop-scale classical simulation). Access will initially concentrate in wealthy nations and big tech companies. Will climate modeling become a luxury good, or will international collaborations ensure equitable access?

Not everyone is convinced quantum computing will be the climate savior. Some physicists argue that the practical overhead - error correction, data encoding, measurement - could negate theoretical speedups for decades. Classical supercomputers are still improving. Moore's Law may be slowing, but specialized processors (like tensor processing units for machine learning) continue to advance.

A 2025 review noted that "no quantum advantage has yet been demonstrated in the context of climate modeling." That's blunt but fair. The Chiba University and IonQ studies are impressive, but they're proofs of concept on simplified systems. Scaling to operational weather forecasting or century-long climate simulations is a different beast.

There's also the question of problem fit. Quantum algorithms offer known advantages for certain tasks - factoring large numbers, searching unsorted databases, simulating quantum systems. But many climate modeling steps are inherently sequential or rely on classical heuristics that don't have known quantum equivalents. Hybrid approaches make sense, but they require seamless integration between vastly different computing paradigms.

Despite the challenges, momentum is building. The combination of urgent climate need and rapid quantum progress creates a unique window. Researchers describe this moment as a chance for "strong interdisciplinary collaboration between climate scientists and quantum computing experts" to overcome technical hurdles together.

Climate modeling has always pushed computing frontiers. In the 1960s, the first numerical weather predictions required the fastest machines available. Each generation of supercomputers enabled finer grids and more complex physics. Quantum computing is the next leap, but it demands rethinking algorithms from the ground up rather than just faster hardware.

Some breakthroughs could arrive sooner than expected. Quantum sensors, a related technology, can already detect CO₂ emissions at parts-per-billion precision, vastly improving monitoring accuracy. Integrating better observational data with quantum-enhanced assimilation could transform forecasting within a few years.

Meanwhile, quantum machine learning for subgrid parameterizations might be the low-hanging fruit. Training a quantum neural network doesn't require solving the full climate equations on quantum hardware. If it improves just one component - say, cloud cover representation - that's an early, concrete win.

The timeline depends on whom you ask. Optimists point to the rapid qubit scaling and suggest practical climate applications by 2030. Skeptics say we need fault-tolerant quantum computers with millions of qubits, which might not arrive until 2040 or later. The truth probably lies somewhere in between.

In the near term, expect more hybrid demonstrations: quantum processors handling optimization or linear algebra within classical climate codes. Expect more studies like the Chiba weather prediction work, applying quantum algorithms to progressively larger and more realistic systems. Expect investment to keep pouring in, because climate change and quantum computing both occupy the top tier of national research priorities.

Crucially, the conversation is shifting from "Can quantum computers help?" to "How do we make quantum climate modeling work?" That shift signals a field maturing from speculation to engineering.

For those watching from the sidelines - policymakers, climate activists, the public - the message is cautiously hopeful. Quantum computing won't solve climate change by itself. Reducing emissions, transitioning to renewables, protecting ecosystems - those remain paramount. But better models could guide those efforts more effectively, allocate resources more wisely, and communicate risks more compellingly.

At the molecular level, the planet's climate rests on the same quantum rules that quantum computers exploit, even though its large-scale behavior is classical and chaotic. Maybe it's fitting that the machines we build to mimic quantum reality will help us understand the one we live in. The race is on, and the stakes couldn't be higher.
