TL;DR: Homomorphic encryption allows computers to analyze encrypted data without ever decrypting it, finally solving the privacy-versus-utility trade-off. After years of impracticality, recent breakthroughs make it viable for healthcare, finance, and cloud computing applications.

Your medical records sit on a cloud server somewhere. So does your financial history, your search queries, your DNA sequence. Every time an algorithm analyzes that data - checking for fraud, diagnosing disease, personalizing recommendations - someone's computer sees everything. The raw, unencrypted truth about you.

What if that could change? What if computers could crunch your most sensitive data without ever actually seeing it?

That's not science fiction anymore. It's homomorphic encryption, and after decades as a theoretical curiosity, it's finally becoming real. The technology lets cloud servers, research institutions, and AI systems perform complex calculations on encrypted data without decrypting it first. When you get the results back and unlock them with your key, they're exactly what you'd get if the computation happened on raw data - but the server never saw anything.

This solves one of cybersecurity's most persistent problems: you can't analyze what you can't see, but you also can't protect what you must reveal. For years, we've lived with that trade-off. Now we might not have to.

Think about how weird our current situation is. You encrypt your data before uploading it to the cloud - smart move. But the moment you want to actually use that data, it has to be decrypted. The server works on it in the clear, does its calculations, then re-encrypts the results.

During that processing window, your data is naked. The cloud provider can see it. A hacker who breaches the server can see it. A rogue employee can see it. Even if everyone behaves perfectly, you're trusting them with your secrets.

Healthcare faces this constantly. Researchers want to run predictive analysis on medical records to find patterns that save lives, but privacy laws like HIPAA and GDPR restrict what they can see. Financial services need to detect fraud across customer accounts without exposing transaction details. Governments want to analyze encrypted communications for threats without conducting mass surveillance.

The usual solution has been to minimize decryption time, tighten access controls, and hope nothing goes wrong. But that's risk management, not risk elimination.

Homomorphic encryption eliminates the risk. You send encrypted data to the cloud. The cloud performs whatever computations you need - statistical analysis, machine learning inference, database queries - all on the encrypted version. It sends back encrypted results. Only you, with your private key, can decrypt the final answer. The server never sees your data at any point in the process.

Cryptographers call this the "holy grail" of encryption because it seemed impossibly elegant. For most of modern computing's history, it was impossible.

The story starts with a problem that stumped mathematicians for decades. Traditional encryption scrambles data so thoroughly that you can't do anything with it except unscramble it. That's the point - make the encrypted message useless to anyone without the key.

But what if you could design an encryption scheme where certain operations on the scrambled data produced the same result as performing those operations on the original? Add two encrypted numbers, get an encrypted sum that decrypts to the correct answer. Multiply, divide, compare - all possible on ciphertext, producing results that match what you'd get on plaintext.

People had created partial versions of this. Textbook RSA, the unpadded form of the algorithm used throughout web security, supports multiplication on encrypted data: multiply two ciphertexts and the result decrypts to the product of the two plaintexts. The Paillier cryptosystem, developed in 1999, handles addition. These "partially homomorphic" schemes opened interesting possibilities - encrypted voting, where you could tally ballots without seeing individual votes, for instance.
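To make the RSA property concrete, here is a minimal sketch using textbook RSA with deliberately tiny primes. The parameters (61, 53, exponent 17) and the function names are illustrative assumptions, not anything from a production system. Real RSA deployments add padding, which intentionally destroys this property.

```python
# Toy textbook RSA with tiny, insecure parameters (illustration only).
p, q = 61, 53
n = p * q                     # public modulus
phi = (p - 1) * (q - 1)
e = 17                        # public exponent
d = pow(e, -1, phi)           # private exponent (modular inverse, Python 3.8+)


def encrypt(m: int) -> int:
    """Textbook RSA encryption with no padding."""
    return pow(m, e, n)


def decrypt(c: int) -> int:
    """Textbook RSA decryption."""
    return pow(c, d, n)


a, b = 7, 12
# Multiply the two ciphertexts without ever decrypting them.
c_product = (encrypt(a) * encrypt(b)) % n

# The product of ciphertexts decrypts to the product of plaintexts (mod n).
assert decrypt(c_product) == (a * b) % n
```

The same trick does not give you addition, which is why schemes like this count as only partially homomorphic.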

But nobody could create fully homomorphic encryption that supported both addition and multiplication, which together enable any arbitrary computation. Most cryptographers thought it might be mathematically impossible.

Then in 2009, Craig Gentry, then a graduate student at Stanford, published a breakthrough that stunned the field. He proved fully homomorphic encryption was theoretically possible and provided the first working construction.

Gentry's insight was to build a system that could handle a limited number of operations before accumulating too much "noise" in the encrypted data - this is called "somewhat homomorphic encryption." Then, brilliantly, he showed how to "bootstrap" the scheme: use it to homomorphically decrypt itself, stripping away the noise, and repeat the process indefinitely. This meant you could chain together unlimited computations.

The catch? Gentry's original scheme was absurdly impractical. Processing even simple operations took hours. The encrypted data expanded to thousands of times its original size. It was a proof of concept, not a usable tool.

But it was enough. Gentry showed the path existed, and researchers worldwide started optimizing the journey.

Today's homomorphic encryption comes in three types, representing different trade-offs between capability and performance.

Partially Homomorphic Encryption (PHE) supports only one type of operation - either addition or multiplication, but not both. This sounds limited, but it's useful for specific tasks. The Paillier scheme's support for addition makes it perfect for secure voting systems: encrypt each vote, add up the encrypted ballots, then decrypt only the final tally. Nobody sees individual votes, but you get an accurate count. PHE is computationally cheap, making it practical for real-time applications.
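The voting tally can be sketched in a few lines of pure Python. This is a toy Paillier implementation under stated assumptions: the primes are far too small to be secure, the helper names are made up for this example, and a real election system would also need proofs that each ballot encrypts 0 or 1.

```python
import math
import secrets

# Toy primes: far too small for real use (illustration only).
P, Q = 293, 433
N = P * Q
N_SQ = N * N
G = N + 1                                          # standard simple generator
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P-1, Q-1)
MU = pow(LAM, -1, N)                               # inverse of LAM modulo N


def encrypt(m: int) -> int:
    """Encrypt m with fresh randomness r that is coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover the plaintext using the private values LAM and MU."""
    x = pow(c, LAM, N_SQ)
    return ((x - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying Paillier ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % N_SQ


votes = [1, 0, 1, 1, 0, 1]       # 1 = yes, 0 = no
tally = encrypt(0)
for vote in votes:
    tally = add_encrypted(tally, encrypt(vote))

# Only the final tally is ever decrypted, never an individual ballot.
assert decrypt(tally) == sum(votes)
```

Whoever does the counting only needs `add_encrypted`, which uses the public modulus. The private values `LAM` and `MU` stay with the party that decrypts the final count.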

Somewhat Homomorphic Encryption (SWHE) supports both addition and multiplication, but only up to a limited number of operations before the noise overwhelms the data. Think of it like a battery that runs down with each calculation. For specific workloads where you know exactly how many operations you need - say, running a particular machine learning model - SWHE can be tuned to handle that workload efficiently. Recent research shows SWHE performing quality-control checks on healthcare data with only a 30× slowdown compared to plaintext processing.
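The battery analogy is easier to see with numbers. The sketch below is a toy symmetric scheme over the integers, in the style of the construction by van Dijk, Gentry, Halevi, and Vaikuntanathan rather than Gentry's original lattice design. The key size, noise range, and function names are assumptions chosen to keep the example readable, and nothing here is secure.

```python
import random

rng = random.Random(0)       # fixed seed so the sketch is repeatable
SECRET_P = 1_000_003         # odd secret key (toy size, insecure)


def encrypt(bit: int) -> int:
    """Hide a bit under a large multiple of the key plus small even noise."""
    q = rng.randrange(10**6, 10**7)   # large random multiplier
    r = rng.randrange(1, 10)          # small random noise, 1..9
    return SECRET_P * q + 2 * r + bit


def decrypt(c: int) -> int:
    """Correct only while the accumulated noise stays below SECRET_P."""
    return (c % SECRET_P) % 2


def noise(c: int) -> int:
    """Needs the secret key; shown only to watch the budget drain."""
    return c % SECRET_P


a, b = encrypt(1), encrypt(0)
assert decrypt(a + b) == 1 ^ 0   # ciphertext addition acts as XOR
assert decrypt(a * b) == 1 & 0   # ciphertext multiplication acts as AND

# Chain multiplications of encrypted 1s. Each fresh noise term is at most 19,
# so four factors are guaranteed to stay under the key (19**4 < SECRET_P).
product = encrypt(1)
for factors in range(2, 5):
    product = product * encrypt(1)
    assert decrypt(product) == 1
    print(f"{factors} factors: noise = {noise(product)}")
```

Addition grows the noise slowly, while multiplication multiplies it. With these toy parameters a fifth or sixth factor can push the noise past the key, after which decryption is no longer reliable. Bootstrapping is the step that resets the noise so the chain can continue.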

Fully Homomorphic Encryption (FHE) supports unlimited addition and multiplication operations, meaning it can run any computation you could perform on plaintext data. This is the "holy grail" version. Modern FHE schemes use Gentry's bootstrapping concept, but with vastly improved efficiency. Libraries like Microsoft SEAL and IBM's HElib now make FHE accessible to developers without requiring a PhD in cryptography.

"We evaluate the performance, resource requirements, and viability of deploying FHE in these settings through extensive testing and analysis, highlighting the progress made in FHE tooling and the obstacles still facing addressing the gap between conceptual research and practical applications."

- Research paper on FHE in Healthcare

The performance gap between these types keeps shrinking. What took hours in 2009 now takes seconds. But FHE still carries significant computational overhead - typically 100× to 200× slower than plaintext operations for complex tasks like neural network inference.

Here's the uncomfortable truth: homomorphic encryption is still slow and memory-hungry. Recent benchmarking shows that running a convolutional neural network on encrypted medical imaging data requires roughly 200× more CPU time than the same operation on unencrypted data. Encrypted data also expands - ciphertext can be 8× larger than the original plaintext, stressing storage and bandwidth.

These numbers sound prohibitive, but context matters. For many applications, "200× slower" is perfectly acceptable. If a fraud detection algorithm runs in 10 milliseconds on plaintext, running it in 2 seconds on encrypted data is fine. The user never notices, but the privacy gain is enormous.
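The arithmetic behind that judgment is simple enough to script. In this rough sketch the overhead factors come from the figures quoted above, while the workload names, plaintext timings, and the 3-second budget are hypothetical values picked for illustration.

```python
def encrypted_latency_ms(plaintext_ms: float, overhead: float) -> float:
    """Estimated latency when the same job runs on ciphertext."""
    return plaintext_ms * overhead


def fits_budget(plaintext_ms: float, overhead: float, budget_ms: float) -> bool:
    """True if the encrypted run still meets the latency budget."""
    return encrypted_latency_ms(plaintext_ms, overhead) <= budget_ms


# The fraud check from the text: 10 ms on plaintext at 200x overhead.
assert encrypted_latency_ms(10, 200) == 2000   # 2 seconds

BUDGET_MS = 3000  # hypothetical tolerance for a background check

# (name, plaintext latency in ms, overhead factor) - hypothetical workloads
workloads = [
    ("fraud check", 10, 200),
    ("threshold QC check", 10, 30),
    ("interactive video frame", 16, 200),
]

for name, plaintext_ms, overhead in workloads:
    latency = encrypted_latency_ms(plaintext_ms, overhead)
    verdict = "fits" if fits_budget(plaintext_ms, overhead, BUDGET_MS) else "too slow"
    print(f"{name}: {latency:.0f} ms encrypted ({verdict})")
```

The point is that the overhead factor matters less than the starting latency and how much delay the application can tolerate.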

And performance keeps improving. Hardware acceleration is arriving - specialized chips designed specifically for homomorphic operations. Companies are developing FPGAs and ASICs that dramatically speed up the math underlying FHE schemes. Software optimizations continue: better algorithms, more efficient encoding schemes, clever ways to minimize the number of operations needed.

The computational overhead also varies wildly by use case. Simple operations like quality-control checks - comparing values against thresholds, flagging outliers - run with only 30× overhead. Statistical analysis on encrypted datasets performs reasonably well. It's the complex workloads like deep learning that still struggle.

This creates a clear adoption path: start with simpler, high-value use cases where performance is acceptable, build expertise, and expand as the technology matures. You don't need to wait until FHE matches plaintext speed to find valuable applications.

Healthcare is the most obvious frontier. Medical data is incredibly sensitive, but its value multiplies when researchers can aggregate and analyze it across populations. Homomorphic encryption lets hospitals share encrypted patient records with research institutions, who can then run analytics - identifying disease patterns, testing treatment effectiveness, training diagnostic AI models - without ever seeing individual patient data.

IBM partnered with Banco Bradesco to pilot FHE for financial modeling on encrypted customer data. The bank's servers processed risk assessments and portfolio optimization without exposing sensitive financial information. This proves especially valuable in international banking, where data sovereignty laws prohibit moving certain data across borders, but analytical workloads might need to run in centralized data centers.

Financial services also drive encrypted transaction monitoring. Credit card companies want to detect fraud patterns across millions of accounts, but revealing all transaction details to a central fraud-detection system creates privacy and security risks. With FHE, individual banks can submit encrypted transaction data to a shared fraud-detection network that identifies suspicious patterns without seeing specific purchases.

Identity verification represents an emerging use case. When you prove you're over 21 to buy alcohol online, why should the vendor know your exact birthdate? Homomorphic encryption enables attribute checks - confirming you meet an age threshold or live in a specific region - without revealing the underlying data. The system returns only "yes" or "no," not your personal details.

Cloud computing adoption depends on solving the trust problem. Enterprises hesitate to move their most sensitive workloads to public clouds precisely because they must trust the provider. FHE turns any cloud into a "zero-trust" environment: your data stays encrypted end-to-end, the cloud provides computational power without gaining access, and you retain complete control.

For homomorphic encryption to go mainstream, it needs standards. Developers need to know which schemes are secure, which implementations are reliable, and how to make different systems interoperate.

That process is underway. ISO/IEC JTC 1/SC 27, the joint subcommittee that covers information security, is developing international standards for homomorphic encryption schemes, APIs, and security parameters. NIST, the US National Institute of Standards and Technology, follows FHE through its privacy-enhancing cryptography work rather than its post-quantum standardization process, but the two are related: most modern FHE schemes rest on lattice problems that are believed to resist quantum computer attacks - a critical feature as quantum computing advances.

The Homomorphic Encryption Standardization consortium, formed in 2017, brings together researchers and industry players to establish security standards, API specifications, and application-level interoperability. Microsoft, IBM, Intel, and dozens of others participate, signaling serious commercial interest.

Open-source libraries are maturing rapidly. Microsoft SEAL, open-sourced in 2018, provides a well-documented C++ library with .NET wrappers, making FHE accessible to mainstream developers. IBM's HElib offers similar functionality. TFHE (Fast Fully Homomorphic Encryption over the Torus), developed by researchers in France, emphasizes fast bootstrapping and has been deployed in real-world applications.

These libraries abstract away the mathematical complexity. Developers can build encrypted applications without understanding lattice cryptography or noise management - the libraries handle that. This democratization accelerates adoption by expanding the pool of people who can work with the technology.

While homomorphic encryption began in academia, commercial players now drive its evolution. Microsoft has integrated SEAL into Azure, allowing customers to run confidential computing workloads using FHE. The company sees encrypted machine learning as a key differentiator in the cloud wars - offering AI services that never see customer data addresses compliance concerns that block some enterprise adoption.

IBM positions FHE as part of its broader quantum-safe cryptography portfolio, bundling it with secure multiparty computation and other privacy-preserving technologies. The company's work with financial institutions demonstrates executive-level interest in moving beyond pilots to production deployments.

Intel develops hardware acceleration for homomorphic operations, recognizing that widespread adoption requires performance improvements beyond algorithmic optimization. The company's HEXL library provides optimized primitives for FHE operations, exploiting modern CPU instructions to speed up the underlying math.

Zama, a Paris-based cryptography startup, recently raised €49 million to bring fully homomorphic encryption to blockchain and AI applications. The company hit a $1 billion valuation, becoming the first FHE unicorn - a signal that investors believe the technology is approaching commercial viability. Zama's focus on blockchain is strategic: encrypted smart contracts could enable privacy-preserving decentralized finance applications where transactions remain confidential even though they're processed on a public ledger.

"Homomorphic encryption allows data to be encrypted and outsourced to commercial cloud environments for research and data-sharing purposes while protecting user or patient data privacy."

- CyberArk Security Research

Duality Technologies specializes in privacy-enhancing technologies for regulated industries, combining FHE with secure multiparty computation to enable secure data collaboration between organizations. Their platform lets companies and government agencies jointly analyze sensitive datasets without exposing underlying records - valuable for counter-terrorism, anti-money-laundering, and fraud prevention efforts that require information sharing.

Despite the progress, significant challenges remain. Performance overhead, while improving, still restricts FHE to workloads where latency isn't critical. Real-time applications - think video streaming, interactive gaming, high-frequency trading - remain out of reach.

Ciphertext expansion creates storage and bandwidth problems. If your encrypted data is 8× larger than the original, you need 8× more storage and network capacity. For large-scale analytics on petabyte datasets, those costs add up quickly.
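A back-of-the-envelope sketch shows how quickly that adds up. The 8× expansion factor is the figure quoted above. The 1-petabyte dataset and the 10 Gbps link are hypothetical inputs, and the function names are made up for this example.

```python
def expanded_size_tb(plaintext_tb: float, expansion: float = 8.0) -> float:
    """Storage needed once the data is encrypted, in terabytes."""
    return plaintext_tb * expansion


def transfer_hours(size_tb: float, link_gbps: float) -> float:
    """Hours to move size_tb over a link, ignoring protocol overhead."""
    bits = size_tb * 1e12 * 8            # decimal terabytes to bits
    seconds = bits / (link_gbps * 1e9)
    return seconds / 3600


plaintext_tb = 1000.0                    # hypothetical 1 PB dataset
ciphertext_tb = expanded_size_tb(plaintext_tb)

print(f"plaintext:  {plaintext_tb:.0f} TB, "
      f"{transfer_hours(plaintext_tb, 10):.0f} h at 10 Gbps")
print(f"ciphertext: {ciphertext_tb:.0f} TB, "
      f"{transfer_hours(ciphertext_tb, 10):.0f} h at 10 Gbps")
```

Storage and transfer time both scale linearly with the expansion factor, so any encoding that packs more values into each ciphertext pays off directly.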

Complexity poses an adoption barrier. Even with user-friendly libraries, building encrypted applications requires understanding new programming models and constraints. Developers must think carefully about which operations to perform, how to minimize computation depth, and how to manage cryptographic parameters. This learning curve slows deployment.

Security remains a moving target. FHE schemes are relatively new, and while they're believed to be secure, they haven't endured the decades of cryptanalysis that established standards like AES have. Parameter selection matters enormously - choose parameters too conservatively and performance suffers; too aggressively and security may be compromised. Organizations deploying FHE need cryptographic expertise to configure systems safely.

Integration with existing infrastructure isn't trivial. You can't just drop FHE into a legacy system and expect everything to work. Applications must be redesigned around encrypted computation, data pipelines reconfigured, and APIs rebuilt. This represents significant engineering investment, which naturally slows enterprise adoption.

Regulatory uncertainty adds friction. While privacy regulations like GDPR encourage technologies that minimize data exposure, they don't explicitly recognize or mandate homomorphic encryption. Legal frameworks haven't caught up with the technology, leaving companies uncertain about how regulators will treat encrypted processing for compliance purposes.

So when will homomorphic encryption go mainstream? The honest answer is "gradually, starting with specific use cases."

We're already seeing limited production deployments in healthcare analytics, financial fraud detection, and secure cloud services. Over the next 3-5 years, expect these to expand as performance improves and more developers gain expertise. Companies with stringent privacy requirements or regulatory pressure will adopt first - healthcare, finance, government.

Hardware acceleration will drive the next wave. Just as GPUs revolutionized machine learning by making previously impractical computations fast enough for real-world use, specialized FHE accelerators could do the same for encrypted computation. Intel, NVIDIA, and startups are all working on this. When you can run encrypted inference at 10× overhead instead of 200×, many more applications become viable.

Standardization reduces adoption friction. Once ISO and NIST standards solidify, enterprise architects will have clearer guidance on which schemes to use and how to implement them securely. Interoperability standards will let different FHE systems work together, preventing vendor lock-in and enabling ecosystem development.

Regulatory pressure could accelerate adoption. If privacy regulations begin explicitly requiring or incentivizing privacy-preserving computation techniques, companies will move faster. Some jurisdictions are already discussing "privacy by design" mandates that could favor technologies like FHE.

The quantum threat adds urgency. Many FHE schemes are believed to be quantum-resistant - they don't rely on factoring or discrete logarithms, which quantum computers can break. As organizations prepare for a post-quantum world, deploying FHE-based systems now provides both privacy benefits and quantum resilience.

Within a decade, homomorphic encryption will likely be common in specific domains - healthcare data sharing, confidential cloud computing, privacy-preserving AI - without necessarily becoming universal. Not every application needs encrypted processing, and for many use cases, simpler privacy techniques suffice. But for high-value scenarios where you absolutely must process sensitive data without exposing it, FHE is becoming the obvious choice.

The deeper significance of homomorphic encryption isn't just technical - it's philosophical. It changes the fundamental trust model of computing.

For decades, we've accepted that using someone's computational resources means trusting them with our data. You upload to the cloud, you trust the cloud. You use a web service, you trust the service. You send data to an AI model, you trust whoever runs the model. Trust is baked into the architecture of modern computing.

Homomorphic encryption breaks that assumption. You can use computational resources without trusting the provider. You can collaborate with other organizations on joint data analysis without revealing your data to them. You can build applications where even you, the developer, never see user data.

This has profound implications for how we architect systems. Today's cloud services are built on trust - access controls, audit logs, contractual obligations. Tomorrow's could be built on cryptographic guarantees. The cloud provider can't see your data because it's mathematically impossible, not because you trust them not to look.

That shift enables new forms of collaboration. Hospitals could pool patient data for research without violating privacy. Competitors could share market data to improve forecasting without revealing proprietary information. Governments could analyze encrypted communications for threats without conducting mass surveillance.

"Homomorphic encryption allows computations to be performed directly on encrypted data without first decrypting it. When the final result is decrypted, it matches the outcome that would have been produced if the operations had been conducted on the original, unencrypted data."

- Identity.com Research

It also changes the power dynamics around data. Right now, using powerful AI models often means sending your data to whoever controls the model - Google, OpenAI, Meta. With homomorphic encryption, you could send encrypted data to their models, get encrypted results back, and they'd never see what you were asking about. This preserves privacy while still leveraging centralized AI capabilities.

We're still in the early stages of figuring out what becomes possible when computation and access decouple. But the trajectory is clear: homomorphic encryption turns data privacy from a procedural problem (how do we make sure people follow the rules?) into a technical guarantee (the rules are enforced by mathematics, not policy).

Homomorphic encryption isn't vaporware anymore. It's not a laboratory curiosity. It's a maturing technology moving from specialized deployments toward broader commercial adoption.

The performance will keep improving - better algorithms, optimized libraries, hardware acceleration. The ecosystem will mature - standards will solidify, more developers will gain expertise, integration tools will smooth deployment. Applications will expand from healthcare and finance into other domains where privacy and utility must coexist.

Obstacles remain, certainly. The computational overhead may never reach zero, though it doesn't need to for most use cases. Complexity will persist - encrypted computing will always be harder than plaintext computing. And adoption takes time, especially for infrastructure technologies that require rethinking how systems are built.

But the direction is clear. We're moving toward a world where you can have both strong privacy and powerful computation, where sensitive data can be analyzed without being exposed, where the cloud doesn't need to see your secrets to process them.

The encryption revolution isn't coming. It's here, quietly transforming how we handle data in the places where privacy matters most. You might not notice it - that's the point. Your data stays encrypted, useful but unreadable, processed by computers that never see what they're computing.

That's the future taking shape: computation without observation, analysis without exposure, cloud services without trust. After decades of trade-offs between privacy and utility, we're finally building systems that deliver both.

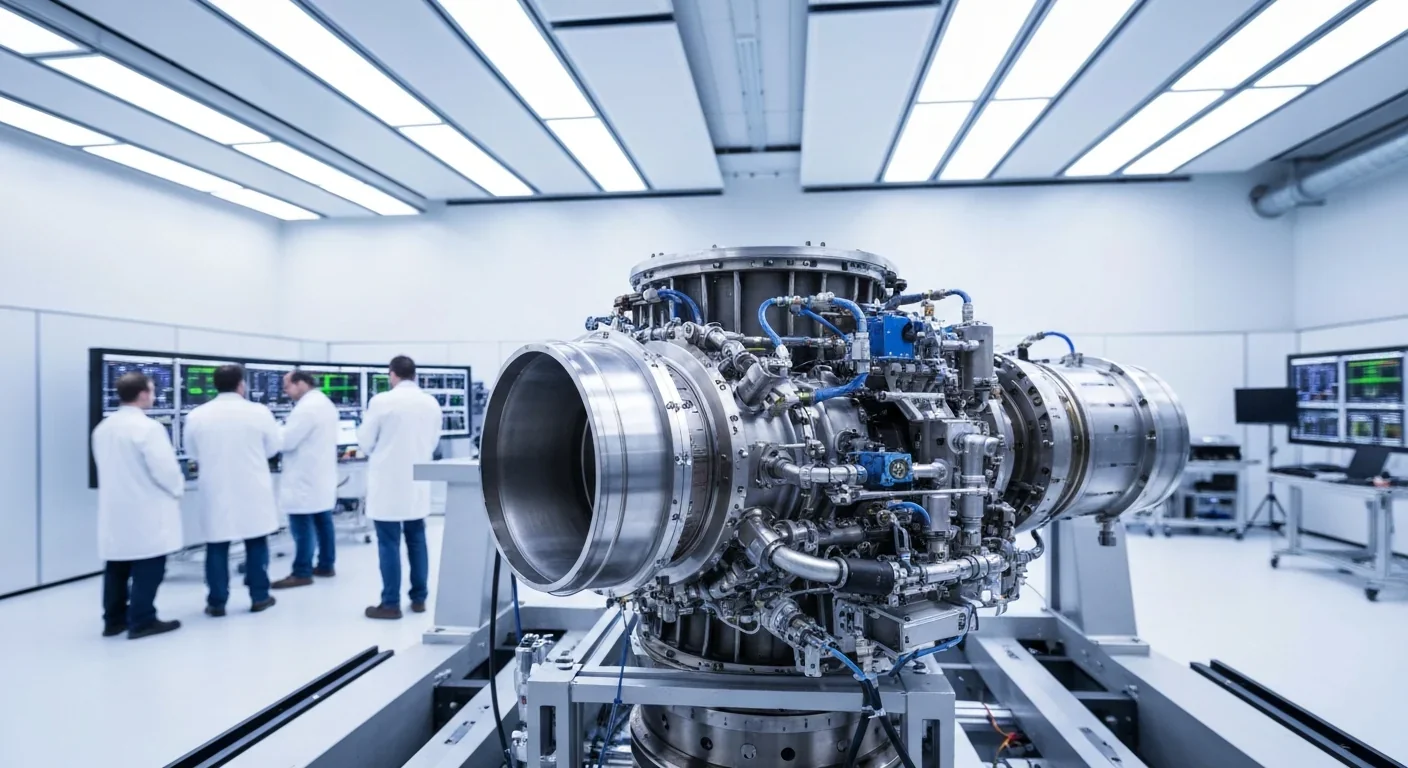

Rotating detonation engines use continuous supersonic explosions to achieve 25% better fuel efficiency than conventional rockets. NASA, the Air Force, and private companies are now testing this breakthrough technology in flight, promising to dramatically reduce space launch costs and enable more ambitious missions.

Triclosan, found in many antibacterial products, is reactivated by gut bacteria and triggers inflammation, contributes to antibiotic resistance, and disrupts hormonal systems - but plain soap and water work just as effectively without the harm.

AI-powered cameras and LED systems are revolutionizing sea turtle conservation by enabling fishing nets to detect and release endangered species in real-time, achieving up to 90% bycatch reduction while maintaining profitable shrimp operations through technology that balances environmental protection with economic viability.

The pratfall effect shows that highly competent people become more likable after making small mistakes, but only if they've already proven their capability. Understanding when vulnerability helps versus hurts can transform how we connect with others.

Leafcutter ants have practiced sustainable agriculture for 50 million years, cultivating fungus crops through specialized worker castes, sophisticated waste management, and mutualistic relationships that offer lessons for human farming systems facing climate challenges.

Gig economy platforms systematically manipulate wage calculations through algorithmic time rounding, silently transferring billions from workers to corporations. While outdated labor laws permit this, European regulations and worker-led audits offer hope for transparency and fair compensation.

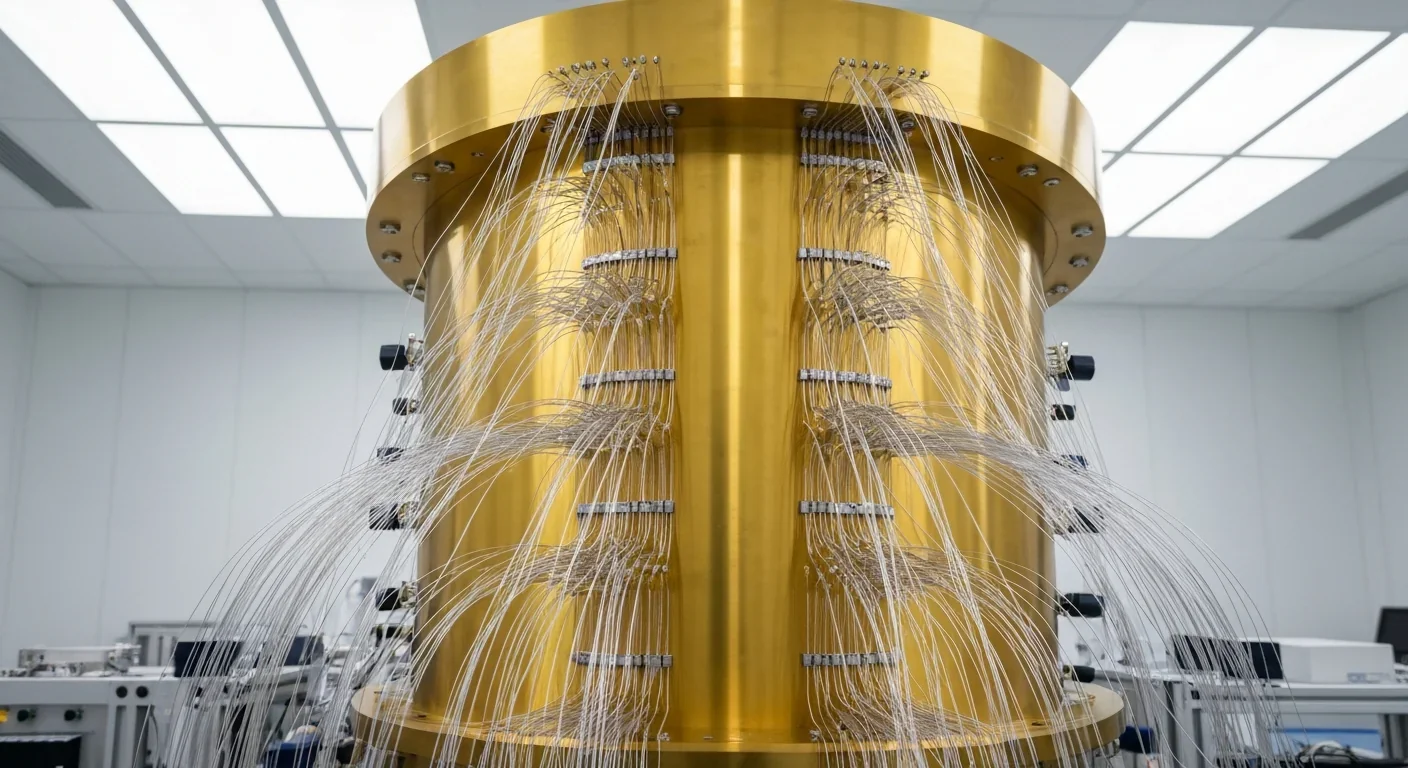

Quantum computers face a critical but overlooked challenge: classical control electronics must operate at 4 Kelvin to manage qubits effectively. This requirement creates engineering problems as complex as the quantum processors themselves, driving innovations in cryogenic semiconductor technology.