TL;DR: Hardware enclaves are transforming cloud security by protecting data during processing through trusted execution environments (TEEs). Unlike traditional encryption, confidential computing creates processor-level isolation that even cloud providers cannot breach, enabling secure AI training, multi-party computation, and regulatory compliance without requiring trust in infrastructure providers.

By 2030, analysts predict that over 70% of enterprise cloud workloads will incorporate confidential computing technology to protect data during processing. This represents a fundamental shift in how we think about cloud security. For decades, we've encrypted data at rest and in transit, but the moment data enters memory to be processed, it becomes vulnerable. Cloud providers, system administrators, and malicious insiders could theoretically access your most sensitive information. Hardware-based trusted execution environments are changing that calculus entirely, creating isolated computation zones where even the cloud provider can't peek inside.

Confidential computing addresses what security professionals call "data in use" - the moment when encrypted data must be decrypted to perform calculations. Traditional cloud security relies on trust: you trust that your cloud provider won't look at your data, that its employees follow proper procedures, and that its systems aren't compromised. AWS Nitro Enclaves, Intel Software Guard Extensions (SGX), and AMD Secure Encrypted Virtualization (SEV) are designed to remove that trust requirement through hardware-enforced isolation.

These technologies create what's essentially a vault inside your processor. When you run a computation in a trusted execution environment, the CPU itself ensures that code and data are isolated from everything else - including the operating system, the hypervisor, and even someone with physical access to the machine. The Confidential Computing Consortium, launched by the Linux Foundation in 2019, has brought together tech giants to standardize these approaches.

Think of it this way: traditional cloud security is like a locked filing cabinet in an office building. The building owner has a master key, the janitor can access the room, and a determined intruder might break the lock. Confidential computing is more like a sealed envelope that self-destructs if anyone except the intended recipient tries to open it, and that self-destruct functionality is built into the physics of the envelope itself.

The path to confidential computing wasn't a straight line. It emerged from both innovation and crisis. Intel introduced SGX in 2015, envisioning it primarily for digital rights management and secure key storage. Few predicted it would become foundational to cloud security architectures.

Then came 2018. Security researchers disclosed Spectre and Meltdown, devastating CPU vulnerabilities that exploited speculative execution to leak sensitive data across security boundaries. These weren't software bugs you could patch away - they were fundamental flaws in how modern processors optimize performance. The industry realized that hardware-level security problems required hardware-level solutions.

The CPU vulnerability crisis accelerated development of more robust trusted execution environments. AMD enhanced its Secure Encrypted Virtualization technology with SEV-SNP (Secure Nested Paging), adding cryptographic attestation that proves code hasn't been tampered with. ARM's TrustZone, which predates the crisis, covers mobile and IoT devices. Cloud providers built their own implementations: AWS Nitro Enclaves, Azure Confidential Computing, and Google Confidential VMs.

Previous technological shifts followed similar patterns. When cloud computing emerged, skeptics worried about data security - "How can we trust our data to someone else's servers?" Organizations developed encryption, access controls, and compliance certifications. But those solutions all assumed the cloud provider was benevolent. Confidential computing represents a more mature security model: trustless architecture where mathematical guarantees replace trust relationships.

At its core, a trusted execution environment creates an isolated region of memory that's encrypted and inaccessible to any process outside the enclave - including privileged software like operating systems or hypervisors. Here's what makes this possible.

Modern processors include dedicated security coprocessors that manage encryption keys in hardware. When you launch a confidential workload, the CPU generates unique encryption keys that exist only within the processor's protected memory. Your data is encrypted before it leaves the CPU to reach system RAM, and decrypted only when it returns to the secure enclave. Memory encryption happens at the memory controller level, making it transparent to software while providing robust protection.

Attestation is equally crucial. Before you send sensitive data to an enclave, you need proof that the right code is running in a genuine secure environment. The hardware generates a cryptographically signed report - essentially a receipt that says "this specific code is running in a verified enclave on authentic hardware." Remote attestation allows you to verify this receipt before trusting the environment with your data.
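The attestation flow described above can be sketched in a few lines. This is a simplified illustration, not any vendor's actual protocol: the names `HARDWARE_KEY`, `generate_report`, and `verify_report` are hypothetical, and an HMAC stands in for the asymmetric, vendor-certified signature that real hardware would produce.

```python
import hashlib
import hmac
import json
import secrets

# Assumption: stand-in for a key held by the hardware. Real TEEs sign with
# an asymmetric key chained to the vendor, so the verifier never holds the
# signing secret. HMAC is used here only to stay in the standard library.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(code: bytes) -> str:
    """Hash of the code loaded into the enclave (its 'measurement')."""
    return hashlib.sha256(code).hexdigest()


def generate_report(code: bytes, nonce: str) -> dict:
    """Simulated hardware step: sign the measurement together with a nonce."""
    body = {"measurement": measure(code), "nonce": nonce}
    payload = json.dumps(body, sort_keys=True).encode()
    signature = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def verify_report(report: dict, expected_measurement: str, nonce: str) -> bool:
    """Remote party's check before any sensitive data is sent."""
    payload = json.dumps(report["body"], sort_keys=True).encode()
    expected_sig = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_sig, report["signature"]):
        return False  # report was altered or not signed by the trusted key
    if report["body"]["nonce"] != nonce:
        return False  # stale or replayed report
    return hmac.compare_digest(report["body"]["measurement"], expected_measurement)


if __name__ == "__main__":
    enclave_code = b"def analyze(records): ..."
    fresh_nonce = secrets.token_hex(16)
    report = generate_report(enclave_code, fresh_nonce)
    print(verify_report(report, measure(enclave_code), fresh_nonce))
```

The nonce is what keeps an old, valid report from being replayed; the measurement check is what ties the receipt to one specific build of the code.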

"Enclaves are fully isolated virtual machines, hardened, and highly constrained. Even a root user or an admin user on the instance will not be able to access or SSH into the enclave."

- AWS Nitro Enclaves Documentation

AWS Nitro Enclaves take this further by creating fully isolated virtual machines with no persistent storage, no interactive access, and no external networking. Even the root user on the parent instance can't SSH into an enclave or view its memory. The system integrates with AWS Key Management Service, which verifies attestation documents before releasing encryption keys.
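The idea of releasing keys only after attestation checks out can be illustrated with a small policy function. This is a sketch under stated assumptions, not the AWS KMS API: `AttestationDocument`, `KeyPolicy`, and `release_key` are hypothetical names, and the document is assumed to have already passed signature verification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AttestationDocument:
    """Hypothetical, already signature-verified summary of an enclave."""
    image_measurement: str  # hash identifying the enclave image
    debug_mode: bool        # assumption: debug builds never receive secrets


@dataclass(frozen=True)
class KeyPolicy:
    """Which enclave images are allowed to receive the key."""
    allowed_measurements: frozenset


def release_key(doc: AttestationDocument, policy: KeyPolicy, key: bytes) -> Optional[bytes]:
    """Return the key only if the enclave matches the policy, else None."""
    if doc.debug_mode:
        return None
    if doc.image_measurement not in policy.allowed_measurements:
        return None
    return key


if __name__ == "__main__":
    policy = KeyPolicy(frozenset({"a1b2c3"}))
    print(release_key(AttestationDocument("a1b2c3", False), policy, b"secret") is not None)
    print(release_key(AttestationDocument("ffffff", False), policy, b"secret") is not None)
```

The design point is that the key service, not the parent instance, makes the decision: a modified enclave image produces a different measurement and simply never receives the key.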

Intel SGX works differently, creating smaller enclaves within a single process rather than isolating entire VMs. This allows fine-grained protection of specific functions while maintaining normal application performance. AMD's SEV-SNP encrypts entire virtual machine memory, making it ideal for lift-and-shift migrations where you want to protect existing workloads without code changes.

The beauty of these approaches is that they're hardware-enforced. Software vulnerabilities, social engineering, and insider threats have a much harder time bypassing protections that exist at the silicon level. The processor itself becomes your security perimeter.

Financial services were early adopters, and for good reason. When multiple banks collaborate on fraud detection, they need to pool data without exposing individual transaction details to competitors. Multi-party computation using Nitro Enclaves allows this: each bank sends encrypted data to a neutral enclave, which performs the analysis and returns aggregate results without any party seeing the others' raw data.
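A toy version of the fraud-detection scenario shows the shape of the idea: each bank contributes its own list, and only an aggregate leaves the function. The function name, the bank names, and the account IDs are made up for illustration, and decryption and attestation are left out entirely; in a real deployment this logic would run inside the enclave.

```python
from collections import Counter


def shared_flag_summary(submissions, min_banks=2):
    """Count accounts flagged by at least `min_banks` different banks.

    `submissions` maps a bank name to the collection of account IDs it
    flagged. Only counts are returned, so no bank learns another bank's list.
    """
    counts = Counter()
    for flagged in submissions.values():
        counts.update(set(flagged))  # each bank counts once per account
    shared = sum(1 for n in counts.values() if n >= min_banks)
    return {
        "banks": len(submissions),
        "accounts_flagged_by_multiple_banks": shared,
    }


if __name__ == "__main__":
    submissions = {
        "bank_a": {"acct-1", "acct-2"},
        "bank_b": {"acct-2", "acct-3"},
        "bank_c": {"acct-2", "acct-3", "acct-4"},
    }
    print(shared_flag_summary(submissions))
```

What the enclave is allowed to return is itself a policy decision: the narrower the output, the less any participant can infer about the others' inputs.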

Cryptocurrency exchanges use enclaves to protect private keys. Traditional approaches store keys in hardware security modules (HSMs), which are expensive, inflexible, and create operational bottlenecks. Running key management in confidential computing environments provides equivalent security at cloud scale and cost.

Healthcare is another natural fit. Hospitals want to use cloud-based AI to analyze patient records, but HIPAA compliance makes this challenging. Confidential computing allows processing of protected health information in the cloud while ensuring the cloud provider can't access patient data. Researchers can train machine learning models on confidential medical data without ever seeing individual records.

AI and machine learning represent perhaps the most compelling use case. Training models requires massive compute resources, which typically means cloud infrastructure. But if you're developing proprietary AI for competitive advantage, sending your training data and model architecture to a cloud provider creates intellectual property risks. Confidential computing for AI training protects both your data and your algorithms.

Consider a pharmaceutical company using AI to discover new drugs. Their molecular databases and ML models represent billions in R&D investment. Running this in a Google Confidential VM means they can leverage Google's infrastructure without exposing their proprietary compounds or algorithms to Google. The attestation process proves their code is running in a genuine secure enclave before any data is processed.

Government and defense applications are emerging too. Intelligence agencies need to process classified data, but can't use traditional cloud services because of security requirements. Confidential computing enables cloud-like infrastructure for sensitive government workloads by removing the need to trust the infrastructure provider.

Nothing in security is perfect, and confidential computing has real limitations you need to understand before betting your security strategy on it.

Side-channel attacks remain a persistent threat. Even if attackers can't read memory directly, they can sometimes infer information by measuring timing, power consumption, or electromagnetic emissions. Recent research demonstrated physical attacks against secure enclaves from Nvidia, AMD, and Intel by using precise voltage manipulation to cause errors that leak data.
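Timing is the easiest of these channels to illustrate in code. The sketch below contrasts a comparison that returns at the first mismatching byte with Python's `hmac.compare_digest`, which is designed to reduce that kind of leak. It is a software-level example only and says nothing about the physical attacks mentioned above; `naive_equal` and `constant_time_equal` are illustrative names.

```python
import hmac


def naive_equal(secret: bytes, guess: bytes) -> bool:
    """Leaky: stops at the first mismatch, so run time depends on how
    many leading bytes of the guess are correct."""
    if len(secret) != len(guess):
        return False
    for a, b in zip(secret, guess):
        if a != b:
            return False
    return True


def constant_time_equal(secret: bytes, guess: bytes) -> bool:
    """Same answer, using the standard library's timing-resistant compare."""
    return hmac.compare_digest(secret, guess)


if __name__ == "__main__":
    token = b"s3cr3t-token"
    for guess in (b"s3cr3t-token", b"s3cr3t-tokeX", b"Xxxxxxxxxxxx"):
        print(naive_equal(token, guess), constant_time_equal(token, guess))
```

Both functions return identical results; the difference is only in how much an observer with a stopwatch could learn, which is exactly why code inside an enclave still needs to be written defensively.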

The Foreshadow attack in 2018 showed that speculative execution vulnerabilities could break SGX's isolation guarantees. While vendors patched these specific flaws, the cat-and-mouse game continues. Modern hardware security research identifies new attack vectors constantly.

Performance overhead is another consideration. Encryption and isolation aren't free. Depending on your workload and implementation, you might see performance degradation of 10-50% compared to running the same computation in a standard environment. GPU-based confidential computing faces particular challenges, since GPU workloads often involve massive data transfers that need encryption.
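To see what that range means for a budget, here is a back-of-the-envelope calculation. It assumes the 10-50% figure translates directly into extra run time, which is a simplification; the job size and the hourly rate are made-up inputs.

```python
def confidential_cost(baseline_hours, hourly_rate, overhead):
    """Estimate run time and cost when a job takes `overhead` longer.

    `overhead` is a fraction: 0.10 for 10%, 0.50 for 50%.
    Returns a (hours, cost) tuple.
    """
    hours = baseline_hours * (1 + overhead)
    return hours, hours * hourly_rate


if __name__ == "__main__":
    # Hypothetical job: 100 compute-hours at 3.00 per hour.
    for overhead in (0.10, 0.50):
        hours, cost = confidential_cost(100, 3.0, overhead)
        print(f"{overhead:.0%} overhead: {hours:.0f} h, cost {cost:.2f}")
```

For a 100-hour job the estimate lands between roughly 110 and 150 hours, so the gap between the best and worst case is large enough to justify measuring your own workload in a pilot.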

Complexity creates security risks of its own. Implementing confidential computing correctly requires deep expertise in cryptography, attestation, and secure system design. A misconfigured enclave might provide a false sense of security while leaving vulnerabilities exposed. The technology is still maturing, and best practices are evolving.

Compatibility constraints matter too. AWS Nitro Enclaves have no persistent storage, no interactive access, and no external networking by design. That's great for security, but it means substantial application refactoring for many use cases. You can't just take an existing application and drop it into an enclave without changes.

Trust boundaries shift but don't disappear. While you no longer need to trust your cloud provider, you still need to trust the hardware manufacturer. If Intel's chip fabrication process is compromised, SGX's security guarantees evaporate. Nation-state actors with resources to conduct supply chain attacks remain a concern for the most sensitive applications.

European data sovereignty requirements are pushing confidential computing adoption faster than technology considerations alone would. Under GDPR, EU regulators are increasingly skeptical of data transfers to US cloud providers, even with encryption. The 2020 Schrems II ruling invalidated the EU-US Privacy Shield framework, leaving companies scrambling for alternatives.

Confidential computing and data sovereignty offer a solution: you can use non-sovereign cloud infrastructure while maintaining cryptographic guarantees that the cloud provider can't access your data. This is becoming crucial for European companies that need global cloud scale but face regulatory constraints on data location.

The differences between HIPAA and GDPR create similar pressures in healthcare. Both regulations require protecting personal information, but their specific requirements differ. Confidential computing provides technical controls that support compliance in both jurisdictions: data is protected during processing, access is cryptographically verified, and audit trails prove who accessed what and when.

Financial regulators are paying attention too. The Basel Committee on Banking Supervision issued guidance on operational resilience that requires banks to protect customer data even from their own infrastructure providers. Confidential computing gives banks a way to meet these requirements while still leveraging cloud economics.

China's Personal Information Protection Law (PIPL) and the California Consumer Privacy Act (CCPA) add to the regulatory complexity. Companies operating globally face a patchwork of privacy laws with conflicting requirements. Technical controls that protect data at the hardware level provide a common foundation for multi-jurisdictional compliance.

"74% of organizations view confidential computing as a strategic imperative for secure AI and data collaboration, driven largely by compliance needs."

- Linux Foundation Study, 2024

Industry analysts expect regulatory requirements to drive adoption faster than technology pull will.

Every major cloud provider now offers confidential computing, but their approaches differ significantly based on their hardware partnerships and target use cases.

AWS Nitro Enclaves run on AWS's custom Nitro hardware, which offloads virtualization functions from the CPU to dedicated cards. This architecture enables stronger isolation than software-based approaches. Nitro supports both Intel and AMD processors, giving AWS flexibility in its supply chain.

Microsoft Azure was the first major cloud to offer Intel SGX, in 2017. Azure Confidential Computing has since expanded to AMD SEV-SNP and Nvidia GPUs with confidential computing capabilities. Its Confidential Containers let you run containerized workloads in enclaves without code changes.

Google Cloud Confidential VMs focus on AMD SEV-SNP, offering memory encryption for entire VMs with minimal performance impact. Google recently added live migration for confidential VMs, addressing a previous limitation where maintenance required downtime.

IBM Cloud focuses on financial services and highly regulated industries, offering confidential computing through both Intel SGX and IBM's proprietary Secure Service Containers. Their sovereign cloud offerings combine confidential computing with contractual and organizational controls for maximum data protection.

Beyond the hyperscalers, specialized vendors are emerging. Duality Technologies provides privacy-enhanced computation platforms for collaborative analytics. OpenMetal offers bare-metal confidential computing for organizations that need cloud-like infrastructure with on-premises control.

The open-source community is critical too. The Confidential Computing Consortium has over 100 members working on standardization and open implementations. Projects like Enarx aim to make confidential computing application-portable across different TEE technologies.

Market projections are staggering. Analysts forecast the confidential computing market will grow from roughly $5 billion in 2024 to $451 billion by 2034, a compound annual growth rate of around 50% or more (the rounded endpoints quoted here work out to roughly 57% over ten years). Other estimates are more conservative but still predict rapid growth driven by AI workloads and regulatory requirements.
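The growth rate implied by those two endpoints can be checked with the standard compound annual growth rate formula, (end / start)^(1 / years) - 1. The snippet below uses the rounded figures from the paragraph above, so treat the result as a sanity check rather than the analysts' own number; `cagr` is just an illustrative helper.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.5 means 50% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Rounded endpoints from the text: about $5B in 2024, $451B in 2034.
    rate = cagr(5.0, 451.0, 10)
    print(f"Implied CAGR: {rate:.1%}")
```

With these inputs the rate comes out closer to 57% than 50%, which suggests the published rate rests on a less rounded 2024 baseline than "roughly $5 billion".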

Confidential computing fits naturally into zero trust security architectures. Zero trust's core principle is "never trust, always verify" - don't assume anything inside your security perimeter is safe. Traditional cloud security violates this principle by trusting the infrastructure provider.

Zero trust and confidential computing create a powerful combination. Cryptographic attestation verifies that code is running in a genuine secure environment before granting access to data or keys. Every access request is authenticated, authorized, and encrypted, with hardware providing the root of trust.

This matters especially for AI infrastructure. As organizations build AI data centers with shared GPU resources, they need assurance that one tenant's model training can't leak data to another tenant's workload. Hardware isolation provides this guarantee in ways that software containers cannot.

Financial services are pioneering zero trust architectures built on confidential computing. Banks use multi-party computation to share threat intelligence without exposing customer data, with attestation proving that all participants are running approved analysis code in verified enclaves.

The combination gets particularly interesting for edge computing and IoT. Devices at the edge often can't maintain constant connection to a central security system for authentication and authorization. ARM TrustZone allows edge devices to make security decisions locally while maintaining cryptographic proof of their actions for later audit.

The next frontier is confidential computing in a post-quantum world. Current attestation mechanisms rely on cryptographic algorithms that quantum computers could potentially break. Researchers are working to integrate post-quantum cryptography into hardware enclaves before large-scale quantum computers become practical.

This isn't just theoretical. Intelligence agencies and organizations with long-term secrets need to protect today's data against tomorrow's quantum computers. Encrypted data stolen today could be decrypted in a decade when quantum computing matures. Post-quantum confidential computing aims to keep that data protected against such future attacks.

Standardization remains a challenge. Each vendor's TEE implementation has different APIs, attestation mechanisms, and security properties. The Confidential Computing Consortium is working on common standards, but we're years away from true portability. An application written for Intel SGX can't simply run in AWS Nitro Enclaves without significant changes.

GPU confidential computing is advancing rapidly. Nvidia's recent data-center GPUs already include confidential computing capabilities, and upcoming architectures promise to address the performance challenges that currently limit confidential AI training.

Developer tools are improving. Early confidential computing required deep expertise in cryptography and low-level system programming. Newer platforms like Secret Network's AI SDK abstract away complexity, letting developers build confidential applications with familiar tools.

The open-source ecosystem is critical for adoption. Proprietary confidential computing solutions create vendor lock-in and limit security research. Open implementations allow independent verification of security properties and enable innovation from the broader community.

For security professionals and IT decision-makers, confidential computing represents both an opportunity and a challenge. The opportunity is clear: truly trustless cloud computing that can satisfy the most stringent security and compliance requirements. The challenge is understanding when confidential computing is worth its costs and complexity.

Start by identifying your most sensitive workloads - the data and processing that pose the greatest risk if exposed. These are your candidates for confidential computing. Financial transactions, health records, AI training data, and cryptographic keys top the list for most organizations.

Evaluate your regulatory requirements. If you're subject to GDPR, HIPAA, or data sovereignty regulations, confidential computing may be mandatory rather than optional. Understanding your compliance landscape helps justify the investment.

Consider your threat model. If you're primarily worried about external hackers, traditional cloud security might suffice. If insider threats, nation-state actors, or malicious cloud providers concern you, confidential computing provides defenses that other approaches cannot.

Assess vendor offerings against your specific needs. AWS, Azure, and Google Cloud offer different implementations with different trade-offs. Test with pilot projects before committing to production deployments.

Develop in-house expertise. Confidential computing is still a specialized field. Training your security and development teams on trusted execution environments, attestation, and secure enclave development pays dividends as adoption accelerates.

The trajectory is clear: as AI workloads grow, as regulations tighten, and as cyber threats evolve, the demand for confidential computing will accelerate. Organizations that develop capabilities now will be positioned to leverage cloud infrastructure securely and compliantly. Those that wait may find themselves constrained by legacy architectures that can't meet tomorrow's security requirements.

We're witnessing the evolution from "trust but verify" to "verify then trust" - from security models based on organizational relationships to security models based on mathematical proofs. Hardware enclaves aren't perfect, but they represent our best answer yet to an increasingly urgent question: how do we use powerful cloud infrastructure without surrendering control of our most valuable data?

Rotating detonation engines use continuous supersonic explosions to achieve 25% better fuel efficiency than conventional rockets. NASA, the Air Force, and private companies are now testing this breakthrough technology in flight, promising to dramatically reduce space launch costs and enable more ambitious missions.

Triclosan, found in many antibacterial products, is reactivated by gut bacteria and triggers inflammation, contributes to antibiotic resistance, and disrupts hormonal systems - but plain soap and water work just as effectively without the harm.

AI-powered cameras and LED systems are revolutionizing sea turtle conservation by enabling fishing nets to detect and release endangered species in real-time, achieving up to 90% bycatch reduction while maintaining profitable shrimp operations through technology that balances environmental protection with economic viability.

The pratfall effect shows that highly competent people become more likable after making small mistakes, but only if they've already proven their capability. Understanding when vulnerability helps versus hurts can transform how we connect with others.

Leafcutter ants have practiced sustainable agriculture for 50 million years, cultivating fungus crops through specialized worker castes, sophisticated waste management, and mutualistic relationships that offer lessons for human farming systems facing climate challenges.

Gig economy platforms systematically manipulate wage calculations through algorithmic time rounding, silently transferring billions from workers to corporations. While outdated labor laws permit this, European regulations and worker-led audits offer hope for transparency and fair compensation.

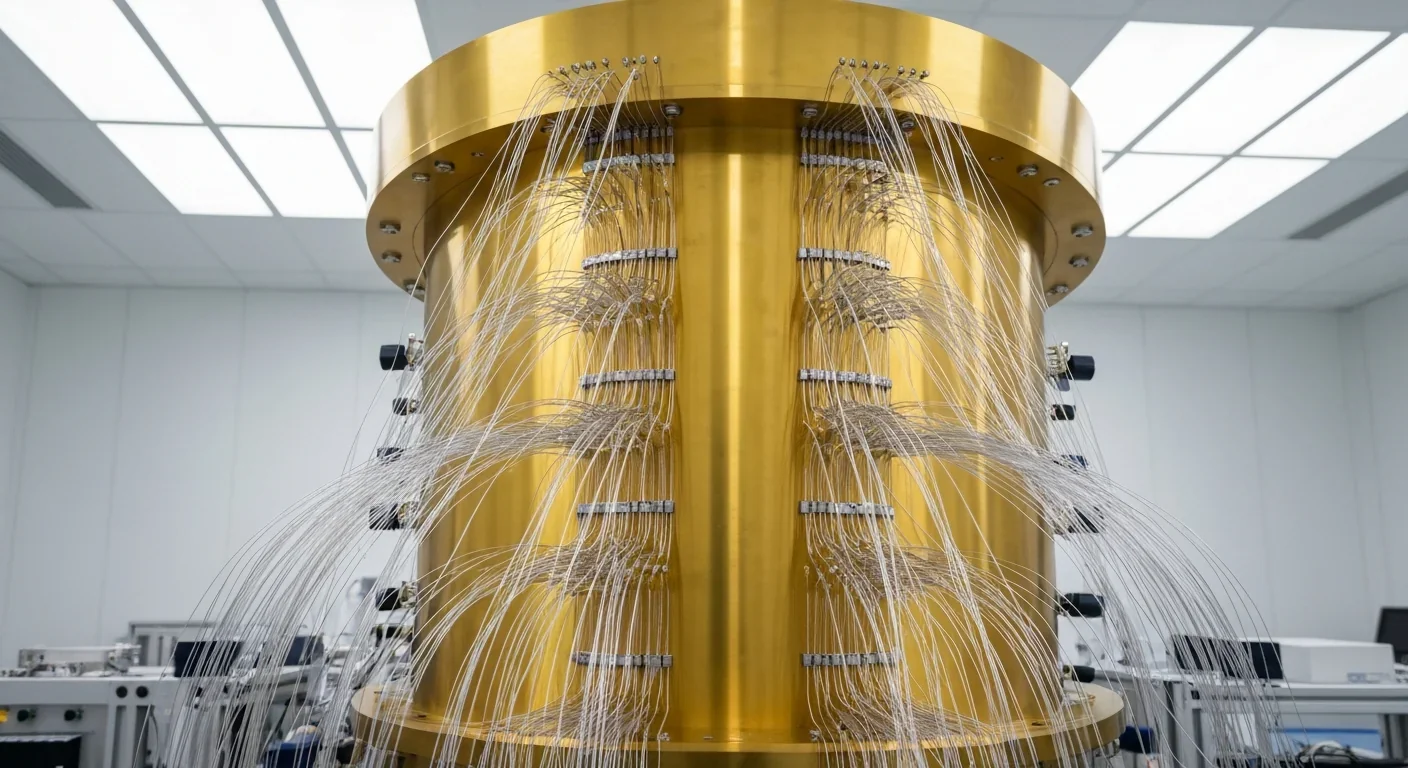

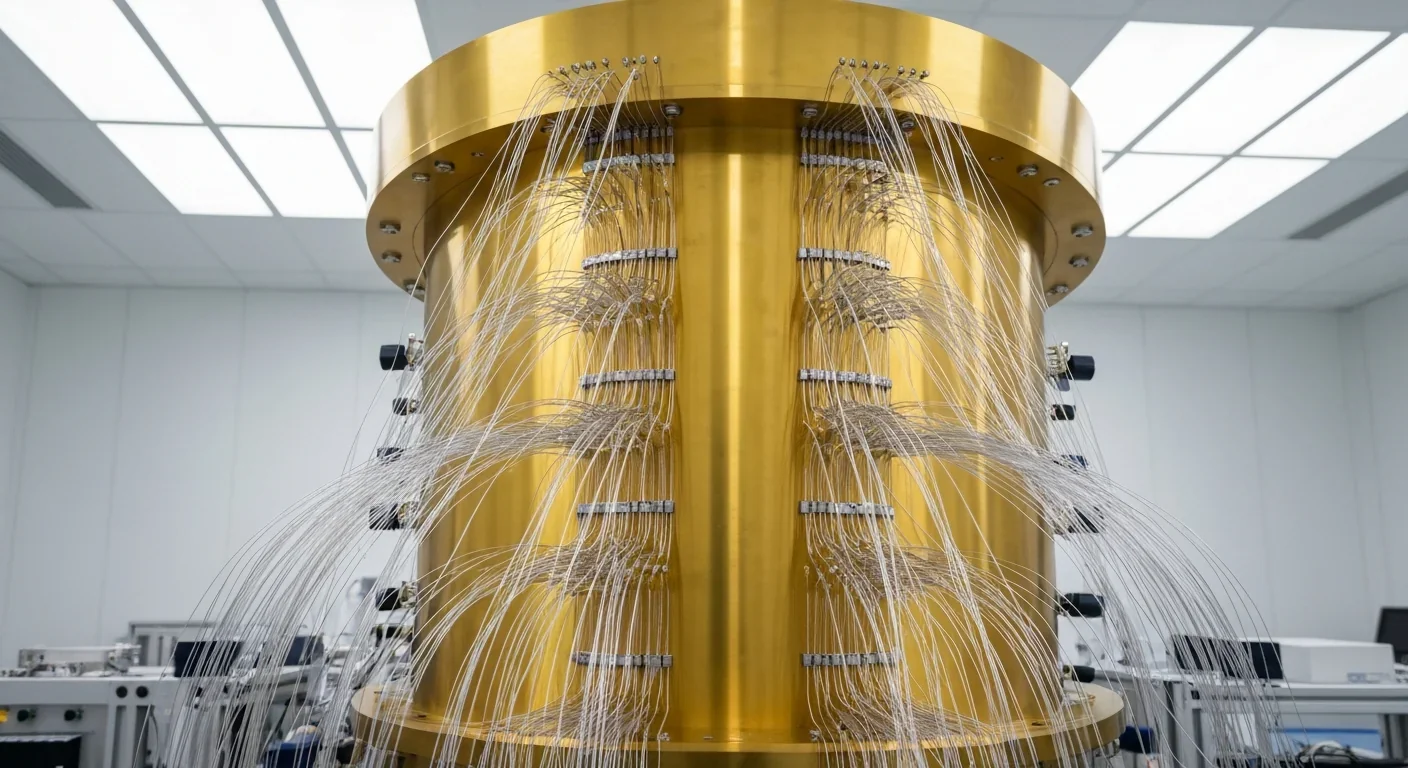

Quantum computers face a critical but overlooked challenge: classical control electronics must operate at 4 Kelvin to manage qubits effectively. This requirement creates engineering problems as complex as the quantum processors themselves, driving innovations in cryogenic semiconductor technology.