Cache Coherence Protocols: MESI and MOESI Explained

TL;DR: Decoherence - the rapid collapse of quantum states due to environmental interference - is the single biggest obstacle to practical quantum computing. Despite recent breakthroughs in error correction and qubit design, coherence times remain measured in milliseconds, forcing researchers to race against physics itself.

Every few months, another headline announces that quantum supremacy is finally here. Google processes calculations in minutes that would take classical computers millennia. IBM unveils roadmaps promising hundreds of logical qubits by 2029. Startups raise billions betting on a quantum future. But buried in the fine print of every announcement is a stubborn truth that threatens to undermine it all: qubits can't stay quantum.

The problem is called decoherence, and it's not just an engineering hiccup. It's a fundamental confrontation between quantum mechanics and thermodynamics. While quantum computers promise to revolutionize drug discovery, crack encryption, and simulate molecular interactions with unprecedented accuracy, they can only do so if qubits maintain their delicate quantum states long enough to complete calculations. Right now, most can't: in the leading superconducting designs, quantum information evaporates in fractions of a second, destroyed by the very environment meant to protect it.

To understand why decoherence is so devastating, you need to grasp what makes quantum computers powerful in the first place. Classical computers store information as bits: ones or zeros, on or off. Quantum computers use qubits, which can exist in a superposition, a weighted combination of one and zero, until they are measured. Entangle multiple qubits together and the state space grows exponentially: n qubits are described by 2^n amplitudes. Algorithms that steer those amplitudes so that wrong answers cancel and right ones reinforce can solve certain problems that would take classical computers longer than the age of the universe.
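A classical simulation makes the size of a multi-qubit state space concrete: it must store one complex amplitude for every n-bit string. The sketch below is plain Python, and the function name is illustrative. It builds the state that putting every qubit into an equal superposition would produce, then counts the amplitudes. The memory estimate assumes 16 bytes per amplitude (double-precision complex). A real quantum computer never hands you this list. Measuring it returns a single bit string, which is why quantum algorithms must rely on interference rather than reading out every branch.

```python
import math

def uniform_superposition(n_qubits: int) -> list[complex]:
    """State vector after putting each of n qubits into an equal superposition
    of 0 and 1: one amplitude per n-bit string, all with equal weight."""
    dim = 2 ** n_qubits
    amp = 1.0 / math.sqrt(dim)          # normalized so probabilities sum to 1
    return [complex(amp, 0.0)] * dim

for n in (1, 2, 10, 16):
    state = uniform_superposition(n)
    total_prob = sum(abs(a) ** 2 for a in state)
    print(f"{n:>2} qubits: {len(state):>7,} amplitudes, total probability {total_prob:.6f}")

# Memory a classical simulator would need for 50 qubits at 16 bytes per amplitude.
print(f"50 qubits: about {2 ** 50 * 16 / 1e15:.0f} PB of amplitudes")
```

Each added qubit doubles the list. That is why brute-force classical simulation stalls somewhere around a few dozen qubits.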

But superposition and entanglement are spectacularly fragile. They exist only when qubits remain isolated from their environment. The moment a qubit interacts with anything outside the quantum system - a stray photon, thermal vibration, electromagnetic noise - its quantum state collapses into a classical one. This is decoherence, and it happens fast. In superconducting qubits, the kind Google and IBM use, coherence times hover around microseconds to milliseconds. Trapped ion qubits fare better, maintaining coherence for minutes, but that's still not enough for the complex calculations quantum computers are supposed to tackle.

Decoherence is like trying to solve a Rubik's cube while the colors fade in real time - every operation must complete before the quantum information decays into noise.

The speed at which decoherence strikes means that quantum processors are racing against the clock. Every gate operation, every entanglement, every measurement must happen before the quantum information decays. Miss that window, and your calculation collapses into noise.

The physics behind decoherence is brutally simple: quantum systems become entangled with their environment, and once that happens, the system's quantum behavior leaks away. Imagine a qubit as a spinning coin, hovering between heads and tails. In an ideal world, it stays suspended until you catch it. In reality, air currents, vibrations, electromagnetic fields - anything that touches the coin - nudges it toward one side or the other, destroying the superposition before you're ready to observe it.

For superconducting qubits, which operate at temperatures near absolute zero, the dominant threats are noise sources in and around the chip. Flux noise, with its characteristic 1/f spectrum, arises from defects in materials and magnetic field fluctuations. Charge noise comes from electrons hopping between trap states in the substrate. Even at 10 millikelvin - colder than outer space - thermal energy isn't zero, and stray quasiparticles (broken Cooper pairs) can tunnel across the qubits' Josephson junctions, introducing errors.

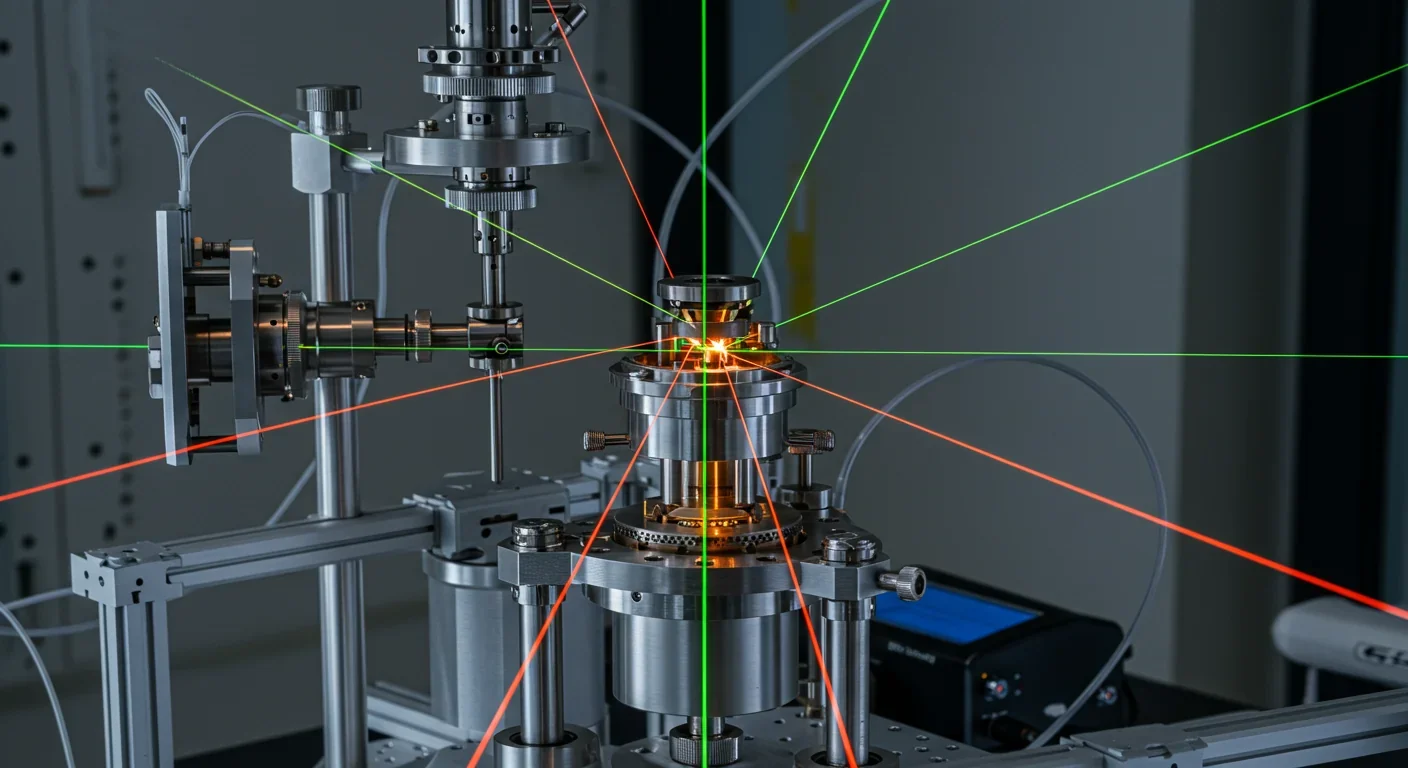

Trapped ion qubits face different enemies. Ions sit in electromagnetic traps, isolated from most environmental disturbances, which is why they achieve coherence times measured in minutes. But they're not invincible. When ions are transported between trap zones, nonuniform magnetic fields induce phase shifts that ruin coherence. Noise in the lasers used to control and read out the qubits introduces additional errors. Even the act of measuring a qubit disturbs it, collapsing superposition and injecting entropy back into the system.

"Decoherence also proves to be challenging to eliminate, and is caused when the qubits interact with the external environment undesirably."

- Wikipedia, Trapped-ion quantum computer

And then there's the third law of thermodynamics. Recent theoretical work shows that preparing pure quantum states requires infinite thermodynamic resources. In practice, this means any realistic quantum computer will always operate with mixed states - qubits that aren't perfectly isolated, carrying residual entanglement with their environment. The purer you want your qubits, the more energy you must expend to cool and isolate them, and even then, perfection remains out of reach.

Let's put some numbers on the table. The best superconducting qubits today achieve coherence times of one to four milliseconds. That sounds short, but it's a dramatic improvement. A decade ago, coherence times were measured in tens of microseconds. IBM's modular architecture, using tunable couplers to connect chips, maintains low error rates within those few-millisecond windows. Google's Sycamore processor, which famously claimed quantum supremacy in 2019, operated with qubits that decohered in roughly 20 microseconds.

Trapped ion qubits tell a different story. Hyperfine qubits, encoded in two hyperfine levels of an ion's electronic ground state (split by the interaction between the electron and nuclear spins), have theoretical lifetimes of thousands to millions of years. In practice, environmental coupling limits coherence to minutes, still orders of magnitude longer than superconducting qubits. But that advantage comes at a cost: gate operations in trapped ion systems are slower, limiting the number of operations per second.

New qubit designs are pushing boundaries. Researchers at Argonne National Laboratory recently demonstrated a single-electron charge qubit on a solid neon surface with a coherence time of 0.1 milliseconds - a thousand-fold improvement over previous charge qubits. The secret? Neon's chemical inertness shields the electron from the charge noise that normally destroys coherence in microseconds. Meanwhile, molecular qubits embedded in asymmetric host crystals have achieved coherence times of 10 microseconds, five times longer than in symmetric hosts, by using crystal asymmetry to deflect disruptive magnetic fields.

Even more exotic approaches are emerging. Cat qubits, which encode information in superpositions of coherent states in microwave cavities, exhibit bit-flip times of tens of seconds - far longer than conventional qubits. But they remain vulnerable to phase-flip errors, which occur on much shorter timescales.

In 2024, IBM showed that qLDPC codes could protect 12 logical qubits with just 288 physical qubits, 24 per logical qubit, where the surface code would need nearly 3,000: a roughly tenfold efficiency gain that makes fault-tolerant quantum computing suddenly look feasible.

Since perfect isolation is impossible, the quantum computing industry has bet everything on error correction. The idea is conceptually simple: encode a single logical qubit across many physical qubits, then continuously monitor and correct errors before they accumulate. If you can catch errors faster than they occur, you can, in theory, perform arbitrarily long calculations.
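The majority-vote idea behind error correction shows up in its simplest classical cousin, a three-bit repetition code. The sketch below is only an analogy, and its function names are illustrative. A real quantum code cannot copy a qubit, because the no-cloning theorem forbids it; instead it measures parities between qubits. A three-qubit code also only catches bit flips. Suppose each bit flips independently with probability p. The logical bit then fails only when two or more bits flip, so the logical error rate is 3p^2 - 2p^3, which is smaller than p whenever p < 1/2.

```python
import random

def encode(bit: int) -> list[int]:
    """Store one logical bit in three physical bits."""
    return [bit, bit, bit]

def apply_noise(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]

def decode(bits: list[int]) -> int:
    """Majority vote: recovers the logical bit if at most one bit flipped."""
    return 1 if sum(bits) >= 2 else 0

def logical_error_rate(p: float, trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of how often the decoded bit is wrong."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        bit = rng.randint(0, 1)
        if decode(apply_noise(encode(bit), p, rng)) != bit:
            failures += 1
    return failures / trials

for p in (0.01, 0.05, 0.2):
    exact = 3 * p**2 - 2 * p**3          # probability that two or three bits flip
    print(f"physical p={p:<5} logical ~{logical_error_rate(p):.5f} (exact {exact:.5f})")
```

The gap between physical and logical error rates widens quickly as p shrinks. That quadratic suppression is the basic effect that far more elaborate quantum codes, loosely speaking, build on.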

But the overhead is staggering. Depending on the target error rate, surface codes can need hundreds to thousands of physical qubits per logical qubit. In 2024, IBM showed that quantum low-density parity-check (qLDPC) codes could protect 12 logical qubits with 288 physical qubits, or 24 per logical qubit, where a surface code offering the same protection would need nearly 3,000: roughly a tenfold improvement in efficiency. Google demonstrated in 2023 that growing a surface-code logical qubit from 17 to 49 physical qubits lowered its error rate, a modest gain but evidence that error correction actually works in practice.

Microsoft and Quantinuum pushed even further, creating four logical qubits from just 30 physical qubits using active syndrome extraction in a trapped-ion system. These logical qubits achieved error rates up to 800 times lower than their physical counterparts, a stunning validation of error correction theory.

Yet even with these advances, scaling remains daunting. IBM's 2029 roadmap targets 200 logical qubits performing 100 million gate operations. If each logical qubit requires 24 physical qubits - and that's an optimistic estimate - you're looking at nearly 5,000 physical qubits, all needing near-perfect coherence and connectivity. The processor must execute millions of error-correction cycles without catastrophic failure, a feat that has never been demonstrated at scale.
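The scaling arithmetic can be made explicit. This sketch assumes a fixed overhead per logical qubit. It takes the two ratios from IBM's 2024 comparison: 288 physical qubits for 12 logical qubits with its qLDPC code, versus nearly 3,000 for a surface code offering the same protection. It ignores any additional qubits a full machine needs beyond the code blocks themselves.

```python
def physical_qubits(logical: int, physical_per_logical: float) -> int:
    """Physical qubits needed if every logical qubit costs the same overhead."""
    return round(logical * physical_per_logical)

QLDPC_RATIO = 288 / 12          # 24 physical qubits per logical qubit
SURFACE_RATIO = 3000 / 12       # about 250 physical qubits per logical qubit
TARGET_LOGICAL = 200            # IBM's 2029 target

print("qLDPC:  ", physical_qubits(TARGET_LOGICAL, QLDPC_RATIO))     # 4800
print("surface:", physical_qubits(TARGET_LOGICAL, SURFACE_RATIO))   # 50000
```

The roughly 5,000-qubit figure follows directly from the 24:1 ratio. The same target under the surface-code ratio lands an order of magnitude higher.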

And there's a catch: error correction only works if the physical error rate stays below a threshold, around 1% (one error per 100 operations) for the surface code, and in practice well below that to keep the qubit overhead manageable. Current systems are just crossing that line. Google's experiments show logical error rates approaching the physical gate error rate, which is progress, but not yet sufficient for practical algorithms. The race is on to push error rates lower and coherence times longer before the thermodynamic and material limits slam the door shut.
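A common rule of thumb for the surface code shows why the threshold matters. The logical error rate per round scales roughly as p_L ≈ A (p / p_th)^((d+1)/2), where p is the physical error rate, p_th is the threshold, and d is the code distance. The sketch assumes p_th = 1% and a prefactor A = 0.1. Both values are illustrative; real devices and decoders differ.

```python
def logical_error_per_round(p: float, d: int, p_th: float = 0.01, a: float = 0.1) -> float:
    """Rule-of-thumb surface-code scaling: a * (p / p_th) ** ((d + 1) // 2).

    p    -- physical error rate per operation
    d    -- code distance (odd), which sets how many errors the code tolerates
    p_th -- assumed threshold (about 1%)
    a    -- assumed prefactor; real values depend on hardware and decoder
    """
    return a * (p / p_th) ** ((d + 1) // 2)

for p in (0.001, 0.005, 0.015):
    rates = ", ".join(f"d={d}: {logical_error_per_round(p, d):.1e}" for d in (3, 5, 7))
    print(f"p={p}: {rates}")
```

At p = 0.1%, each step up in distance cuts the logical error rate tenfold. At 1.5%, above the assumed threshold, adding qubits makes things worse. The formula is only a rough guide near and below threshold.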

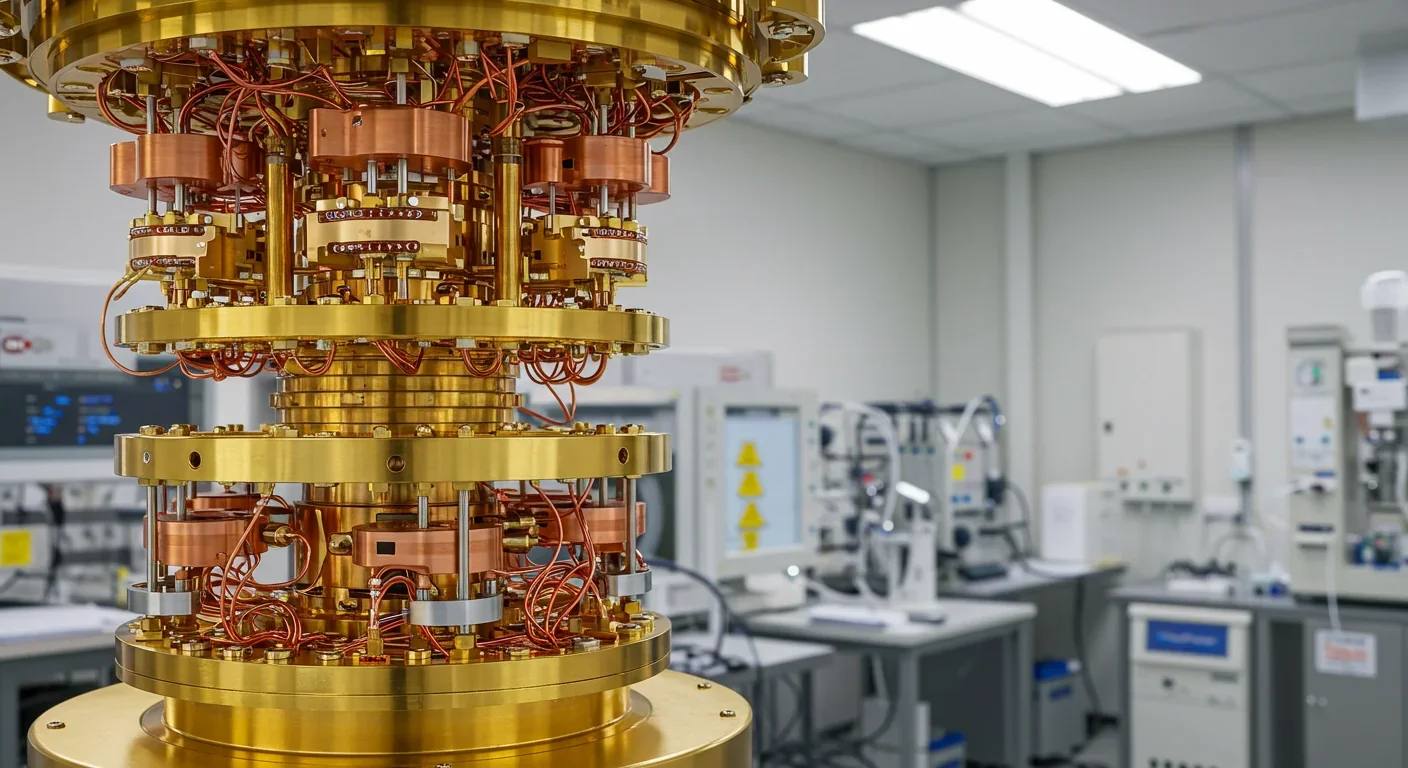

Because decoherence is fundamentally a battle against the environment, quantum engineers are fighting on every front. Temperature control is paramount. Superconducting qubits operate at 10 to 100 millikelvin, requiring dilution refrigerators that mix helium-3 and helium-4 isotopes. These machines consume kilowatts of power and cost millions of dollars, yet they only buy milliseconds of coherence.
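Why millikelvin? In thermal equilibrium, a qubit's excited-state population follows the Boltzmann factor for a two-level system, p_e = 1 / (1 + exp(hf / k_B T)). The sketch assumes a 5 GHz qubit transition, typical of superconducting qubits, and compares a few temperatures. It also computes the state's purity, Tr(ρ²), which reaches exactly 1 only at absolute zero: the third-law point made earlier in this article. The model ignores non-equilibrium effects such as stray quasiparticles.

```python
import math

H = 6.62607015e-34      # Planck constant, J*s
K_B = 1.380649e-23      # Boltzmann constant, J/K

def excited_population(freq_hz: float, temp_k: float) -> float:
    """Thermal probability of finding a two-level system in its excited state."""
    x = H * freq_hz / (K_B * temp_k)        # qubit energy relative to thermal energy
    return 1.0 / (1.0 + math.exp(x))

def purity(p_excited: float) -> float:
    """Tr(rho^2) of a diagonal qubit state; 1.0 only for a perfectly pure state."""
    return (1.0 - p_excited) ** 2 + p_excited ** 2

QUBIT_FREQ = 5e9    # assumed 5 GHz transition frequency

for t in (0.010, 0.050, 0.100, 2.7):   # kelvin; 2.7 K is the cosmic microwave background
    pe = excited_population(QUBIT_FREQ, t)
    print(f"T={t * 1000:7.1f} mK  excited={pe:.2e}  purity={purity(pe):.6f}")
```

At 10 mK the thermal excitation is around one in tens of billions. At 100 mK it is several percent, and at the temperature of deep space the qubit is close to a coin toss.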

Material science offers another lever. The flux noise that plagues superconducting qubits arises from surface spins and defects in the substrate. Researchers are experimenting with ultraclean fabrication techniques, higher-purity substrates such as high-resistivity silicon, and atomic-layer deposition to minimize impurities. Each incremental improvement translates into longer coherence.

"We've created a new handle for modifying coherence properties in molecular systems."

- Danna Freedman, MIT Professor of Chemistry

Dynamical decoupling - applying rapid pulse sequences to "average out" environmental noise - has become a standard tool. By flipping qubits at rates faster than the noise fluctuations, engineers can extend coherence without changing the hardware. Machine learning now plays a role too: convolutional neural networks trained on synthetic noise spectra can infer the real-time noise environment of a qubit and design bespoke decoupling sequences on the fly, effectively adapting to changing conditions.
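A small Monte Carlo sketch reproduces the averaging effect of dynamical decoupling. It assumes slowly drifting frequency noise, modeled as an Ornstein-Uhlenbeck process whose correlation time is ten times longer than the experiment, plus ideal, instantaneous π pulses. All parameter values are illustrative. Each pulse flips the sign with which phase accumulates, so noise that barely changes between pulses cancels. Free evolution dephases almost completely, while a single Hahn echo or a four-pulse CPMG sequence keeps most of the coherence. This is not the machine-learning approach described above, only the underlying pulse-sequence idea.

```python
import cmath
import math
import random

T_TOTAL = 10e-6     # assumed total evolution time: 10 microseconds
STEPS = 200         # time steps per shot, so dt = 50 ns
SIGMA = 3e5         # assumed rms frequency noise, rad/s
TAU = 100e-6        # assumed noise correlation time, much longer than T_TOTAL
SHOTS = 1000        # noise realizations averaged per sequence

def noise_trace(rng: random.Random) -> list[float]:
    """One realization of slowly drifting frequency noise (Ornstein-Uhlenbeck)."""
    dt = T_TOTAL / STEPS
    decay = math.exp(-dt / TAU)
    kick = SIGMA * math.sqrt(1.0 - decay ** 2)
    x = rng.gauss(0.0, SIGMA)              # start in the stationary distribution
    trace = []
    for _ in range(STEPS):
        trace.append(x)
        x = decay * x + kick * rng.gauss(0.0, 1.0)
    return trace

def toggling_signs(n_pulses: int) -> list[int]:
    """+1/-1 weight of each time step. Ideal, instantaneous pi pulses at the
    CPMG times (k - 1/2) * T / n each flip the sign; n_pulses=0 is free evolution."""
    signs = []
    for i in range(STEPS):
        frac = (i + 0.5) / STEPS                    # step midpoint as a fraction of T
        flips = min(n_pulses, math.floor(frac * n_pulses + 0.5))
        signs.append(1 if flips % 2 == 0 else -1)
    return signs

def coherence(n_pulses: int, seed: int = 7) -> float:
    """|<exp(i * phase)>| over many noise realizations; 1.0 means no dephasing."""
    rng = random.Random(seed)              # same seed -> same noise for every sequence
    signs = toggling_signs(n_pulses)
    dt = T_TOTAL / STEPS
    total = 0j
    for _ in range(SHOTS):
        phase = dt * sum(s * x for s, x in zip(signs, noise_trace(rng)))
        total += cmath.exp(1j * phase)
    return abs(total) / SHOTS

for n, label in ((0, "free evolution"), (1, "Hahn echo"), (4, "CPMG, 4 pulses")):
    print(f"{label:<15} coherence = {coherence(n):.3f}")
```

Noise that fluctuates faster than the pulse spacing would not cancel this way. That is why practical sequences are tailored to the measured noise spectrum.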

MIT's recent development of the quarton coupler, which enables ten-times-faster operations through stronger nonlinear coupling, tackles decoherence from a different angle. If you can't extend coherence time, shrink the time needed for each operation. By running processors faster, more gates fit inside the coherence window, effectively stretching the usable lifetime of quantum information.
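The arithmetic behind faster gates is simple. If coherence decays roughly as exp(-t / T2), the number of sequential gates that fit before coherence drops below a chosen floor scales as T2 divided by the gate time. The sketch assumes T2 = 100 µs, a 90% coherence floor, and gate times of 500 ns versus 50 ns. These are illustrative values, not measured quarton-coupler figures.

```python
import math

def gate_budget(t2_s: float, gate_time_s: float, min_coherence: float = 0.9) -> int:
    """Sequential gates that fit before exp(-t / T2) falls below min_coherence."""
    usable_time = -t2_s * math.log(min_coherence)    # time at which coherence hits the floor
    return math.floor(usable_time / gate_time_s)

T2 = 100e-6                      # assumed coherence time: 100 microseconds
for gate_ns in (500, 50):        # a slow gate, and one ten times faster
    print(f"{gate_ns:>4} ns gates: {gate_budget(T2, gate_ns * 1e-9)} gates before coherence < 90%")
```

Making every gate ten times faster buys the same tenfold increase in usable gates as making the qubit ten times more coherent.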

Topological qubits - still largely theoretical - promise intrinsic protection. By encoding information in global properties of a system rather than local states, topological qubits should resist local perturbations that cause decoherence. Microsoft has invested heavily in Majorana zero modes, exotic quasiparticles that could realize topological qubits, but experimental progress has been slow and contentious. No scalable topological quantum computer exists yet.

IBM's modular approach aims to scale by connecting smaller quantum processors via tunable couplers, sidestepping the fabrication challenges of building monolithic chips with thousands of qubits. Their 2029 Starling processor is designed to reach 200 logical qubits by distributing physical qubits across linked modules, each operating within its coherence envelope. Success hinges on keeping cross-chip error rates low while preserving coherence during inter-chip operations - an untested feat at scale.

Google continues refining its Sycamore architecture, focusing on improving gate fidelities and error-correction codes. Their 2023 demonstration that logical qubit performance improves with increased physical qubit count was a crucial proof of concept, showing that bigger codes can indeed beat the noise if errors stay below threshold.

Quantinuum, leveraging trapped-ion technology, uses surface-electrode traps in a quantum charge-coupled device (QCCD) architecture that shuttles ions between zones, giving all-to-all connectivity: any qubit can interact with any other. The catch is that transport must be controlled precisely to keep the phase errors it induces in check. Their collaboration with Microsoft on efficient logical qubit encoding hints at a strategy to win the coherence race through fundamentally different hardware rather than brute-force error correction.

Meanwhile, startups like Quandela are betting on photonic qubits, which barely interact with their environment. Photons don't decohere in the traditional sense - they either arrive at a detector or they're lost. This immunity to thermal noise is attractive, but photonic qubits face their own challenges: generating and detecting single photons reliably, and implementing two-qubit gates with high fidelity, remain formidable obstacles.

No matter how clever the engineering, thermodynamics imposes hard limits. The third law tells us we can't reach absolute zero, which means thermal fluctuations will always exist. The uncertainty principle guarantees that any measurement disturbs the system. And decoherence is ultimately an entropy problem: quantum information is extraordinarily low-entropy, and the universe wants to spread that entropy around. Fighting decoherence is fighting the second law of thermodynamics.

The Holevo-Landauer bound reveals a fundamental truth: the more quantum information you encode, the more heat you generate - and heat accelerates decoherence. We're approaching a hard wall set by thermodynamics itself.

Recent theoretical work has formalized these limits. The Holevo-Landauer bound links the amount of classical information extractable from a quantum system to the heat dissipated during encoding. In practical terms, this means the more information you try to cram into a quantum system, the more you heat it, accelerating decoherence. There's a fundamental trade-off between information density and coherence time, and we're starting to bump against it.

Even if we achieve seconds of coherence, certain algorithms will remain out of reach. Shor's algorithm for factoring large integers - the poster child for quantum computing's threat to cryptography - requires millions of high-fidelity gates. Running that on a processor with millisecond coherence times demands error correction so aggressive that the overhead spirals into the millions of physical qubits. Unless coherence times improve by orders of magnitude, or error rates drop far below current thresholds, practical code-breaking remains decades away.
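A back-of-the-envelope calculation shows why millions of gates are out of reach without error correction. It assumes independent errors, with each gate failing with probability p, and that any single error spoils the result, which is a pessimistic simplification. Under those assumptions the chance of an error-free run is (1 - p)^N. The sketch works in base-10 logarithms because the probabilities quickly underflow ordinary floating point.

```python
import math

def log10_success(n_gates: int, error_per_gate: float) -> float:
    """log10 of (1 - p) ** N, the chance that N independent gates all succeed."""
    return n_gates * math.log1p(-error_per_gate) / math.log(10)

for n in (1_000, 1_000_000):
    for p in (1e-3, 1e-4):
        print(f"N={n:>9,}  p={p:.0e}  P(no error) ~ 10^{log10_success(n, p):.1f}")
```

A thousand gates at p = 10^-3 still succeed about a third of the time. A million gates at that rate essentially never do, hence the enormous physical-qubit overheads.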

Despite the challenges, progress is real. Coherence times have improved by three orders of magnitude in the past 15 years. Error-correction codes are becoming practical. New qubit designs are circumventing old problems. Autonomous error correction - using engineered dissipation rather than measurement-based feedback - recently achieved logical qubit lifetimes exceeding physical qubit lifetimes for the first time, crossing the "break-even" point that theorists have long considered the gateway to scalable quantum computing.

Hybrid approaches are gaining traction. Pairing fast, short-lived superconducting qubits with long-lived memory qubits - such as nuclear spins or rare-earth ions embedded in crystals - could offer the best of both worlds: rapid computation within a coherence window, then storage in a stable memory until the next burst of processing. This architectural shift mirrors how classical computers use registers and RAM, adapting decades-old computer science wisdom to quantum constraints.

Materials innovation continues to surprise. The discovery that asymmetric crystal hosts can shield qubits from magnetic noise, or that solid neon can suppress charge noise, suggests that the material science of quantum computing is still in its infancy. Every new substrate, every novel fabrication technique, opens possibilities for longer coherence.

And then there's the wildcard: breakthroughs we can't yet predict. Just as silicon transistors scaled far beyond early projections, quantum hardware might find unexpected paths around decoherence. Exotic phases of matter, new quasiparticles, or entirely different physical implementations could sidestep today's limits.

The reality is that quantum computers will probably never escape decoherence entirely. Instead, the field is learning to live with it. Algorithms are being redesigned to minimize circuit depth, packing more computation into fewer gates. Variational quantum algorithms, which offload part of the computation to classical processors, reduce the burden on fragile qubits. Quantum annealing, which doesn't require long coherence times, has already found commercial applications in optimization problems.

The hype around quantum computing often glosses over decoherence, treating it as a temporary inconvenience. It's not. It's the central fact shaping the technology's trajectory. Every roadmap, every processor design, every algorithm is a negotiation with decoherence. When IBM promises 200 logical qubits by 2029, they're betting they can engineer around it. When Google demonstrates quantum supremacy, they're cherry-picking problems that fit within their coherence window.

"The many faces of decoherence remind us that quantum systems are as fragile as they are powerful."

- PostQuantum.com

Understanding decoherence is essential for anyone trying to gauge where quantum computing is headed. The next time you see a headline about quantum breakthroughs, ask: how long did the qubits stay coherent? How many error-correction qubits did they need? What's the error rate? Those numbers tell you whether the demo is a genuine step toward practical quantum computing or just a controlled lab trick.

The quantum revolution is coming, but it's arriving on a timescale dictated by decoherence. Whether that's five years or fifty depends on whether we can finally teach qubits to stay quantum just a little bit longer.
