TL;DR: Memristor memory merges storage and computation into a single chip, enabling AI to run faster and more efficiently by computing where data lives rather than shuttling information between separate memory and processors.

What if your computer's memory could think? Not in some distant, sci-fi future, but right now, in labs from Stanford to Seoul. Engineers are building chips where storage and processing aren't separate kingdoms but unified territories. The technology behind this shift is called memristor memory, and it's poised to change everything from how your phone recognizes your face to how data centers crunch climate models.

Traditional computing has a problem: data spends most of its life traveling. Information stored in memory chips must shuttle to the CPU for processing, then return home. This commute, repeated billions of times per second, burns energy and creates bottlenecks. It's like having a library where every book must be carried to a single reading room across campus. Memristors tear down that wall. They compute where data lives.

The memristor story begins with a prediction. In 1971, circuit theorist Leon Chua noticed a gap in circuit theory. The resistor, capacitor, and inductor each link a different pair of the basic circuit variables (voltage, current, charge, and magnetic flux), but no element linked charge directly to flux. He argued that a fourth fundamental element should fill that role and called it the memristor (memory resistor). The first three were already powering electronics. The fourth remained theoretical until 2008, when HP Labs built a working device from titanium dioxide.

A memristor is deceptively simple: two electrodes sandwiching a thin film of material, often a metal oxide. Apply a voltage and the resistance changes. Remove the power and the resistance stays put, remembering its last state without consuming energy. That's the "mem" in memristor. Unlike DRAM, which forgets everything the moment you unplug it, memristors are non-volatile. They're storage that doesn't forget.

But here's where it gets interesting. Because resistance changes gradually rather than flipping between two fixed states, memristors can store multiple values in a single cell. Instead of just 0 or 1, you might have 0.3 or 0.7. This analog behavior makes them ideal for mimicking biological synapses, whose strength varies continuously. Neural networks accelerated by memristors can perform their core multiply-and-add calculations directly in the memory array instead of shuttling data to the CPU.
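The following minimal Python sketch makes the multi-level idea concrete. It assumes a cell with a fixed number of evenly spaced, perfectly repeatable states, which real devices are not. The helper name `quantize_to_levels` and the [0, 1] weight range are illustrative assumptions. A binary cell forces 0.3 down to 0.0, while an 11-level cell can hold it almost exactly.

```python
def quantize_to_levels(w, w_min, w_max, levels):
    """Snap weight w to the nearest of `levels` evenly spaced states in [w_min, w_max].

    Hypothetical helper: assumes ideal, evenly spaced, repeatable cell states.
    """
    if levels < 2:
        raise ValueError("a cell needs at least two states")
    w = min(max(w, w_min), w_max)          # clip to the programmable range
    step = (w_max - w_min) / (levels - 1)  # spacing between adjacent states
    index = round((w - w_min) / step)      # index of the nearest state
    return w_min + index * step


# Binary cell (2 states) versus a multi-level cell (11 states)
print(quantize_to_levels(0.3, 0.0, 1.0, 2))   # snaps to 0.0
print(quantize_to_levels(0.3, 0.0, 1.0, 11))  # approximately 0.3
```

The more distinguishable states a cell offers, the smaller this rounding error becomes. That is one reason multi-level cells matter for storing neural-network weights.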

The physics involves ion migration. When a voltage is applied across the device, oxygen vacancies or metal ions drift through the oxide layer, forming or breaking conductive filaments. These filaments determine resistance. Reverse the voltage and the filaments dissolve, resetting the state. Switching can happen in nanoseconds and consume only picojoules, a tiny fraction of what traditional memory requires.
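The HP team described its titanium dioxide device with a simple "linear ion drift" model, which shows how one internal state variable can set resistance. In that model, a doped region of width w inside a film of thickness D determines the resistance, and the region widens or narrows in proportion to the current passing through it. This abstracts away the filament geometry described above. The Python sketch below integrates the model step by step. The parameter defaults (R_on = 100 Ω, R_off = 16 kΩ, D = 10 nm, mobility 1e-14 m²/(V·s)) are illustrative assumptions, and the time scale they produce is not representative of real devices, so read the results qualitatively.

```python
def simulate_linear_drift(voltages, dt, w0, D=10e-9, r_on=100.0, r_off=16e3, mu_v=1e-14):
    """Euler-integrate the linear ion-drift memristor model.

    M(w)  = r_on * w/D + r_off * (1 - w/D)     (state-dependent resistance)
    dw/dt = mu_v * (r_on / D) * i(t)           (state moves with the current)

    voltages: applied voltage at each step (V); dt: step size (s);
    w0: initial width of the doped region (m). Defaults are illustrative.
    Returns the resistance (ohms) after each step.
    """
    w = w0
    history = []
    for v in voltages:
        m = r_on * (w / D) + r_off * (1 - w / D)
        i = v / m                             # Ohm's law at the present state
        w += mu_v * (r_on / D) * i * dt       # positive current widens the doped region
        w = min(max(w, 0.0), D)               # the region cannot leave the film
        history.append(r_on * (w / D) + r_off * (1 - w / D))
    return history


r_set = simulate_linear_drift([1.0] * 100, 1e-3, w0=5e-9)     # positive bias: resistance falls
r_idle = simulate_linear_drift([0.0] * 100, 1e-3, w0=5e-9)    # no bias: resistance holds
r_reset = simulate_linear_drift([-1.0] * 100, 1e-3, w0=5e-9)  # reverse bias: resistance rises
```

With zero applied voltage the state never moves. That is the non-volatility described earlier, expressed as a model.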

Memristors aren't alone in the quest to replace conventional RAM. They compete with several other non-volatile technologies, each with different trade-offs. MRAM (magnetoresistive RAM) uses magnetic tunnel junctions, storing data as the orientation of a magnetic layer. It's fast and offers very high write endurance, often described as practically unlimited, making it attractive for industrial applications. But MRAM struggles to scale down to the tiny dimensions needed for high-density storage, and it generally consumes more power per operation than memristors.

Phase-change memory (PCM) works by switching materials between crystalline and amorphous states, which have very different electrical resistance. Intel and Micron's 3D XPoint technology, sold by Intel as Optane memory for data centers, is widely reported to be PCM-based. PCM offers high endurance and decent speed, but the phase transition requires significant heat, limiting energy efficiency. Writing data to PCM can take hundreds of nanoseconds, slower than memristors.

Then there's ReRAM (resistive RAM), which operates on principles nearly identical to memristors. In fact, the terms are often used interchangeably, though ReRAM typically refers to commercial implementations while "memristor" is the broader term. Both rely on resistance switching via filament formation. The key advantage over PCM and MRAM is simplicity: memristors/ReRAM use basic metal-oxide structures compatible with existing semiconductor manufacturing.

What sets memristors apart is their ability to perform in-memory computation. While MRAM and PCM have been commercialized mainly as storage, memristors arranged in crossbar arrays can execute matrix-vector multiplication using Ohm's law and Kirchhoff's current law. An input voltage applied to each row drives a current through every memristor on that row equal to the voltage times the device's conductance (Ohm's law). Each column wire sums the currents flowing into it (Kirchhoff's current law), so the column currents form the output vector. The entire multiplication happens in a single, massively parallel analog step. For AI workloads dominated by matrix math, this architectural leap promises performance gains projected at 10 times or more compared to GPU-based systems.
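The crossbar calculation reduces to one line of arithmetic. If row i carries voltage V_i and the device joining row i to column j has conductance G_ij (the inverse of its resistance), then column j collects I_j = Σ_i V_i·G_ij. The Python sketch below computes that sum for an idealized array, assuming no wire resistance, no sneak currents, and columns held at 0 V. The loops run sequentially in software, whereas the hardware produces every column current at once.

```python
def crossbar_mvm(voltages, conductances):
    """Column currents of an idealized crossbar: I_j = sum_i V_i * G_ij.

    voltages[i]: input voltage on row i (V)
    conductances[i][j]: conductance of the device joining row i and column j (S)
    Assumes ideal wires, no sneak currents, and columns held at 0 V.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one row of conductances per input voltage")
    currents = [0.0] * len(conductances[0])
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            currents[j] += v * g  # Ohm's law per device; the column wire sums (KCL)
    return currents


# Two inputs, two outputs
print(crossbar_mvm([0.1, 0.2], [[1e-3, 2e-3], [3e-3, 4e-3]]))  # about [7e-4, 1e-3] amperes
```

In a neural-network layer, the voltages encode the input activations, the conductances encode the weight matrix, and the column currents are the layer's pre-activation outputs.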

The promise of in-memory computing has moved beyond whitepapers. Prototype memristor accelerators have demonstrated order-of-magnitude improvements in performance density and power efficiency over conventional CPUs and SRAM-based ASICs. These aren't production chips yet, but they're functional enough to run real neural networks.

AI inference, where trained models classify images or recognize speech, is memristors' sweet spot. Edge devices like smartphones, drones, and autonomous vehicles need to run AI locally without constant cloud connectivity. Traditional inference accelerators rely on SRAM to cache model weights, but SRAM is volatile, power-hungry, and occupies significant chip area. Memristor crossbars can store weights persistently in the same array that computes with them, slashing both energy use and physical footprint.
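Storing weights in a crossbar raises one practical wrinkle: model weights can be negative, but a conductance cannot. One common approach, assumed here and not the only scheme, stores each weight in a pair of devices and subtracts their column currents, so the weight is proportional to G+ minus G-. In the Python sketch below, the linear mapping onto a g_min to g_max window and both function names are hypothetical.

```python
def weight_to_pair(w, w_max, g_min, g_max):
    """Encode a signed weight as (G_plus, G_minus) conductances.

    Hypothetical linear mapping: |w| in [0, w_max] maps onto [g_min, g_max];
    the partner device idles at g_min so the difference carries the sign.
    """
    if abs(w) > w_max:
        raise ValueError("weight outside the programmable range")
    g = g_min + (abs(w) / w_max) * (g_max - g_min)
    return (g, g_min) if w >= 0 else (g_min, g)


def pair_to_weight(g_plus, g_minus, w_max, g_min, g_max):
    """Decode the weight from the difference of the two devices' conductances."""
    return (g_plus - g_minus) / (g_max - g_min) * w_max


g_plus, g_minus = weight_to_pair(-0.5, 1.0, 1e-6, 1e-4)
print(g_plus, g_minus)                                   # G_minus carries the magnitude
print(pair_to_weight(g_plus, g_minus, 1.0, 1e-6, 1e-4))  # approximately -0.5
```

The price of this encoding is two devices per weight, which eats into the area savings the paragraph describes.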

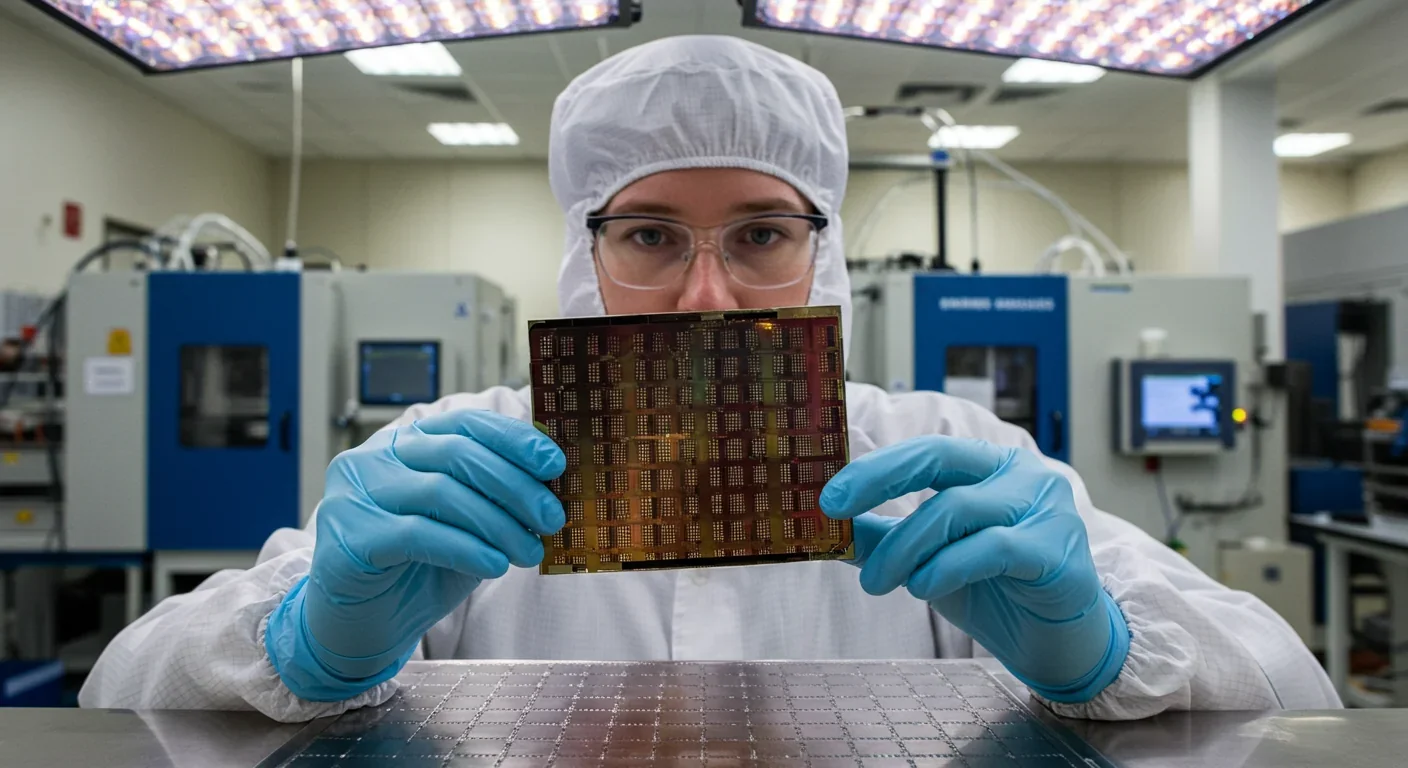

Neuromorphic computing represents another frontier. IBM and other research teams are building chips that mimic the brain's structure, using memristors and related resistive memory devices as artificial synapses. These systems process information asynchronously, reacting to events rather than marching to a clock. For tasks like sensor fusion or pattern recognition, neuromorphic chips aim to approach the energy efficiency of biological brains. A wafer-scale memristive circuit recently packed millions of synapses onto a single chip, a step toward brain-scale neuromorphic computing.

Data centers are also experimenting. Training large language models like GPT requires moving terabytes of data between memory and processors, a task that dominates energy budgets. Memristor-based accelerators could reduce that overhead by keeping model parameters stationary and computing in place. Early prototypes suggest energy savings of 100 times for specific workloads, though commercial deployment remains years away.

Even specialized applications are emerging. Epileptic seizure detection systems use memristors in reservoir computing architectures to analyze brain signals in real time. The analog nature of memristors allows them to process time-series data naturally, without digitizing every sample. Similarly, guidance and control neural networks for aerospace applications benefit from memristors' radiation tolerance and low power draw.

For all their promise, memristors face sobering challenges before they can replace conventional memory. Device variation tops the list. No two memristors behave identically, even when fabricated side by side on the same wafer. Resistance values vary because of microscopic differences in oxide composition, electrode interfaces, and filament geometry. This variability wreaks havoc on neural networks, where precise weight values determine accuracy.
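A quick Monte Carlo run shows how device-to-device variation turns into computation error. This Python sketch assumes each programmed conductance lands off target by an independent Gaussian relative error with standard deviation `sigma`, a simplified, hypothetical noise model. It then measures the average relative error of the crossbar's column currents. The voltages and conductances in the example are made up.

```python
import random


def mvm(voltages, conductances):
    """Ideal crossbar column currents: I_j = sum_i V_i * G_ij."""
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances)) for j in range(n_cols)]


def perturb(conductances, sigma, rng):
    """Copy of the array with each device off target by a random relative error.

    Assumed noise model: independent Gaussian, relative std `sigma`, clipped at 0 S.
    """
    return [[max(0.0, g * (1.0 + rng.gauss(0.0, sigma))) for g in row] for row in conductances]


def mean_relative_error(voltages, conductances, sigma, trials=200, seed=0):
    """Average relative error of the column currents over `trials` random arrays."""
    rng = random.Random(seed)
    ideal = mvm(voltages, conductances)
    total = 0.0
    for _ in range(trials):
        noisy = mvm(voltages, perturb(conductances, sigma, rng))
        total += sum(abs(n - i) / abs(i) for n, i in zip(noisy, ideal)) / len(ideal)
    return total / trials


v = [0.2, 0.1, 0.3]
g = [[1e-5, 2e-5], [3e-5, 1e-5], [2e-5, 4e-5]]
print(mean_relative_error(v, g, sigma=0.05))
print(mean_relative_error(v, g, sigma=0.20))  # roughly four times larger
```

Under this model, output error grows in proportion to device spread. That is why tighter fabrication control, or training that tolerates noise, matters for accuracy.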

Conductance drift compounds the problem. Over time, memristor resistance shifts as ions continue migrating at room temperature, even without applied voltage. A weight programmed today might differ significantly a month later. Engineers compensate with error-correction algorithms and periodic recalibration, but these workarounds add complexity and power overhead. Achieving drift rates low enough for decade-long data retention remains an open research question.
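Phase-change memory research commonly models drift as a power law, G(t) = G0·(t/t0)^(-ν). The Python sketch below borrows that model as an assumption for memristors, with a hypothetical exponent ν = 0.05 shared by every device. If a reference device drifts by the same factor as the weights, reading it lets the system rescale every output, which is one simple form of the recalibration described above. Real devices drift at different rates, so a single correction factor is only a first-order fix.

```python
def drifted(g0, t, t0=1.0, nu=0.05):
    """Conductance at time t (s) under an assumed power-law drift: g0 * (t / t0) ** -nu.

    The exponent nu and reference time t0 are illustrative, and every device is
    assumed to share them; valid for t >= t0.
    """
    return g0 * (t / t0) ** (-nu)


def compensate(readings, ref_programmed, ref_measured):
    """Rescale drifted readings by how far a reference device has drifted."""
    scale = ref_programmed / ref_measured
    return [r * scale for r in readings]


month = 30 * 24 * 3600                             # about one month, in seconds
weights = [1e-5, 4e-5]                             # conductances as programmed (S)
read = [drifted(g, month) for g in weights]        # every reading has sagged
ref = 2e-5                                         # reference device with a known target
print(compensate(read, ref, drifted(ref, month)))  # approximately [1e-5, 4e-5]
```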

Write endurance is another barrier. Flash memory tolerates up to roughly 100,000 write cycles, and many memristor materials do better, surviving millions of writes before they degrade. But for AI training, where weights update constantly, even millions of cycles can be used up quickly, so this lifespan is inadequate. Hybrid CMOS-memristor designs attempt to mitigate degradation by using transistors to limit current during programming, but this increases circuit area and cost.

Scaling memristor arrays to billions of devices introduces new headaches. Crossbar architectures suffer from sneak currents, where unintended electrical paths through neighboring cells corrupt data. Selector devices, such as diodes, threshold switches, or access transistors, must be integrated with each memristor to block sneak paths. But selectors consume area, eroding the density advantage. 3D stacking offers one way to win that density back, layering multiple crossbar planes vertically. However, thermal management becomes critical when heat from lower layers can't dissipate efficiently.
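The simplest case shows the problem. In a 2×2 crossbar, a read applies a voltage between row 0 and column 0 while the other lines float. Current can then also leak through the three other cells in series. The Python sketch below assumes ideal wires and models a selector as an ideal diode that conducts only from row to column. The resistance values in the example are hypothetical.

```python
def parallel(r1, r2):
    """Equivalent resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)


def read_2x2(r, selectors=False):
    """Resistance seen when reading cell (0, 0) of a 2x2 crossbar.

    r[i][j]: resistance (ohms) of the cell joining row i and column j.
    Assumptions: unselected lines float, wires are ideal, and a selector is an
    ideal diode conducting only from row to column.
    """
    # Sneak path: row 0 -> cell (0,1) -> column 1 -> cell (1,1) -> row 1 -> cell (1,0) -> column 0
    sneak = r[0][1] + r[1][1] + r[1][0]
    if selectors:
        # Cell (1,1) would carry current from column to row, which its diode blocks.
        return r[0][0]
    return parallel(r[0][0], sneak)


cells = [[1e6, 1e4],   # target cell (0,0) stores a high-resistance "0"
         [1e4, 1e4]]   # its neighbors store low-resistance "1"s
print(round(read_2x2(cells)))                  # 29126: the "0" reads like a "1"
print(round(read_2x2(cells, selectors=True)))  # 1000000
```

In a large array, there are many such parallel paths, so the error gets worse as the crossbar grows. That is why selectors become unavoidable at scale.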

Manufacturing compatibility with existing CMOS fabs is crucial for commercial viability. Memory makers have invested hundreds of billions in current production lines. If memristors require exotic materials or processes incompatible with standard silicon fabs, adoption will stall. Fortunately, metal-oxide memristors can leverage many existing deposition and patterning tools, though optimizing material stacks for performance and reliability demands years of iteration.

Venture capital and corporate R&D are flowing into memristor startups and established chipmakers alike. Market analysts project the global memristor market could reach billions in revenue by the early 2030s, driven primarily by AI accelerator demand. That growth hinges on solving the technical hurdles outlined above, but momentum is building.

Crossbar Inc., a Silicon Valley startup, partnered with Chinese foundries to manufacture ReRAM for embedded applications. Their chips target IoT devices where low power and instant-on capability justify the cost premium over flash. Meanwhile, Samsung and SK Hynix have announced R&D programs exploring memristor integration into future memory product lines, though commercial timelines remain vague.

New hybrid memory technologies combine memristors with traditional SRAM or DRAM, aiming for best-of-both-worlds solutions. On-chip AI learning and inference become feasible when fast SRAM caches intermediate results while memristors store model weights. This hybrid approach sidesteps some of memristors' speed and endurance limitations, at the expense of added design complexity.
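One way a hybrid design can spare memristor endurance is to accumulate small weight updates in fast digital memory. The memristor is programmed only when the pending change grows large enough to matter. This is sketched here as an illustrative assumption, not any specific product's method. The Python function below counts device writes under that policy, and `threshold` is a hypothetical tuning knob.

```python
def count_device_writes(updates, threshold):
    """Count memristor programming events when updates are buffered digitally.

    Each update is added to a pending total (held in SRAM in this hypothetical design);
    the device is programmed, and the buffer cleared, only once the pending magnitude
    reaches `threshold`. With threshold 0, every nonzero update triggers a write.
    """
    pending = 0.0
    writes = 0
    for u in updates:
        pending += u
        if pending != 0.0 and abs(pending) >= threshold:
            writes += 1
            pending = 0.0
    return writes


updates = [0.125] * 1000
print(count_device_writes(updates, 0.0))  # 1000: one write per update
print(count_device_writes(updates, 0.5))  # 250: one write per four updates
```

The effective weight at any moment is the device value plus the pending buffer, so reads must combine both. That bookkeeping is part of the added design complexity the paragraph mentions.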

The broader non-volatile memory landscape is heating up, with projected compound annual growth rates exceeding 20% through 2030. Memristors, MRAM, and PCM are all vying for slices of that pie, targeting different niches. The technology that achieves the best balance of density, speed, endurance, and cost will likely dominate. Right now, no clear winner has emerged.

If memristors fulfill their potential, the devices you use daily will change in subtle but profound ways. Smartphones could recognize your voice or face almost instantly, even when offline, because the AI model would live in non-volatile memristor memory that retains it even when the device powers down. Battery life could stretch days longer, since computation happens in memory rather than shuttling data across power-hungry buses.

Autonomous vehicles rely on split-second decision-making, processing sensor feeds from cameras, lidar, and radar. Memristor-based inference engines could reduce latency and energy consumption, making electric self-driving cars more practical. Safety-critical systems also benefit from memristors' inherent radiation hardness, a byproduct of their simple oxide structure. This makes them appealing for aerospace and military applications where cosmic rays can flip bits in conventional memory.

Wearable health monitors might use memristor-based neuromorphic chips to analyze biometric data locally, alerting users to irregular heartbeats or early signs of illness without transmitting sensitive data to the cloud. Privacy improves when computation stays on-device, and memristors make that feasible at ultra-low power budgets suitable for battery-powered gadgets.

Data centers, the invisible backbone of cloud computing, stand to gain enormously. Training cutting-edge AI models currently draws megawatts of power, comparable to the electricity demand of a small town. Memristor accelerators could slash that consumption, making AI development more sustainable and affordable. This, in turn, could democratize access to advanced models, enabling smaller companies and researchers to compete with tech giants.

The leap to memristor-based systems won't happen overnight. Software and hardware ecosystems must co-evolve. Algorithms designed for perfect, deterministic arithmetic on CPUs don't translate directly to analog memristor crossbars, where noise and variation are facts of life. Machine learning frameworks will need to incorporate noise-aware training techniques and fault-tolerant architectures.
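Noise-aware training can be as simple as injecting the expected hardware noise during training, so the model learns weights that tolerate it. The Python sketch below trains a linear model with plain stochastic gradient descent, perturbing the weights on every forward pass with independent multiplicative Gaussian noise. Both the linear model and the noise model are illustrative choices, not a specific framework's API. The clean weights it returns are what would be programmed into the array.

```python
import random


def train_noise_aware(data, sigma, lr=0.05, epochs=100, seed=0):
    """SGD on squared error for y ~ w.x with multiplicative weight noise injected.

    data: list of (x, y) pairs, x a tuple of features.
    sigma: relative standard deviation of the simulated device noise (assumed model).
    """
    rng = random.Random(seed)
    n = len(data[0][0])
    w = [0.0] * n
    for _ in range(epochs):
        for x, y in data:
            eps = [rng.gauss(0.0, sigma) for _ in range(n)]  # fresh noise each pass
            pred = sum(wk * (1.0 + ek) * xk for wk, ek, xk in zip(w, eps, x))
            err = pred - y
            for k in range(n):
                # d(0.5 * err^2)/dw_k, with the sampled noise held fixed
                w[k] -= lr * err * (1.0 + eps[k]) * x[k]
    return w


grid = (-1.0, -0.5, 0.0, 0.5, 1.0)
data = [((a, b), 2 * a - b) for a in grid for b in grid]  # target weights (2, -1)
print(train_noise_aware(data, sigma=0.1))  # close to [2, -1]
```

Averaged over the noise, the injected perturbation acts like a penalty on large weights. As a result, noise-aware training tends to favor solutions whose outputs change less when individual devices misbehave.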

Developers accustomed to programming traditional computers may struggle with neuromorphic paradigms. Instead of sequential instructions, event-driven spiking neural networks process data asynchronously. New programming languages and tools are emerging to bridge this gap, but the learning curve is steep. Educational institutions will play a crucial role in training the next generation of engineers fluent in both digital and analog computing.
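For readers new to spiking models, the leaky integrate-and-fire neuron is the usual starting point. Its membrane potential integrates incoming current, leaks back toward rest, and emits a spike, a discrete event, when it crosses a threshold. The Python sketch below steps time at a fixed interval for simplicity, whereas event-driven hardware updates only when spikes arrive. Its time constant, threshold, and input values are illustrative assumptions.

```python
def lif_spikes(input_current, dt=1e-3, tau=20e-3, threshold=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron; return the step indices of spikes.

    dv/dt = -v / tau + I: the potential leaks toward 0 with time constant tau
    and integrates the (unitless, illustrative) input I. Crossing `threshold`
    emits a spike and resets the potential to `v_reset`.
    """
    v = v_reset
    spikes = []
    for step, i_in in enumerate(input_current):
        v += dt * (-v / tau + i_in)
        if v >= threshold:
            spikes.append(step)
            v = v_reset
    return spikes


steps = 1000  # one second at dt = 1 ms
print(len(lif_spikes([40.0] * steps)))   # 0: potential settles below threshold
print(len(lif_spikes([100.0] * steps)))  # fires regularly
print(len(lif_spikes([200.0] * steps)))  # stronger input, more spikes
```

Information is carried by when and how often spikes occur rather than by a stream of numbers. This is the shift in thinking the paragraph describes.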

Standards and interoperability matter too. If each chipmaker implements memristor memory differently, software portability suffers. Industry consortia are forming to define benchmarks, testing protocols, and interface specifications. Success depends on cooperation between competitors, a historically difficult proposition in semiconductors.

For consumers, the transition will be invisible if done right. Just as solid-state drives replaced hard disks without requiring you to learn new skills, memristor memory should simply make devices faster and more efficient. The real question is whether manufacturers can deliver that seamlessness while managing the technical risks.

Looking ahead, memristors represent more than a faster memory chip. They embody a shift away from the von Neumann architecture that has dominated computing for 80 years. In that architecture, memory and processing are separate, connected by a limited-bandwidth bus. The "von Neumann bottleneck" constrains performance, no matter how fast transistors get. Memristors attack the bottleneck at its root, merging memory and computation into a single operation.

This convergence mirrors biological brains, where synapses both store and process information. Neuromorphic chips built with memristors could unlock new forms of intelligence, capable of learning and adapting in ways current AI cannot. Imagine devices that understand context, anticipate needs, and operate autonomously for months without retraining. That future hinges on memristors proving themselves in production.

The path forward is neither straight nor guaranteed. Competing technologies might leapfrog memristors with unexpected breakthroughs. Manufacturing yields might plateau below commercial viability. Or unforeseen physics could impose hard limits on scalability. History is littered with promising memory technologies that never escaped the lab.

Yet the breadth of research, the level of industry investment, and the tangible prototypes suggest memristors have staying power. Engineers are solving problems one by one: improving endurance with new oxide recipes, compensating for drift with clever circuit designs, scaling arrays with advanced selectors. Progress is incremental but persistent.

Within the next decade, you'll likely encounter memristor technology without knowing it, embedded in devices that simply work better. Your laptop might boot instantly, your car might dodge accidents faster, your doctor might diagnose diseases earlier. The memory that computes is no longer a lab curiosity. It's becoming the invisible foundation of a smarter, more efficient world. Whether that world emerges gradually or arrives in a sudden wave depends on decisions being made right now in research labs and boardrooms across the globe. The silicon revolution isn't over. It's just learned to remember.
