Cache Coherence Protocols: MESI and MOESI Explained

TL;DR: Quantum computers have moved beyond Google's 2019 supremacy claim to solve real problems in drug discovery, optimization, and materials science. While limitations remain severe, industries from pharmaceuticals to finance are already seeing practical quantum advantages.

By 2030, experts predict quantum computers will solve problems affecting billions of lives, from designing miracle drugs to securing our digital infrastructure. But the revolution has already begun, quietly transforming what we thought computers could do. The question isn't whether quantum supremacy is real anymore. It's what happens next.

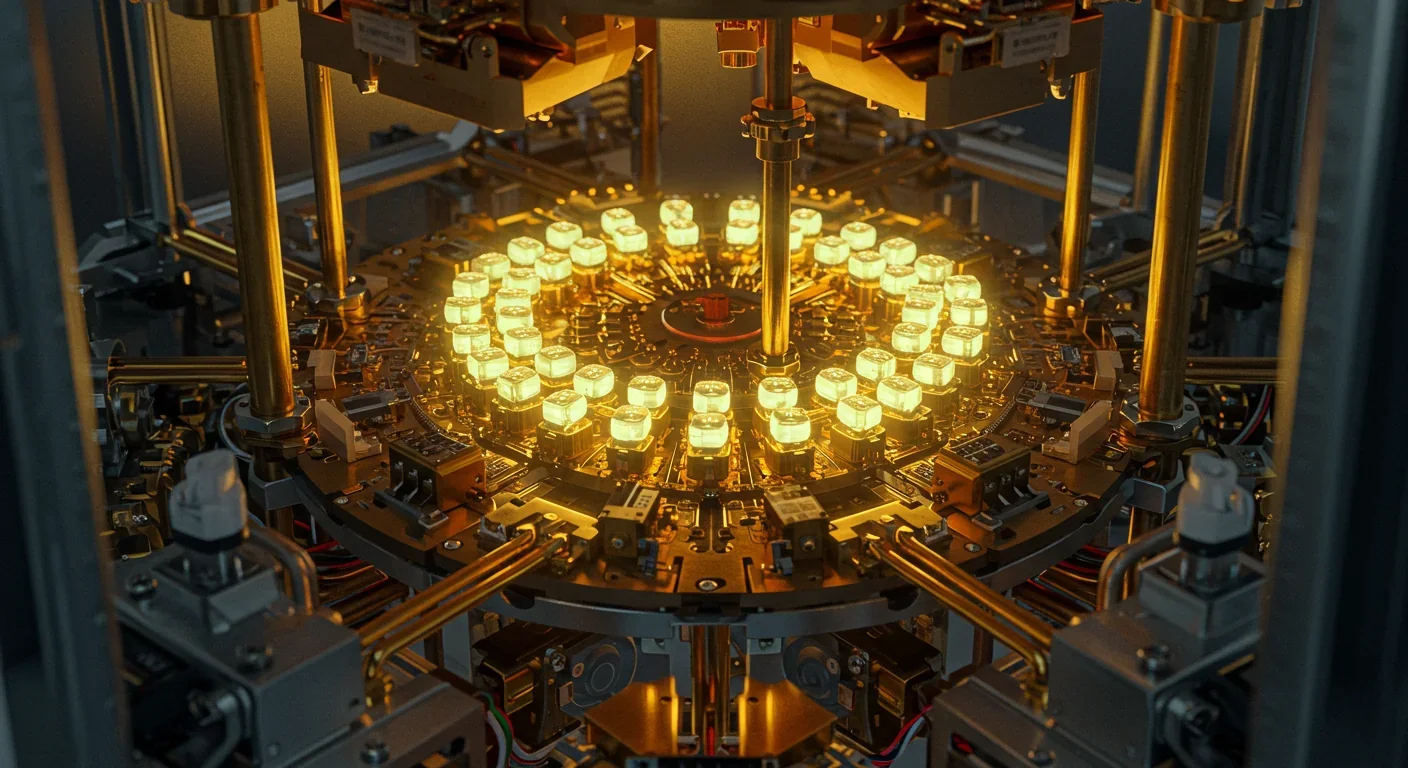

In October 2019, Google reported that its Sycamore processor had completed in 200 seconds a task the company estimated would take the world's most powerful supercomputer 10,000 years. The task itself, random circuit sampling, had no practical application. Critics called it a parlor trick. But they missed the point entirely.

That moment proved something fundamental: quantum computers don't just work faster on certain problems. They work in a completely different way, exploiting quantum mechanics to explore exponentially more possibilities simultaneously. It's like comparing a lightbulb to a laser. Both produce light, but one focuses energy in ways the other never could.

The achievement sparked immediate controversy. IBM quickly challenged Google's 10,000-year claim, arguing classical computers could solve the same problem in days with clever optimizations. The back-and-forth revealed an important truth: quantum supremacy isn't about raw speed. It's about discovering which problems are fundamentally quantum-native, where classical computers hit mathematical walls that quantum systems simply sidestep.

Five years later, quantum computers are tackling problems that actually matter. In 2024, multiple companies demonstrated quantum advantages in optimization, drug discovery, and materials science. These aren't abstract demonstrations anymore. They're solving problems with commercial value.

Pharmaceutical companies now use quantum computers to simulate molecular interactions that classical computers struggle with. Qubit Pharmaceuticals recently used quantum systems to model protein folding and drug binding with unprecedented accuracy. The reason? Molecules are quantum systems. Simulating them on classical computers means translating quantum behavior into classical mathematics, losing critical information in the process. Quantum computers speak the molecule's native language.

The breakthrough isn't just theoretical. Drug discovery typically takes 10-15 years and costs billions. If quantum computers can accurately predict which drug candidates will work before synthesis, they could cut years and hundreds of millions from the timeline. Recent trials suggest we're approaching that threshold faster than expected.

Optimization presents another quantum-native problem space. D-Wave's quantum annealers have been tackling logistics, scheduling, and resource allocation problems since 2011. While debate continues about whether D-Wave achieves true quantum advantage, companies report solving previously intractable optimization problems. Volkswagen used quantum optimization for traffic flow management. DENSO optimized factory paint shop scheduling. These applications save real money and resources.
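Problems like these are commonly handed to an annealer as a QUBO (quadratic unconstrained binary optimization): a set of yes/no variables with a cost for each variable and for each pair. The sketch below is a toy illustration only. The three "tasks", their rewards, and their conflict penalties are hypothetical numbers, and the solver is a classical brute-force search, not a quantum annealer.

```python
from itertools import product

# Hypothetical toy problem: pick among 3 tasks. Diagonal entries are rewards
# (negative cost); off-diagonal entries are penalties for choosing both tasks.
Q = {
    (0, 0): -3, (1, 1): -2, (2, 2): -4,
    (0, 1): 4,   # tasks 0 and 1 conflict
    (1, 2): 1,   # tasks 1 and 2 overlap slightly
    (0, 2): 5,   # tasks 0 and 2 conflict strongly
}

def qubo_energy(bits, q):
    """Energy of a bit assignment: sum of q[i, j] * bits[i] * bits[j]."""
    return sum(weight * bits[i] * bits[j] for (i, j), weight in q.items())

def brute_force_minimum(q, num_vars):
    """Check all 2**num_vars assignments; feasible only for tiny problems."""
    return min(product((0, 1), repeat=num_vars), key=lambda bits: qubo_energy(bits, q))

best = brute_force_minimum(Q, 3)
print(best, qubo_energy(best, Q))
```

The search space doubles with every added variable, which is why exhaustive search stops being an option long before real scheduling or routing problems are reached.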

Understanding why quantum computers excel at specific problems requires grasping quantum mechanics' weird properties: superposition, entanglement, and interference.

Superposition means a qubit can exist in a weighted combination of 0 and 1 until it is measured. A classical bit is either 0 or 1; a qubit carries an amplitude for each. A register of 50 qubits is described by 2^50 amplitudes, over a quadrillion, and a single quantum operation updates all of them at once. The catch is that a measurement returns only one outcome, so a quantum algorithm must be arranged so that the useful answer is the likely one. A classical computer simulating the same register has to store and update every amplitude explicitly.
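To put the 2^50 figure in perspective, here is a minimal sketch of how much memory a classical simulator would need just to hold the full state of an n-qubit register. It assumes 16 bytes per amplitude (one complex number stored as two 64-bit floats); the function name is illustrative.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store every amplitude of an n-qubit state."""
    return (2 ** num_qubits) * bytes_per_amplitude

for n in (10, 30, 50):
    amplitudes = 2 ** n
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {amplitudes:,} amplitudes, {gib:,.2f} GiB")
```

Under that assumption, 30 qubits fit in 16 GiB, while 50 qubits need 16 PiB, and every extra qubit doubles the requirement.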

Entanglement links qubits so that their measurement outcomes are correlated no matter the distance between them, in ways no classical system can reproduce. Measuring one qubit tells you something about its partner, although this cannot be used to send information faster than light. Optimization algorithms exploit entanglement to represent correlations across vast solution spaces that classical algorithms struggle to capture.
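The correlation can be illustrated with a small classical simulation of the simplest entangled state, the Bell state (|00> + |11>)/sqrt(2). This is a sketch that samples outcomes from the state's probabilities in plain Python. It shows the measurement statistics only and does not demonstrate what makes entanglement stronger than classical correlation.

```python
import math
import random

# Amplitudes of the Bell state (|00> + |11>) / sqrt(2), indexed by bitstring.
bell_state = {"00": 1 / math.sqrt(2), "01": 0.0, "10": 0.0, "11": 1 / math.sqrt(2)}

def measure(state, rng):
    """Sample one joint measurement outcome using the Born rule (|amplitude|^2)."""
    outcomes = list(state)
    weights = [abs(state[o]) ** 2 for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [measure(bell_state, rng) for _ in range(1000)]
agree = sum(s[0] == s[1] for s in samples)
ones = sum(s[0] == "1" for s in samples)
print(f"qubits agree in {agree} of 1000 shots")
print(f"first qubit read 1 in {ones} of 1000 shots")
```

Each qubit on its own looks like a fair coin, yet the two readings always match.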

Interference amplifies correct answers while canceling wrong ones, like waves constructively and destructively interfering. Quantum algorithms are choreographed interference patterns, designed so wrong answers cancel out and right answers reinforce themselves. Grover's algorithm searches unsorted databases quadratically faster than any classical algorithm using exactly this principle.
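The amplification can be sketched by simulating Grover's algorithm on a plain list of amplitudes. This is a classical simulation for illustration: the list size (16) and the marked index (11) are arbitrary choices, and the "oracle" simply flips the sign of one known entry.

```python
import math

def grover_search(num_items: int, marked: int) -> list:
    """Simulate Grover's algorithm on a list of amplitudes; return final probabilities."""
    # Start in a uniform superposition over all items.
    amps = [1 / math.sqrt(num_items)] * num_items
    iterations = math.floor(math.pi / 4 * math.sqrt(num_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amps) / num_items
        amps = [2 * mean - a for a in amps]
    return [a * a for a in amps]

probs = grover_search(num_items=16, marked=11)
print(f"P(marked) = {probs[11]:.3f}")
```

With 16 items the loop runs three times, after which the marked item holds roughly 96% of the probability. A classical search would need about 8 lookups on average.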

These properties make quantum computers phenomenal at specific tasks: simulating quantum systems, solving certain optimization problems, factoring large numbers, searching databases, and sampling complex probability distributions. They're terrible at others. Quantum computers won't replace your laptop for email or web browsing. The future is hybrid: classical computers for most tasks, quantum computers for the problems that break classical systems.

The quantum computing landscape has become remarkably competitive. IBM's roadmap aims for 2,000+ qubit systems by 2025. Google continues refining error correction. Meanwhile, Rigetti Computing's chiplet architecture achieved 99.5% gate fidelity on their 36-qubit Cepheus system, halving error rates compared to larger single-chip designs.

That last detail matters enormously. Raw qubit count is misleading. A quantum computer with 1,000 noisy qubits is less useful than one with 100 stable qubits. Error rates determine everything. Current systems typically have error rates of roughly 0.1-2% per two-qubit operation, depending on the hardware. Most quantum algorithms require thousands or millions of operations. Errors compound quickly, corrupting results.
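To see how quickly errors compound, here is a minimal sketch that assumes every operation fails independently with the same probability. That is a simplification, since real hardware errors vary by gate and can be correlated. The 1% rate is an illustrative value.

```python
def success_probability(error_rate: float, num_operations: int) -> float:
    """Chance that no operation fails, assuming independent errors."""
    return (1 - error_rate) ** num_operations

for ops in (10, 100, 1000):
    print(f"{ops:>5} operations at 1% error: {success_probability(0.01, ops):.4%}")
```

At a 1% error rate, only about 37% of 100-operation runs finish without a single error, and almost no 1,000-operation runs do.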

Quantum error correction promises a solution by encoding one logical qubit across multiple physical qubits, using redundancy to detect and correct errors. Google's Willow chip, announced in December 2024, demonstrated error-corrected qubits with longer coherence times than physical qubits, a critical threshold. But practical error correction requires thousands of physical qubits per logical qubit. We're not there yet.
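The redundancy idea can be sketched with its simplest classical analogue, a repetition code decoded by majority vote. This is not how quantum codes work in detail: a quantum code must also correct phase errors and cannot simply copy a qubit. The scaling behavior is similar, though. The sketch assumes independent bit flips and an odd number of copies.

```python
from math import comb

def logical_error_rate(physical_error_rate: float, copies: int) -> float:
    """Probability that a majority of copies flip, so majority voting decodes wrongly."""
    p = physical_error_rate
    majority = copies // 2 + 1
    return sum(
        comb(copies, k) * p**k * (1 - p) ** (copies - k)
        for k in range(majority, copies + 1)
    )

for copies in (1, 3, 5, 7):
    print(f"{copies} copies: logical error rate {logical_error_rate(0.01, copies):.2e}")
```

When the physical error rate is low enough, each added pair of copies cuts the logical error rate sharply. When it is too high, adding copies makes things worse, which is why crossing the error threshold matters.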

Different approaches are emerging. Superconducting qubits (Google, IBM, Rigetti) are currently leading but require near-absolute-zero temperatures. Trapped ion systems (IonQ, Quantinuum, formerly Honeywell's quantum division) offer better coherence at the cost of slower gate speeds. Photonic quantum computers (Xanadu) promise room-temperature operation but face different scaling challenges. Neutral atom systems (QuEra, Atom Computing) occupy a middle ground. Nobody knows which will dominate.

Pharmaceuticals will likely be the first industry to feel the impact. Quantum simulations of molecular dynamics already outperform classical methods for specific protein structures. Major pharmaceutical companies have quantum computing partnerships. Expect initial quantum-designed drugs to enter trials by 2028-2030.

Finance is investing heavily, particularly in portfolio optimization and risk analysis. Quantum algorithms can evaluate vastly more market scenarios than Monte Carlo simulations. Goldman Sachs, JPMorgan, and other financial giants have dedicated quantum teams. Practical applications may emerge by 2026.

Materials science benefits from quantum simulation's ability to predict material properties before synthesis. Research teams are using quantum computers to design better batteries, superconductors, and catalysts. This could accelerate development of sustainable energy technologies.

Cryptography faces an existential threat. Shor's algorithm can factor large numbers exponentially faster than the best known classical algorithms, breaking the RSA encryption that secures much of today's internet traffic. Current quantum computers can't run Shor's algorithm at the scale needed to threaten encryption, but experts estimate that threshold could arrive by 2030-2035. Governments and companies are scrambling to deploy post-quantum cryptography before then.
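Shor's algorithm reduces factoring to finding the order of a number modulo N, which is the only step that needs a quantum computer. The sketch below runs the surrounding classical logic and substitutes a brute-force order search for the quantum step, so it is only practical for tiny numbers. The function names are illustrative.

```python
from math import gcd

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step a quantum computer would speed up in Shor's algorithm.
    Requires gcd(a, n) == 1, otherwise no such r exists.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a: int, n: int):
    """Turn the order of a modulo n into two nontrivial factors, if possible."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # lucky guess: a already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root: retry with a different a
    return gcd(half - 1, n), gcd(half + 1, n)

print(factor_from_order(7, 15))  # (3, 5)
```

For N = 15 and a = 7 the order is 4, and the two greatest common divisors give the factors 3 and 5. For a 2048-bit RSA modulus the brute-force loop is hopeless, which is where the quantum speedup would matter.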

Artificial intelligence might benefit from quantum speedups in certain machine learning algorithms, though this remains controversial. Some researchers argue quantum computers could dramatically accelerate training for specific neural network architectures. Others question whether quantum advantage in AI will materialize.

Despite the hype, quantum computers face severe constraints that won't disappear soon.

Environmental sensitivity is brutal. Qubits decohere in microseconds to milliseconds, losing their quantum properties through interaction with the environment. Building quantum computers means constructing the most isolated systems in existence, shielding them from heat, electromagnetic radiation, and vibrations. Even cosmic rays from space can corrupt quantum calculations.

Scalability presents daunting engineering challenges. Adding qubits isn't like adding transistors to a chip. Each qubit needs individual control systems, error correction, and isolation from neighbors. The largest announced processors have on the order of a thousand physical qubits, and the systems with the best fidelities are considerably smaller. Reaching the hundreds of thousands or millions needed for fault tolerance requires fundamentally new architectures.

Algorithm design is incredibly difficult. Most quantum algorithms require deep physics and mathematics expertise. Unlike classical programming where millions write code, perhaps thousands understand quantum algorithm design. Research suggests most computational problems don't have quantum speedups at all.

Cost remains prohibitive. Quantum computers cost tens of millions to build and maintain. Operating them requires specialized facilities and expert staff. Cloud access democratizes availability, but computation time is expensive. Only high-value problems justify quantum computing's cost.

So when will quantum computing affect your life? The honest answer: it already does, just indirectly. Pharmaceutical researchers using quantum computers to design better drugs might save your life someday. Financial institutions using quantum optimization might offer better loan rates. Materials scientists might create more efficient solar panels.

Direct interaction with quantum computers? That's further out. Expert consensus suggests three phases:

2025-2030: Quantum Utility Era - Quantum computers solve specific problems better than classical computers for industries willing to pay premium prices. Most people never directly interact with quantum technology but benefit from drugs, materials, and financial products developed using quantum computing.

2030-2040: Hybrid Classical-Quantum Era - Cloud quantum computing becomes accessible to researchers and developers beyond quantum specialists. Classical computers automatically offload quantum-native problems to quantum processors. You might use quantum computing without knowing it, similar to how GPUs accelerate graphics without user awareness.

2040+: Quantum Integration Era - If error correction scales successfully, quantum computers might become common in data centers. This depends on solving enormous engineering challenges. Some experts think scalable quantum computers are impossible. Others believe we're on track. The debate continues.

Nations recognize quantum computing as strategically vital. The US invested $1.2 billion through the National Quantum Initiative. China reportedly invests several billion annually. The EU has a €1 billion quantum flagship program. Australia, Canada, Japan, and others have national quantum strategies.

The race isn't just about economic advantage. Quantum computers threaten current encryption, risking national security if adversaries develop them first. Conversely, quantum-secure communications promise unbreakable encryption. Military applications include improved sensing, navigation without GPS, and optimization for logistics.

This creates an interesting dynamic. Quantum computing requires global scientific collaboration to advance rapidly. But national security concerns encourage secrecy and protectionism. The tension between cooperation and competition will shape how fast quantum computing develops and who controls it.

If you're in technology, pharmaceuticals, finance, materials science, or cybersecurity, quantum literacy is becoming essential. Not everyone needs to program quantum computers, but understanding which problems are quantum-native and how to use quantum resources through cloud platforms provides a competitive advantage.

For everyone else, the biggest immediate concern is post-quantum cryptography. Organizations must upgrade encryption systems before quantum computers can break current standards. The transition is underway: NIST published its first finalized post-quantum standards (FIPS 203, 204, and 205) in August 2024. If you handle sensitive data, ensure your systems are quantum-resistant.

The deeper implication is philosophical. Quantum mechanics is deeply counterintuitive. Building technologies that exploit quantum phenomena forces us to accept that reality operates by rules that violate common sense. Particles existing in multiple states simultaneously. Measurement outcomes correlated across any distance, with no signal passing between them. Results that are fundamentally random rather than determined in advance. Quantum computing makes the abstract math of quantum mechanics tangible and practically useful.

Google's Sycamore achievement in 2019 proved quantum supremacy was possible. The years since have shown quantum advantage is achievable for practical problems. The next phase is scaling quantum systems to the point where they solve problems that change industries.

We're close. Current quantum computers are roughly where classical computers were in the 1950s: expensive, finicky, requiring expert operation, but demonstrably useful for specific tasks. The transistor revolution took decades to produce personal computers. Quantum computing might follow a similar trajectory.

Or it might not. Quantum systems face physical constraints classical computers don't. Error correction might require resources that make scaling impossible. Alternative computing paradigms like neuromorphic chips might solve many problems quantum computers target without quantum mechanics' complications.

What's certain is that quantum supremacy isn't a single moment or achievement. It's a gradual transition as quantum computers solve increasingly important problems better than classical alternatives. We're living through that transition now, witnessing the birth of a fundamentally new computing paradigm. Whether it transforms civilization or becomes a specialized tool for niche applications, the problems quantum computers have already solved prove one thing: the future of computing isn't just faster classical machines. It's learning to compute with the universe's most fundamental rules.

