TL;DR: Quantum computers will soon break today's encryption. NIST's new post-quantum cryptography standards use lattice-based mathematics to protect against this threat. Organizations must begin migrating now to avoid catastrophic data breaches.

Your encrypted messages, bank transactions, and state secrets are protected by mathematical problems so hard that classical computers would need millennia to crack them. But quantum computers don't play by those rules. Within the next decade, a sufficiently powerful quantum machine could shatter the encryption protecting nearly everything digital, and adversaries are already harvesting encrypted data to decrypt once they build the right quantum computer. The countdown to what cybersecurity experts call "Q-Day" has begun.

Here's the nightmare scenario keeping cryptographers up at night: Shor's algorithm, a quantum algorithm published in 1994, can factor enormous numbers exponentially faster than the best known classical methods. That matters because RSA encryption, which protects over 90% of internet connections during the SSL/TLS handshake, relies entirely on the difficulty of factoring large numbers. Elliptic curve cryptography (ECC), the other pillar of modern encryption, rests on a different problem, the elliptic-curve discrete logarithm, but Shor's algorithm solves that one efficiently too.
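
To make the dependence on factoring concrete, here is a minimal sketch using a deliberately tiny textbook RSA key. The modulus, exponent, and helper names are illustrative assumptions, not taken from any real system, and trial division stands in for the factoring step that Shor's algorithm would perform on real key sizes.

```python
from math import isqrt

def factor_semiprime(n: int) -> tuple[int, int]:
    """Trial division: fine for a toy modulus, hopeless for a 2048-bit one."""
    for p in range(2, isqrt(n) + 1):
        if n % p == 0:
            return p, n // p
    raise ValueError("n has no nontrivial factor")

def recover_private_exponent(n: int, e: int) -> int:
    """Once n is factored, the private exponent follows from simple arithmetic."""
    p, q = factor_semiprime(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse (Python 3.8+)

# Toy public key (n = 61 * 53); real keys use primes hundreds of digits long.
n, e = 3233, 17
message = 65
ciphertext = pow(message, e, n)          # what an eavesdropper records

d = recover_private_exponent(n, e)       # attacker only needs the public key
recovered = pow(ciphertext, d, n)
print(f"private exponent: {d}, recovered message: {recovered}")
```

Nothing secret is used by the attacker here: the public key alone is enough once factoring becomes cheap, which is exactly the capability a large quantum computer would provide.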

The scale of vulnerability is staggering. Your WhatsApp messages, online banking, digital signatures, VPN connections, blockchain transactions - essentially all secure digital communications - depend on mathematical problems that quantum computers could solve in hours or days instead of billions of years.

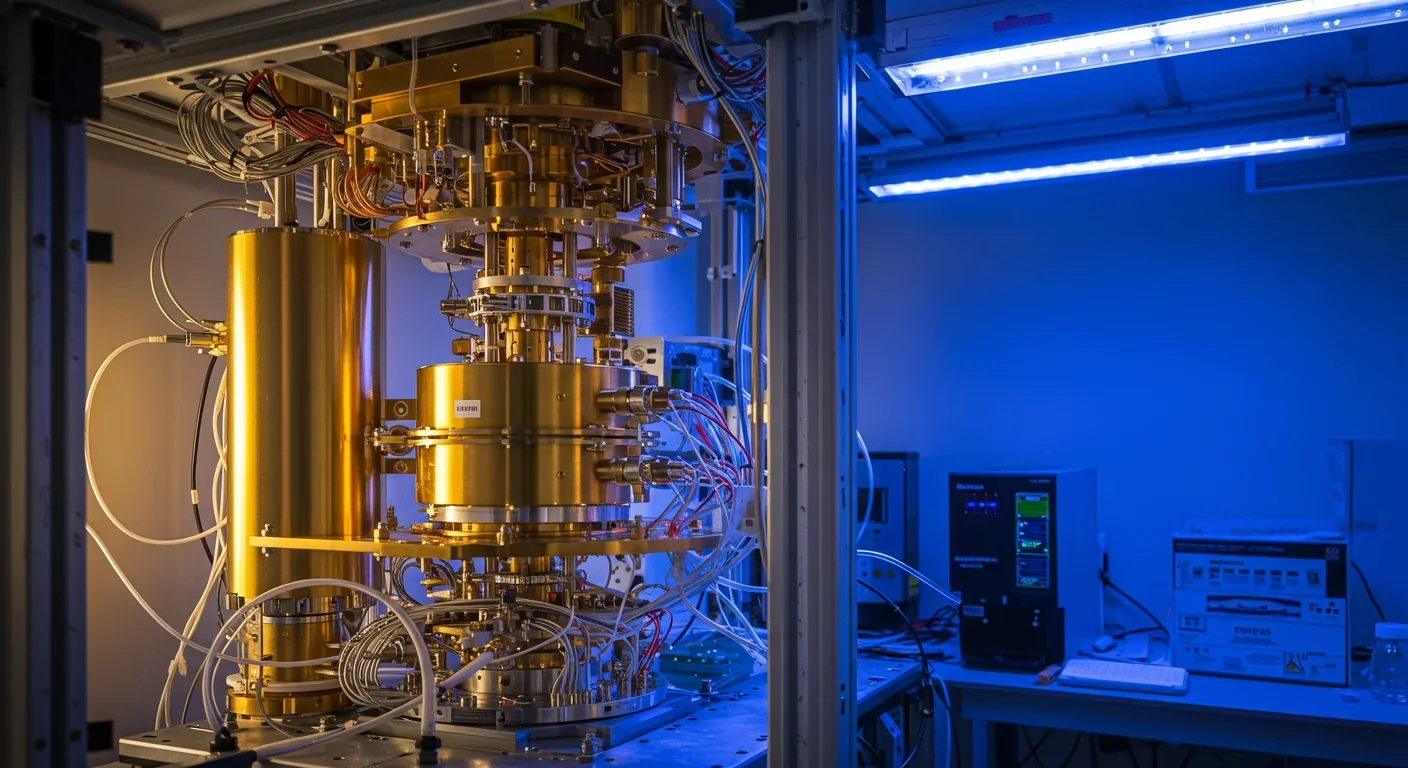

What's changed recently is the timeline. While an early demonstration in 2001 factored only the number 15 using a 7-qubit IBM quantum computer, today's machines are scaling rapidly. Breaking a 2048-bit RSA key would require thousands of logical qubits, which most published estimates translate into millions of physical qubits once error correction is accounted for, but experts now predict that cryptographically relevant quantum computers could emerge between 2030 and 2035 - maybe sooner.

Even more alarming is the harvest-now-decrypt-later threat. Nation-states and sophisticated actors are already intercepting and storing encrypted communications, betting they'll be able to decrypt everything once quantum computers mature. If your organization handles sensitive data with a secrecy lifespan beyond 10-15 years - medical records, government communications, trade secrets, infrastructure plans - that data is effectively compromised right now.

Recognizing the existential threat, the National Institute of Standards and Technology launched a global competition in 2016 to identify quantum-resistant algorithms. After years of rigorous analysis, public scrutiny, and iterative testing across multiple rounds, NIST made history on August 13, 2024, by releasing the first three finalized post-quantum cryptography standards.

These aren't minor tweaks - they represent a fundamental reimagining of how we protect digital information:

FIPS 203 (ML-KEM): Previously known as CRYSTALS-Kyber, this lattice-based algorithm handles key encapsulation, the process of securely establishing encryption keys over public channels. Its public (encapsulation) keys range from 800 to 1,568 bytes depending on the parameter set, several times larger than the 256-byte modulus of an RSA-2048 key, and its strongest parameter set, ML-KEM-1024, targets a security level comparable to AES-256. It is designed to resist both classical and quantum attacks.

FIPS 204 (ML-DSA): Formerly CRYSTALS-Dilithium, this provides digital signatures for authenticating messages and verifying identity. Digital signatures prove who sent a message and that it hasn't been tampered with - critical for everything from software updates to legal contracts.

FIPS 205 (SLH-DSA): Based on SPHINCS+, this hash-based signature algorithm serves as a backup to ML-DSA, using a completely different mathematical approach to ensure diversity in case one method is compromised.

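Then in March 2025, NIST selected a fifth algorithm, HQC (Hamming Quasi-Cyclic), for standardization as an additional backup for key encapsulation. (The fourth is FALCON, a lattice-based signature scheme chosen in 2022 whose standard was not part of the August 2024 release.) HQC's code-based approach provides crucial redundancy - if a breakthrough somehow cracks lattice-based cryptography, the system doesn't collapse entirely.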

The diversity strategy is intentional. NIST's standardization process incorporated intellectual property clearances to avoid licensing disputes and used iterative public rounds to catch vulnerabilities before algorithms were finalized. Even during standardization, researchers discovered attacks that forced modifications, like side-channel electromagnetic attacks on FALCON that required masking mitigations.

So what makes these new algorithms quantum-proof when RSA and ECC are vulnerable? It comes down to the mathematical problems they're based on.

Lattice-based cryptography, the foundation for ML-KEM and ML-DSA, relies on the difficulty of problems such as finding the shortest vector in a high-dimensional lattice - imagine searching for the nearest point on a regular grid that extends through hundreds of dimensions. These problems are believed to remain hard even for quantum computers because they lack the periodic structure that Shor's algorithm exploits.

Cloudflare's cryptography team explains the beauty of lattice cryptography: operations are based on simple polynomial arithmetic, making encryption and decryption fast, while the underlying mathematical structure ensures that breaking the system requires solving a problem that scales exponentially in difficulty even for quantum attackers.

The practical advantages are striking. ML-KEM generates a shared secret key using straightforward matrix and polynomial multiplications, operations that modern processors handle efficiently. The arithmetic is simpler than RSA's big-integer exponentiation, although implementations still have to be written carefully to avoid timing and other side-channel leaks.
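
The flavor of this arithmetic can be sketched with a toy "learning with errors" scheme that encrypts a single bit. This is not ML-KEM: the dimensions are far too small to be secure, Python's `random` module is not a cryptographic generator, and every name below is a hypothetical choice for illustration. Only the modulus 3329 is borrowed from ML-KEM.

```python
import random

N, M, Q = 8, 16, 3329  # toy secret length, number of samples, modulus

def keygen(rng):
    """Public key: random matrix A and noisy products b = A*s + e (mod Q)."""
    s = [rng.randrange(Q) for _ in range(N)]
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    e = [rng.choice((-1, 0, 1)) for _ in range(M)]  # small intentional errors
    b = [(sum(a * x for a, x in zip(row, s)) + err) % Q
         for row, err in zip(A, e)]
    return (A, b), s

def encrypt(public_key, bit, rng):
    """Add up a random subset of samples and hide the bit at Q/2."""
    A, b = public_key
    rows = [i for i in range(M) if rng.random() < 0.5]
    u = [sum(A[i][j] for i in rows) % Q for j in range(N)]
    v = (sum(b[i] for i in rows) + bit * (Q // 2)) % Q
    return u, v

def decrypt(secret, ciphertext):
    """Remove u*s; what remains is small noise (bit 0) or about Q/2 (bit 1)."""
    u, v = ciphertext
    noisy = (v - sum(x * y for x, y in zip(u, secret))) % Q
    return 1 if Q // 4 < noisy < 3 * Q // 4 else 0

rng = random.Random(2024)
public_key, secret = keygen(rng)
bits = [1, 0, 1, 1, 0]
decrypted = [decrypt(secret, encrypt(public_key, bit, rng)) for bit in bits]
print(decrypted)
```

Decryption always succeeds here because at most 16 errors of size 1 are summed, far below the Q/4 decision threshold. An attacker who sees only `A` and `b` has to separate the secret from the noise, which is the lattice problem the schemes rely on.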

Hash-based signatures like SLH-DSA take a different route. They build security on the one-way nature of cryptographic hash functions - it's easy to hash data but virtually impossible to reverse the process. These schemes have been studied for decades and are extremely well-understood, which is why NIST selected them as a conservative backup option.
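
The core idea can be illustrated with a Lamport one-time signature, a much older and simpler hash-based scheme. SLH-DSA is a far more elaborate construction that can sign many messages, so treat this only as a sketch of the principle; the function names are assumptions made for the example.

```python
import hashlib
import secrets

def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def keygen():
    """Secret key: 256 pairs of random values. Public key: their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(zero), H(one)) for zero, one in sk]
    return sk, pk

def message_bits(message: bytes):
    """The 256 bits of the message digest, most significant bit first."""
    digest = H(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]

def sign(sk, message: bytes):
    """Reveal one secret value per digest bit. A key must sign only once."""
    return [sk[i][bit] for i, bit in enumerate(message_bits(message))]

def verify(pk, message: bytes, signature) -> bool:
    """Hash each revealed value and compare with the published hash."""
    bits = message_bits(message)
    return len(signature) == 256 and all(
        H(value) == pk[i][bit]
        for i, (bit, value) in enumerate(zip(bits, signature))
    )

sk, pk = keygen()
signature = sign(sk, b"firmware v1.2")
print(verify(pk, b"firmware v1.2", signature))   # genuine message
print(verify(pk, b"firmware v1.3", signature))   # tampered message
```

Forging a signature would require finding an input that hashes to one of the unrevealed public values, which is the one-way property the paragraph above describes.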

Code-based cryptography, exemplified by HQC, relies on the difficulty of decoding general linear codes - essentially solving equations with intentional errors introduced. This approach has withstood scrutiny since the 1970s and offers a mathematically distinct security foundation from lattice problems.

Understanding the algorithms is one thing. Actually deploying them across billions of devices and trillions of encrypted connections is an engineering challenge of unprecedented scale.

NIST's draft transition report lays out the staggering complexity: organizations must inventory every system using cryptography, assess quantum vulnerability, prioritize critical systems, test new implementations, and migrate without disrupting operations. All while maintaining backward compatibility with systems that can't be updated.

The performance considerations alone require careful planning. While recent analyses show that post-quantum algorithms perform reasonably well on modern hardware - ML-KEM key generation takes only microseconds on contemporary processors - some operations are slower than their classical equivalents. Digital signature verification with ML-DSA, for instance, can be 2-10 times slower than RSA depending on security parameters.

Key sizes are another practical concern. A 2048-bit RSA public key fits comfortably in network protocols designed decades ago. Post-quantum certificates and keys are often larger, sometimes requiring updates to protocols that assumed cryptographic material would stay small. This affects everything from TLS handshakes to firmware updates on embedded devices.
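
A rough back-of-the-envelope calculation shows the size difference. The ML-KEM figures are the encapsulation-key and ciphertext lengths listed in FIPS 203, and 32 bytes is the size of an X25519 public key; the dictionary layout and the simplification that a client sends a public key and a server answers with a ciphertext are assumptions for this sketch.

```python
# Bytes sent by each side of a key exchange.
KEY_EXCHANGE_SIZES = {
    "X25519":      {"client_share": 32,   "server_share": 32},
    "ML-KEM-512":  {"client_share": 800,  "server_share": 768},
    "ML-KEM-768":  {"client_share": 1184, "server_share": 1088},
    "ML-KEM-1024": {"client_share": 1568, "server_share": 1568},
}

def hybrid_shares(classical: str, post_quantum: str) -> dict:
    """Total bytes per side when both key exchanges run side by side."""
    c = KEY_EXCHANGE_SIZES[classical]
    pq = KEY_EXCHANGE_SIZES[post_quantum]
    return {side: c[side] + pq[side] for side in ("client_share", "server_share")}

classical_only = KEY_EXCHANGE_SIZES["X25519"]
hybrid = hybrid_shares("X25519", "ML-KEM-768")
growth = hybrid["client_share"] / classical_only["client_share"]
print(hybrid, f"client share grows {growth:.0f}x")
```

A jump from tens of bytes to more than a kilobyte per side is small for a laptop, but it can matter for protocols with fixed-size fields or for constrained embedded links.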

The real nightmare is legacy systems. Critical infrastructure - power grids, medical devices, industrial control systems - often runs on equipment designed to last 20-30 years. Some devices can't be updated remotely or economically. Organizations are discovering that complete migration might require replacing hardware, not just updating software.

Given the uncertainties around both quantum computing timelines and the long-term security of new algorithms, a pragmatic middle path has emerged: hybrid cryptography.

The concept is straightforward - use both classical and post-quantum algorithms together. A TLS connection might combine RSA or ECDH with ML-KEM, so data remains secure even if one algorithm fails. If quantum computers take longer to arrive than expected, RSA still protects you. If a subtle flaw emerges in lattice cryptography, RSA buys you time.
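
One common way to combine the two results is to feed both shared secrets into a key derivation function, so the session key stays unpredictable as long as either input does. The sketch below assumes each key exchange has already produced a 32-byte secret (random bytes stand in for them here) and uses HKDF with SHA-256 from RFC 5869; the label `hybrid-kex-demo` and the function names are hypothetical, and real protocols define their own exact combiner.

```python
import hashlib
import hmac
import secrets

def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a key, then expand it."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]

def combine_shared_secrets(classical: bytes, post_quantum: bytes) -> bytes:
    """Derive one session key from both secrets (fixed 32-byte inputs)."""
    if len(classical) != 32 or len(post_quantum) != 32:
        raise ValueError("this sketch expects two 32-byte secrets")
    return hkdf_sha256(classical + post_quantum,
                       salt=bytes(32), info=b"hybrid-kex-demo")

# Stand-ins for the outputs of an ECDH exchange and an ML-KEM decapsulation.
ecdh_secret = secrets.token_bytes(32)
mlkem_secret = secrets.token_bytes(32)
session_key = combine_shared_secrets(ecdh_secret, mlkem_secret)
print(session_key.hex())
```

Requiring fixed-length inputs keeps the concatenation unambiguous; a combiner that accepts variable-length secrets would need explicit length prefixes.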

Recent research demonstrates hybrid frameworks combining classical cryptography, post-quantum algorithms, and even quantum key distribution (QKD) for ultra-sensitive applications. The approach adds complexity but provides defense in depth.

Major tech companies are embracing this strategy. Google added quantum-resistant signatures to Cloud KMS, implementing hybrid schemes that combine classical and post-quantum methods. Microsoft rolled out post-quantum cryptography to Windows Insiders and Linux systems, allowing developers to test hybrid implementations.

But hybrid approaches aren't without controversy. Critics point out that combining algorithms enlarges the attack surface: a flaw in either implementation, or in the code that glues them together, can undermine the whole connection. Performance suffers when you're running two complete cryptographic operations for every secure communication. And complexity breeds implementation bugs, historically the source of more breaches than algorithmic weaknesses.

Despite the debates, hybrids represent the pragmatic reality for most organizations during the transition period, which could stretch across a decade or more.

The race to implement post-quantum cryptography is well underway, driven by governments, financial institutions, and tech giants who recognize they can't wait for Q-Day to arrive.

The U.S. government is leading with aggressive mandates. The White House is preparing executive actions on quantum technology and post-quantum cybersecurity. Federal agencies are being pressed to adopt post-quantum cryptography in government acquisitions, with requirements that new systems support quantum-resistant algorithms.

The NSA released its Commercial National Security Algorithm Suite 2.0 in 2022, recommending specific post-quantum algorithms for protecting national security systems. The timeline is tight: classified systems must complete migration by 2030, and systems handling sensitive but unclassified information must transition by 2033.

Internationally, the UK set an official timeline and roadmap for post-quantum cryptography migration in March 2025, coordinating efforts across government and critical national infrastructure. European institutions are developing similar frameworks, recognizing that quantum threats don't respect borders.

The financial sector faces particularly acute pressure. Banks, payment processors, and stock exchanges handle data with long-term value - transaction records, customer information, trading strategies. Industry analyses argue that finance must lead the shift because compromised cryptography could undermine trust in the entire system. Major financial institutions are already piloting post-quantum implementations for high-value transactions.

Technology companies are integrating post-quantum algorithms into products. Cisco is updating its security portfolio to support new standards. Cloud providers are offering post-quantum options for customers who want to get ahead of the curve. Open-source projects are adding support, ensuring that even small organizations can access quantum-resistant tools.

Predicting when a cryptographically relevant quantum computer will emerge is notoriously difficult, mixing scientific capability, engineering challenges, and unknowable breakthroughs.

Timeline analyses show expert opinion ranges from wildly optimistic to extremely conservative. Some researchers believe we'll see cryptanalytically relevant quantum computers by 2030. Others argue fundamental physics barriers mean we're decades away, if it's possible at all at practical scale.

Marin's Q-Day predictions survey experts and compile estimates, finding consensus that 2030-2035 represents the earliest plausible window for breaking RSA-2048, with significant uncertainty extending well beyond 2040.

The trouble with Q-Day predictions is that they depend on solving multiple hard problems simultaneously: building millions of stable qubits, achieving fault-tolerant quantum error correction, developing efficient quantum algorithms, and engineering systems that can maintain quantum states long enough to complete factorization.

Recent claims that Chinese researchers broke military-grade encryption with quantum computers turned out to be misleading. They factored a 22-bit number - a far cry from the 2048-bit keys protecting real systems - and used a hybrid classical-quantum approach that doesn't scale to cryptographically relevant sizes.

The sobering reality is that we don't know when Q-Day will arrive. It could be 2030. It could be 2050. Or never, if quantum error correction proves fundamentally impractical at the required scale. But the consequences of being wrong are catastrophic, which is why the cryptographic community is racing to deploy defenses before the threat materializes.

The good news is you don't need to panic, but you absolutely should prepare.

For organizations, experts recommend starting with a cryptographic inventory: identify every system using public-key cryptography, document what algorithms they use, assess how long the data needs to remain secure, and prioritize systems handling information with long secrecy requirements.

Critical steps include:

Assess risk timeline: If your data only needs to stay secret for a year, harvest-now-decrypt-later threats are minimal. If you're handling state secrets, medical records, or long-term business strategy, you're already at risk.

Test post-quantum implementations: Major vendors are releasing products with post-quantum support. Start pilot programs to understand performance impacts and integration challenges before you're forced to migrate under time pressure.

Plan for hybrid deployments: Unless you're protecting ultra-sensitive data requiring immediate action, hybrid approaches let you add quantum resistance without abandoning proven classical methods.

Update procurement requirements: When purchasing new systems, specify support for post-quantum algorithms so you're not buying equipment that's cryptographically obsolete before it's even deployed.

Monitor standardization: NIST continues refining standards and releasing guidance. Follow developments so your migration strategy aligns with evolving best practices.
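
The risk-timeline step in the list above can be turned into a rough calculation. A common rule of thumb, usually attributed to Michele Mosca and not taken from this article, says data is exposed when its required secrecy period plus the time needed to migrate exceeds the time until a capable quantum computer exists. The system names and year figures below are made-up placeholders.

```python
from dataclasses import dataclass

@dataclass
class System:
    name: str
    secrecy_years: float    # how long the data must stay confidential
    migration_years: float  # how long migrating this system is expected to take

def exposure_years(system: System, years_until_quantum: float) -> float:
    """Positive result: data would still be sensitive after Q-Day."""
    return system.secrecy_years + system.migration_years - years_until_quantum

def prioritize(systems, years_until_quantum: float):
    """Return the exposed systems, most exposed first."""
    exposed = [s for s in systems if exposure_years(s, years_until_quantum) > 0]
    return sorted(exposed,
                  key=lambda s: exposure_years(s, years_until_quantum),
                  reverse=True)

inventory = [
    System("session tokens", secrecy_years=1, migration_years=1),
    System("medical records", secrecy_years=25, migration_years=5),
    System("trade secrets", secrecy_years=15, migration_years=3),
]

# Assumed planning horizon; the true value is unknown, so try several.
for system in prioritize(inventory, years_until_quantum=10):
    print(system.name, exposure_years(system, 10))
```

Because the arrival date of a cryptographically relevant quantum computer is so uncertain, it is worth rerunning the ranking with several values of `years_until_quantum` rather than trusting a single estimate.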

For individuals, the transition will be largely invisible - software updates will handle most of it. But understanding the shift helps you make informed choices. Prefer services and devices from vendors actively implementing post-quantum cryptography. Be skeptical of products marketed as "quantum-proof" without specifying which NIST-standardized algorithms they use.

The energy cost alone tells you how far we are from practical quantum threats. Breaking RSA-2048 with a quantum computer would require enormous energy - some estimates suggest megawatt-hours for a single factorization, making it impractical for mass surveillance even if the hardware existed.

The transition to post-quantum cryptography represents one of the most significant technology migrations in history, touching every encrypted system on the planet. Unlike the shift to HTTPS or the IPv6 transition - both of which are still incomplete after decades - we can't afford to be gradual. The harvest-now-decrypt-later threat means the clock is already running on data encrypted today with vulnerable algorithms.

Comparative studies of classical and post-quantum algorithms show that the new standards offer security advantages beyond quantum resistance. Many post-quantum schemes have simpler implementation requirements, reducing the attack surface from implementation bugs. The mathematical foundations are more transparent and better understood than some currently deployed systems.

Industry deployment analyses suggest that organizations starting migration now will complete the transition over 5-10 years - right in line with when quantum threats might materialize. Those who wait risk a panicked, expensive migration under crisis conditions.

What's remarkable about this moment is the global coordination. NIST's standardization process drew submissions from researchers worldwide, subjected candidates to years of public scrutiny, and produced standards that governments and companies across the world are adopting without fragmentation into incompatible regional approaches.

We're witnessing cryptography evolve from protecting data against computational limits to protecting against entirely new kinds of machines that exploit quantum mechanics itself. The mathematics is elegant, the engineering is formidable, and the stakes couldn't be higher.

Your encrypted data won't protect itself. But with the right preparation, the quantum threat becomes manageable - one more evolution in the endless cat-and-mouse game between security and those who would break it.
