Cache Coherence Protocols: MESI and MOESI Explained

TL;DR: Differential privacy and federated analytics are transforming data analysis by enabling organizations to extract insights while mathematically guaranteeing individual privacy. Major tech companies and government agencies are already deploying these technologies to balance innovation with protection.

Within five years, how you share personal data will change completely. Two technologies - differential privacy and federated analytics - are quietly rewriting the rules. They let organizations extract insights without seeing your raw information. No more choosing between privacy and innovation. You get both. Tech giants like Apple and Google already use these methods to collect usage data while mathematically bounding what can be learned about any one user. Hospitals are analyzing patient records across institutions without transferring a single file. Financial firms are detecting fraud patterns while keeping transactions local. The shift is subtle but profound: data can now stay private while still being useful.

Differential privacy works by injecting noise - random, carefully calibrated distortions - into query results or released statistics rather than publishing exact values. The core idea is counterintuitive: add errors to tell the truth more safely. When the U.S. Census Bureau released 2020 population counts, they used differential privacy mechanisms to limit the risk that any individual household could be identified, even by sophisticated attackers with access to auxiliary data.

Here's the technical foundation. Every query has a "sensitivity" - the maximum amount one person's data could change the result. If you're counting people in a city and one person opts out, the count changes by exactly one. Differential privacy adds noise proportional to that sensitivity, drawn from distributions like Laplace or Gaussian. The privacy budget, denoted epsilon (ε), controls the trade-off. Smaller epsilon means more noise and stronger privacy but less accuracy. Larger epsilon gives clearer results but weaker guarantees.
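To make the sensitivity and epsilon relationship concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names (`laplace_noise`, `private_count`) and the sample data are illustrative assumptions, not part of any library mentioned in this article, and sampling noise with ordinary floating-point arithmetic like this is fine for building intuition but not for production use.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Counting query released with Laplace noise of scale sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0  # adding or removing one person changes a count by at most 1
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical data: ages of ten residents; the true count of people aged 60+ is 4.
rng = random.Random(0)
ages = [23, 35, 41, 58, 62, 67, 71, 19, 44, 80]
for eps in (0.1, 1.0, 10.0):
    noisy = private_count(ages, lambda a: a >= 60, epsilon=eps, rng=rng)
    print(f"epsilon={eps}: noisy count = {noisy:.2f}")
```

Running it with several epsilon values shows the trade-off directly: the smaller the epsilon, the further the released count tends to drift from the true value.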

NIST finalized formal guidelines in early 2025, standardizing how organizations should evaluate differential privacy claims. The framework specifies that a mechanism is ε-differentially private if, for any two datasets differing by one record, the probability of any output changes by at most a factor of e^ε. That mathematical rigor is what separates this from vague "anonymization" promises that have failed repeatedly.
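Written out, the definition reads as follows. The symbols are a notational choice made here rather than a quotation from the NIST text: $M$ is the randomized mechanism, $D$ and $D'$ are any two datasets differing in one record, and $S$ is any set of possible outputs.

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[M(D') \in S]
$$

Because $D$ and $D'$ can be swapped, the bound holds in both directions. For small $\varepsilon$, $e^{\varepsilon} \approx 1 + \varepsilon$, so an $\varepsilon$ of 0.1 means no output becomes more than roughly 10% more or less likely because of any one person's record.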

The beauty lies in composition. You can run multiple queries, and privacy guarantees still hold - though your budget depletes with each query. Researchers use composition theorems to track cumulative privacy loss. After ten queries, you have a provable upper bound on how much privacy you've spent; under basic composition, it is simply the sum of the epsilons used. Run out of budget, and you stop querying. This makes privacy accountable in a way traditional methods never were.
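Budget tracking can be sketched in a few lines. The example below assumes basic (sequential) composition, where the epsilons of successive queries simply add up; tighter accounting methods exist, and the `PrivacyBudget` class is a hypothetical helper rather than an API from any real library.

```python
class PrivacyBudget:
    """Tracks cumulative epsilon under basic (sequential) composition."""

    def __init__(self, total_epsilon: float):
        self.total = total_epsilon
        self.spent = 0.0

    def charge(self, epsilon: float) -> None:
        """Record a query's cost, refusing it if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance so accumulated floating-point error does not
        # reject a query that exactly uses up the budget.
        if self.spent + epsilon > self.total + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon

    @property
    def remaining(self) -> float:
        return max(self.total - self.spent, 0.0)

budget = PrivacyBudget(total_epsilon=1.0)
for _ in range(10):
    budget.charge(0.1)  # ten queries at epsilon = 0.1 each
print(f"remaining budget: {budget.remaining:.2f}")

try:
    budget.charge(0.1)  # an eleventh query would exceed the budget
except RuntimeError as err:
    print(f"query refused: {err}")
```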

Practical tools have matured quickly. Google's Differential Privacy Project and OpenDP from Microsoft and Harvard provide open-source libraries with battle-tested implementations. They handle the tricky parts - selecting noise distributions, clipping outliers, managing budgets - so data scientists can deploy privacy without a PhD in cryptography.

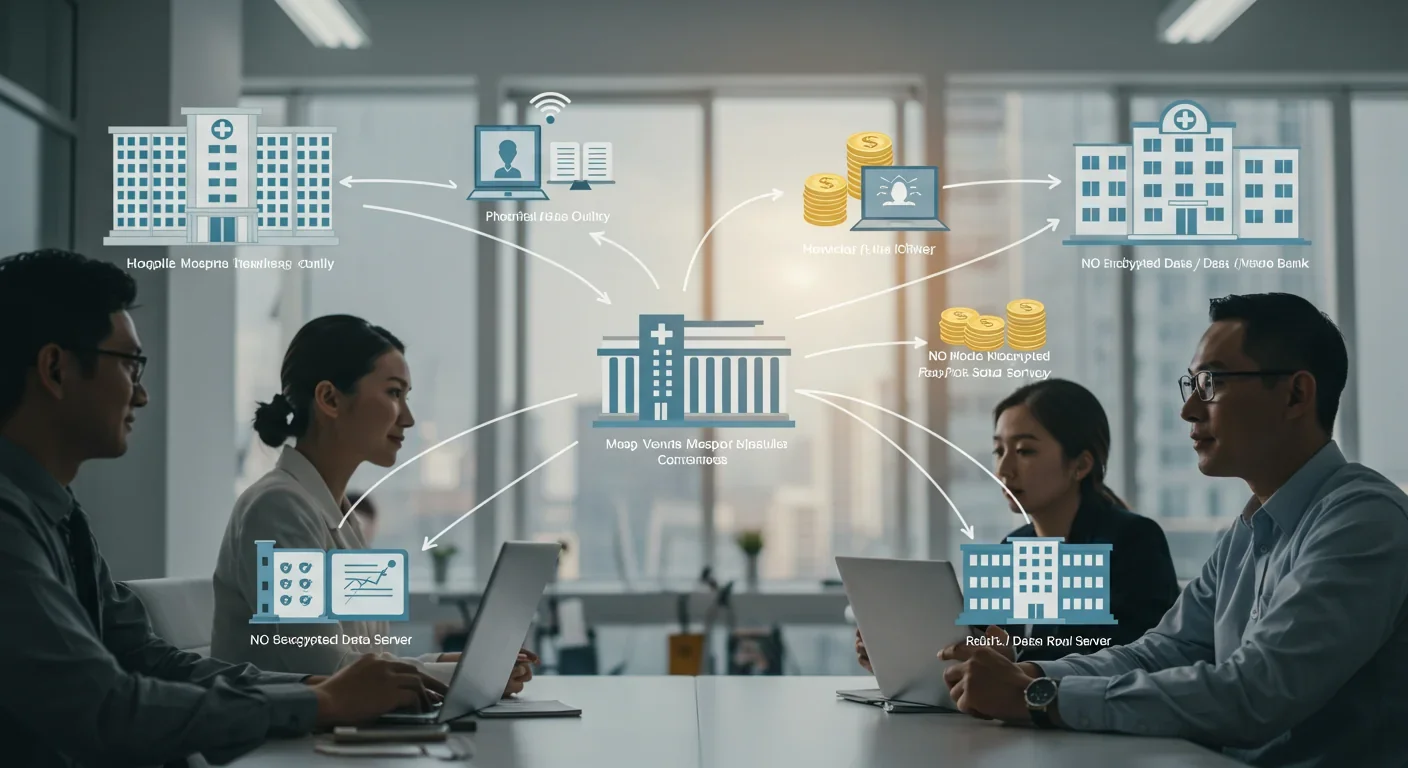

Federated analytics flips the script. Instead of centralizing data and then protecting it, you never centralize it at all. Computation travels to the data, not the other way around. Each institution - hospital, bank, research lab - runs the same analysis locally. Only aggregated results leave the premises. Raw data never moves.

The architecture is elegant. A central coordinator distributes an analytical query or model to participating nodes. Each node computes results on its own dataset. Nodes return encrypted or aggregated outputs, which the coordinator combines. The coordinator never sees individual records, only summaries. If you're training a fraud detection model across fifty banks, each bank trains the model on its transactions and sends back updated model weights. The coordinator averages those weights into a global model. No bank reveals its customer data.
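The train-locally-then-average loop can be sketched with a deliberately tiny model. This is a simplified illustration of weighted federated averaging, assuming a one-parameter model `y ≈ w * x`, three made-up node datasets, and averaging weighted by sample count; real deployments add encryption, client sampling, and failure handling.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one node

def local_update(w: float, data: Dataset, lr: float = 0.01, steps: int = 20) -> float:
    """Run a few gradient-descent steps for y ≈ w * x on one node's own data."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_round(w_global: float, nodes: List[Dataset]) -> float:
    """One round: nodes train locally; the coordinator only sees weights and counts."""
    updates = [(local_update(w_global, d), len(d)) for d in nodes]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total  # average weighted by sample count

# Hypothetical local datasets, all roughly following y = 2x.
nodes = [
    [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)],
    [(1.5, 3.0), (2.5, 5.1)],
    [(0.5, 1.0), (4.0, 8.1), (3.5, 6.9), (2.0, 4.0)],
]

w = 0.0
for _ in range(10):
    w = federated_round(w, nodes)
print(f"global weight after 10 rounds: {w:.3f}")
```

Only the updated weight and the number of local samples leave each node; the `(x, y)` pairs never appear in anything the coordinator handles.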

Privacy protections are multilayered. Secure aggregation ensures the coordinator can't inspect individual contributions, only the sum. Homomorphic encryption allows computation on encrypted data, so even during processing, plaintext stays hidden. Differential privacy can be injected at the node level before aggregation, adding a second shield.
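The idea behind secure aggregation can be illustrated with pairwise masks that cancel in the sum. This is a toy sketch under strong assumptions: a single random generator stands in for the pairwise key agreement a real protocol would use, no node drops out, and the names `masked_inputs` and `aggregate` are hypothetical.

```python
import random

MODULUS = 2**32  # all arithmetic is done modulo a fixed value

def masked_inputs(values, rng):
    """Each pair of nodes (i, j) shares a random mask: i adds it, j subtracts it."""
    n = len(values)
    masked = [v % MODULUS for v in values]
    for i in range(n):
        for j in range(i + 1, n):
            mask = rng.randrange(MODULUS)
            masked[i] = (masked[i] + mask) % MODULUS
            masked[j] = (masked[j] - mask) % MODULUS
    return masked

def aggregate(masked):
    """The coordinator sums the masked values; the pairwise masks cancel out."""
    return sum(masked) % MODULUS

rng = random.Random(42)
private_values = [12, 7, 30]  # one value per node
masked = masked_inputs(private_values, rng)
print("what the coordinator receives:", masked)
print("recovered sum:", aggregate(masked))
```

Each masked value on its own looks like a random number, yet the total is exact because every mask is added once and subtracted once.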

Healthcare is a natural fit. Federated analytics platforms let researchers query electronic health records across hospitals without violating HIPAA. A study on rare diseases might need data from a hundred institutions. Centralized databases would require massive legal overhead and put patient privacy at risk. Federated systems bypass both issues. Each hospital keeps its records behind its firewall. The research team gets statistically valid results.

Finance is equally promising. Banks want to detect money laundering patterns that span institutions, but sharing transaction details would violate privacy laws and competitive interests. Federated learning models trained across banks can identify suspicious behaviors without exposing any single customer's transactions. Each bank benefits from collective intelligence while safeguarding its data.

The edge computing revolution amplifies federated analytics. IoT devices - smart thermostats, wearables, autonomous vehicles - generate torrents of data. Sending it all to the cloud is expensive, slow, and privacy-invasive. Federated edge computing processes data at the source. Your fitness tracker learns your exercise patterns locally. Only anonymized insights sync to the cloud.

Differential privacy excels when you need mathematical guarantees and centralized control. If you're publishing open datasets - census statistics, public health metrics, economic indicators - DP provides provable protection against re-identification. The guarantee holds no matter how much computing power or auxiliary data an attacker has. It is not absolute, though: the promise is a bound on how much any output's probability can shift because of one person's data, and that bound is only as tight as the epsilon you choose.

But DP has costs. Adding noise reduces accuracy. For small subgroups or rare events, the noise can swamp the signal. If you're studying a disease affecting fifty people nationwide, differential privacy might make meaningful analysis impossible. Utility trade-offs are unavoidable. You choose your epsilon, and you live with the blur.
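A quick calculation shows why small groups suffer. The sketch below assumes a counting query protected with Laplace noise of scale 1/ε, whose standard deviation is √2/ε; the helper `relative_noise` is an illustrative name.

```python
import math

def relative_noise(true_count: float, epsilon: float) -> float:
    """Standard deviation of Laplace(1/epsilon) noise as a fraction of the count."""
    noise_std = math.sqrt(2) / epsilon
    return noise_std / true_count

for count in (50, 5_000, 500_000):
    print(f"count={count}: noise is about {relative_noise(count, epsilon=0.1):.3%} of the value")
```

At ε = 0.1 the noise has a standard deviation of about 14, which is roughly 28% of a count of fifty but a negligible fraction of a count of half a million.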

Federated analytics shines when data can't or shouldn't move. Regulatory constraints - GDPR's restrictions on cross-border transfers, China's data sovereignty laws - can make centralization legally risky or outright illegal. Competitive dynamics prevent data sharing even when it's legal. Pharmaceutical companies won't pool clinical trial data with rivals, but they'll participate in federated studies. Federated systems navigate these barriers.

The downside is complexity. Coordinating distributed computation is harder than running queries on a single database. Network failures, version mismatches, and heterogeneous data formats create headaches. Many federated learning setups run in synchronized rounds, so nodes need compatible software and timely responses. In such a setup, one slow or buggy node can stall the entire round.

Security risks differ too. Differential privacy protects published outputs but doesn't secure the computation itself. If an attacker infiltrates your system before noise is added, they see raw data. Federated analytics protects raw data by design - it never centralizes - but individual nodes are vulnerable. A compromised hospital could leak its local data or poison the global model with malicious inputs.

Combining both techniques creates defense in depth. Run federated analytics with differential privacy applied locally at each node. Even if the coordinator is hacked or a node misbehaves, the noise masks individual contributions. This hybrid approach is becoming standard in sensitive domains like genomics and national security.
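A minimal sketch of the hybrid approach: each node adds its own Laplace noise to a local count, and the coordinator only ever sums noisy reports. It assumes each person's record lives at exactly one node, and the function names and hospital data are made up for illustration.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def node_report(local_records, predicate, epsilon: float, rng: random.Random) -> float:
    """Runs inside one institution; only this noisy count leaves the premises."""
    true_count = sum(1 for r in local_records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

def coordinator_total(reports) -> float:
    """The coordinator sums noisy reports and never sees a raw record."""
    return sum(reports)

# Hypothetical patient ages held by three hospitals.
hospitals = [
    [34, 61, 70, 45],
    [66, 72, 29],
    [58, 63, 81, 77, 40],
]
rng = random.Random(3)
reports = [node_report(h, lambda age: age >= 60, epsilon=1.0, rng=rng) for h in hospitals]
print(f"noisy total of patients aged 60+: {coordinator_total(reports):.2f}")  # true total is 7
```

The price of adding noise at every node is accuracy: the noise variances add up across nodes, so the total is blurrier than it would be if a single trusted party added noise once.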

The 2020 U.S. Census was differential privacy's highest-profile government deployment. The Census Bureau applied DP to all redistricting data, balancing accuracy for large populations with privacy for small communities. Critics worried noise would distort political boundaries. Proponents argued prior methods had already been defeated by linkage attacks. The release went forward, and billions of dollars in federal funding now flow based on DP-protected counts.

Apple deployed differential privacy in iOS 10 back in 2016, collecting keyboard usage and emoji patterns to improve QuickType suggestions. The system uses local differential privacy, where noise is added on-device before data leaves your phone. Apple never sees your raw typing, yet aggregate trends emerge from millions of users. Google had introduced a similar technique, RAPPOR, for Chrome telemetry two years earlier, in 2014.
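Local differential privacy is easiest to see through randomized response, the classic technique it builds on. The sketch below is that textbook mechanism for a single yes/no attribute, not Apple's or Google's actual algorithm; the 30% usage rate and the function names are assumptions made for the example.

```python
import math
import random

def randomize(bit: bool, epsilon: float, rng: random.Random) -> bool:
    """On-device step: report the true bit with probability e^eps / (e^eps + 1)."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1)
    return bit if rng.random() < p_truth else not bit

def estimate_rate(reports, epsilon: float) -> float:
    """Server step: undo the known flipping bias to estimate the true rate."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1)
    observed = sum(reports) / len(reports)
    # observed = p * rate + (1 - p) * (1 - rate), solved for rate
    return (observed - (1 - p)) / (2 * p - 1)

rng = random.Random(5)
true_bits = [rng.random() < 0.30 for _ in range(100_000)]  # 30% of users have the trait
reports = [randomize(b, epsilon=1.0, rng=rng) for b in true_bits]
print(f"estimated rate: {estimate_rate(reports, epsilon=1.0):.3f}")
```

Any single report is plausibly deniable, since a "yes" may be a flipped "no", yet the aggregate estimate lands close to the true rate once enough users contribute.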

Microsoft applies DP to Windows diagnostics. Millions of devices send crash reports and performance metrics, and differential privacy limits how much any single machine's report can reveal. Enterprise customers demanded proof that telemetry couldn't leak proprietary workflows. DP's mathematical guarantees satisfied legal and security teams where handwaving about anonymization had failed.

Healthcare research is embracing federated analytics. TriNetX DataWorks connects over 300 million de-identified patient records across healthcare organizations. Researchers query the network for cohorts matching specific criteria - say, diabetic patients over sixty who started a new medication. Each institution runs the query locally and returns counts. TriNetX aggregates results without ever centralizing PHI.

Finance is experimenting cautiously. Banks face a paradox: sharing data improves fraud detection and risk models, but regulators forbid exposing customer details. Federated learning pilots are training anti-money-laundering models across institutions. Early results show federated models match centralized accuracy while satisfying compliance teams. The technology hasn't scaled industry-wide yet, but momentum is building.

Europe's GDPR set the privacy bar high, and differential privacy helps organizations clear it. One of GDPR's core principles is data minimization: collect and process only what's necessary. Differential privacy offers a mathematical counterpart to that idea. By adding noise, you release only what a statistic needs while provably limiting how much the output reveals about any individual. Courts and regulators are starting to recognize DP as a valid de-identification technique, though case law is still evolving.

California's CCPA and its successor CPRA impose transparency requirements. Consumers can demand to know what data you collect and how you use it. Federated analytics simplifies compliance: if data never leaves the source, there's less to disclose. You're not "collecting" patient records from hospitals in a federated network; you're running distributed queries. That distinction could reshape regulatory interpretations.

Industry-specific rules add layers. HIPAA governs health data in the U.S., and federated systems align naturally with HIPAA's "minimum necessary" standard. Financial regulators are watching too. The SEC and FINRA care about data integrity and auditability. Differential privacy's formal guarantees, together with a logged privacy budget, give examiners something concrete to audit.

Global divergence complicates deployment. China's data localization laws require certain data types to stay within national borders. Russia, India, and others have similar mandates. Federated analytics naturally complies - data never crosses borders because it never centralizes. Multinational corporations are exploring federated infrastructure to operate legally in fragmented regulatory landscapes.

Standards bodies are catching up. NIST's 2025 guidelines codify best practices for evaluating DP implementations. ISO is drafting standards for federated learning. IEEE has working groups on privacy-preserving analytics. Interoperability remains a challenge - different vendors implement DP and federated protocols differently - but convergence is underway.

AI integration is accelerating. Training large language models on sensitive data is a minefield. Differentially private stochastic gradient descent (DP-SGD) clips each example's gradient and adds noise to the result during training, which limits how much the model can memorize about any individual record. Companies want to train on medical notes, legal documents, financial transactions - all high-value, high-sensitivity data. DP-SGD makes that more feasible, though usually at some cost in accuracy.
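The core of a DP-SGD update can be sketched without any machine learning framework. The code below assumes per-example gradients are already available as plain lists and shows only the clip-then-add-noise step; converting the noise multiplier into an epsilon requires a separate privacy accountant, which is omitted. The names `clip` and `dp_sgd_step` are illustrative.

```python
import math
import random

def clip(grad, max_norm: float):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]

def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One update: clip each example's gradient, sum, add Gaussian noise, average."""
    dim = len(weights)
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(g[k] for g in clipped) for k in range(dim)]
    # Noise scale is tied to max_norm, the most any one example can contribute.
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    batch_size = len(per_example_grads)
    return [w - lr * n / batch_size for w, n in zip(weights, noisy)]

rng = random.Random(0)
weights = [0.0, 0.0]
grads = [[3.0, 4.0], [0.3, 0.4], [-0.5, 0.2]]  # hypothetical per-example gradients
weights = dp_sgd_step(weights, grads, lr=0.1, max_norm=1.0, noise_multiplier=1.1, rng=rng)
print("updated weights:", [round(w, 4) for w in weights])
```

Clipping is what makes the noise meaningful: once every example's influence is capped at `max_norm`, noise proportional to that cap can hide whether any single example was present.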

Federated learning for LLMs is exploding. Imagine training a model across thousands of hospitals, each contributing local insights about rare diseases without sharing patient files. Or banks collaborating on fraud detection without exposing transaction logs. Federated AI systems could unlock datasets previously too sensitive to touch. The technical challenges - communication overhead, model convergence across heterogeneous data - are solvable with better algorithms and faster networks.

Scalability will test both technologies. Differential privacy's noise calibration becomes harder as dimensionality increases. High-dimensional queries - say, releasing correlations among thousands of variables - can require so much noise that results are useless. Researchers are developing adaptive algorithms that allocate privacy budgets strategically, spending more on important queries and less on marginal ones.

Federated analytics faces bandwidth and latency limits. Coordinating thousands of nodes in real time over unreliable networks is nontrivial. Edge computing helps by processing locally, but aggregating results still requires network hops. 5G and satellite internet will ease bottlenecks, but physical constraints remain.

Pitfalls loom if we get complacent. Differential privacy is only as strong as its implementation. Timing side-channel attacks and floating-point arithmetic bugs have already compromised DP systems in lab settings. A single coding error can leak information that the math says should stay hidden. Rigorous testing and formal verification are essential.

Federated systems are vulnerable to poisoning attacks. A malicious node can inject bad data or corrupted models, skewing global results. Byzantine-robust aggregation methods detect and discard outlier contributions, but sophisticated attackers can evade detection. Trust and authentication become critical.
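One simple robust aggregator is the coordinate-wise median, sketched below next to a plain mean for comparison. It is only one of several Byzantine-robust methods and, as noted above, a careful attacker who stays close to honest values can still bias it; the update vectors here are invented for the example.

```python
from statistics import median

def mean_aggregate(updates):
    """Plain averaging: a single extreme update can drag the result anywhere."""
    dim = len(updates[0])
    return [sum(u[k] for u in updates) / len(updates) for k in range(dim)]

def median_aggregate(updates):
    """Coordinate-wise median: extreme values in a minority of updates are ignored."""
    dim = len(updates[0])
    return [median(u[k] for u in updates) for k in range(dim)]

honest = [[1.0, 2.0], [1.1, 1.9], [0.9, 2.1], [1.0, 2.0]]
poisoned = [[100.0, -100.0]]  # one malicious node
updates = honest + poisoned

print("mean:  ", mean_aggregate(updates))
print("median:", median_aggregate(updates))
```

With one poisoned update out of five, the mean is pulled far from the honest cluster while the median stays at the honest consensus.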

Regulatory lag could stifle innovation. If lawmakers don't understand these technologies, they might ban beneficial uses or fail to prevent harmful ones. Privacy advocates worry that organizations will claim differential privacy while using weak parameters that provide minimal protection. Industry might lobby for lax standards. Balancing innovation with accountability requires informed policymakers and engaged civil society.

If you're a data scientist, start experimenting now. OpenDP and Google's DP libraries are free and well-documented. Apply differential privacy to internal analytics before publishing anything external. Learn how epsilon values affect your specific queries. Build intuition for the privacy-utility trade-off.

Privacy officers should update policies to reflect these technologies. Differential privacy can strengthen data protection impact assessments under GDPR. Federated architectures reduce breach risk by eliminating central honeypots. Document your privacy engineering choices; regulators increasingly expect technical safeguards, not just legal agreements.

Tech managers face a decision: centralize and protect, or federate and isolate. If your data is already centralized and you need to release statistics, differential privacy is the move. If you're designing new systems and data localization matters - due to regulation, competition, or user trust - federate from the start. Retrofitting federation is harder than building it in.

Consumers should demand these protections. When a service asks for your data, ask how they'll protect it. "We anonymize" is not good enough. Differential privacy offers proof, not promises. Federated systems never centralize your data at all. As awareness spreads, market pressure will push adoption faster than regulation alone.

The data landscape is shifting beneath our feet. Organizations that master privacy-preserving analytics will unlock value competitors can't touch. Those that ignore these techniques will drown in compliance costs or lose user trust. Differential privacy and federated analytics aren't silver bullets, but they're the best tools we have to reconcile privacy with progress. Learn them, deploy them, and demand them. The future of data depends on it.
